This announcement comes as Renesas pushes toward the next generation of its embedded AI (e-AI) technology, which is designed to accelerate the growing intelligence of endpoint devices.

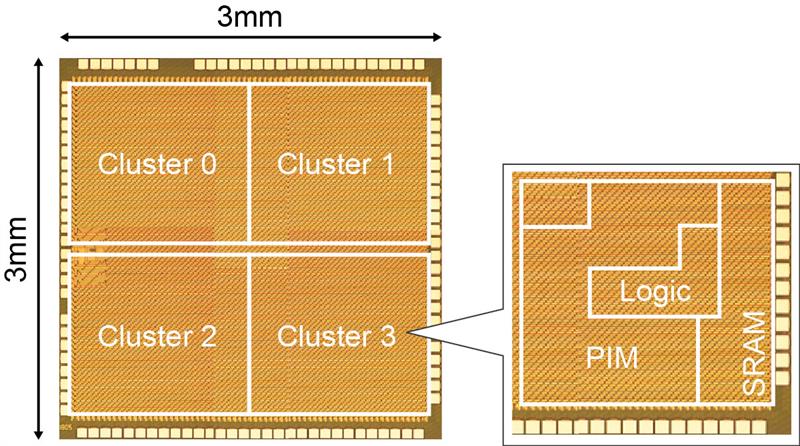

A Renesas test chip featuring this accelerator has achieved a power efficiency of 8.8 TOPS/W (tera-operations per second per watt), which Renesas claims is in the industry's highest class. The accelerator is based on a processing-in-memory (PIM) architecture, in which multiply-and-accumulate operations are performed in the memory circuit as data is read out from that memory.
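To put the efficiency figure in more concrete terms, a TOPS/W rating can be converted into an average energy per operation, since one watt is one joule per second. The short Python sketch below does that arithmetic. The function name is hypothetical, and the result says nothing about how Renesas defines or counts an "operation", which the announcement does not specify.

```python
def energy_per_op_femtojoules(tops_per_watt: float) -> float:
    """Convert a TOPS/W rating into average energy per operation, in femtojoules.

    Hypothetical helper for illustration only. It assumes 1 TOPS = 1e12
    operations per second, so TOPS/W is 1e12 operations per joule.
    """
    ops_per_joule = tops_per_watt * 1e12
    joules_per_op = 1.0 / ops_per_joule
    return joules_per_op * 1e15  # 1 J = 1e15 fJ


# The 8.8 TOPS/W figure quoted for the test chip
print(f"{energy_per_op_femtojoules(8.8):.1f} fJ per operation")
```

At 8.8 TOPS/W this works out to roughly 114 femtojoules per operation on average.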

To create the new AI accelerator, Renesas developed three technologies. The first is a ternary-valued (-1, 0, 1) SRAM-structure PIM technology that can perform large-scale CNN (convolutional neural network) computations. The second is an SRAM circuit combined with comparators that can read out memory data at low power. The third is a technology that prevents calculation errors caused by manufacturing process variations. Together, these technologies reduce both the memory access time in deep learning processing and the power required for multiply-and-accumulate operations. As a result, according to Renesas, the new accelerator achieves the industry's highest class of power efficiency while maintaining an accuracy of more than 99 percent in a handwritten character recognition test (MNIST).
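As a rough software analogy for the ternary-valued scheme, the Python sketch below shows how a multiply-and-accumulate reduces to additions and subtractions once weights are limited to -1, 0, and 1. It is not Renesas's circuit or algorithm: the function names, the threshold-based quantization rule, and the sample values are all assumptions made for illustration.

```python
import numpy as np


def ternarize(weights, threshold):
    """Map real-valued weights to {-1, 0, +1}.

    Assumed rule (not from the announcement): weights whose magnitude is at or
    below `threshold` become 0; the rest keep only their sign.
    """
    w = np.asarray(weights, dtype=np.float64)
    return (np.sign(w) * (np.abs(w) > threshold)).astype(np.int8)


def ternary_mac(inputs, ternary_weights):
    """Multiply-and-accumulate with ternary weights.

    No multiplications are needed: inputs paired with +1 are added, inputs
    paired with -1 are subtracted, and inputs paired with 0 are skipped.
    """
    x = np.asarray(inputs)
    t = np.asarray(ternary_weights)
    return x[t == 1].sum() - x[t == -1].sum()


# Illustrative values only
weights = [0.8, -0.05, -0.6, 0.2]
activations = [3, 5, 2, 4]

t = ternarize(weights, threshold=0.1)   # [1, 0, -1, 1]
result = ternary_mac(activations, t)    # (3 + 4) - 2 = 5
assert result == int(np.dot(activations, t))
```

Compared with a binary (0, 1) weight, the extra -1 state lets a single stored value express an inhibitory contribution as well as an excitatory or absent one.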

Until now, the PIM architecture could not achieve adequate accuracy for large-scale CNN computations with single-bit calculations, because the binary (0, 1) SRAM structure could only handle data with the values 0 or 1. Furthermore, manufacturing process variations reduced the reliability of these calculations, so workarounds were required. Renesas has now developed technologies that resolve these issues. The company says it will apply them, as leading-edge technology for future AI chips, to the next generation of e-AI solutions for applications such as wearable equipment and robots, which require both performance and power efficiency.