The first experiment to demonstrate how evolution could build hardware from scratch took advantage of what were then comparatively new devices: FPGAs. Because an FPGA's internal logic can be changed simply by rewriting its configuration bitstream, it became practical for computers to emulate evolution directly in hardware. Software could mutate the bitstream randomly and apply selective pressure to drive the design towards a fairly simple specification.

In the experiment run by Adrian Thompson at the University of Sussex in the mid-1990s, the goal was for the evolved circuit to discriminate correctly between two audio-frequency tones fed into it, which it achieved after several thousand rounds of mutation.
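The mutate-and-select loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Thompson's actual setup: the "bitstream" is a hypothetical 64-bit list, and the hypothetical `fitness` function simply counts bits that match a target pattern, standing in for a score that would really be measured from the configured chip. Each generation keeps the fitter half of the population and replaces the rest with randomly mutated copies.

```python
import random

def fitness(bits, target):
    """Hypothetical fitness: number of bits that match a target pattern.
    In a real experiment this score would come from measuring how the
    configured chip behaves (for example, how well it separates two tones)."""
    return sum(b == t for b, t in zip(bits, target))

def mutate(bits, rate, rng):
    """Flip each configuration bit independently with probability `rate`."""
    return [b ^ 1 if rng.random() < rate else b for b in bits]

def evolve(target, pop_size=20, generations=1000, seed=0):
    """Evolve random bitstreams towards `target`; return (best, generations used)."""
    rng = random.Random(seed)
    n = len(target)
    rate = 1 / n  # on average, one bit flip per offspring
    pop = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]
    for gen in range(generations):
        # Selection: rank by fitness and keep the better half as parents.
        pop.sort(key=lambda b: fitness(b, target), reverse=True)
        if fitness(pop[0], target) == n:
            return pop[0], gen
        parents = pop[: pop_size // 2]
        # Reproduction: refill the population with mutated copies of parents.
        children = [mutate(rng.choice(parents), rate, rng)
                    for _ in range(pop_size - len(parents))]
        pop = parents + children
    pop.sort(key=lambda b: fitness(b, target), reverse=True)
    return pop[0], generations

if __name__ == "__main__":
    rng = random.Random(42)
    target = [rng.randint(0, 1) for _ in range(64)]  # hypothetical specification
    best, gens = evolve(target)
    print(f"matched {fitness(best, target)}/64 bits after {gens} generations")
```

Because the parents survive unchanged, the best score never falls from one generation to the next; the random mutations supply the variation and the sorting step supplies the selective pressure.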

The emergence of field-programmable analogue arrays, such as the devices made by Anadigm, and of the more basic programmable transistor arrays developed at NASA and the University of Heidelberg in the late 1990s and 2000s, made it possible to transfer the idea to analogue circuitry. Here, software mutates the connections between analogue elements, as well as the resistances used to tune them, in an attempt to build circuits that match a target transfer function.
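To make the idea of tuning component values against a target transfer function concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the topology is fixed (R1 in series, feeding R2 in parallel with a 100 nF capacitor to ground), only the two resistances are evolved rather than the connections, and an analytic formula stands in for measuring a real programmable analogue array. The search is a simple (1+1) evolution strategy: each generation mutates the parent's resistances multiplicatively and keeps the child only if it matches the target response at least as well, with the step size adjusted by the classic "one-fifth success rule".

```python
import math
import random

C = 100e-9  # fixed capacitor value in farads (assumption)
FREQS = [10 ** (1 + 0.25 * k) for k in range(13)]  # 10 Hz to 10 kHz, log-spaced

def gain(r1, r2, f):
    """|H(f)| for R1 in series feeding R2 in parallel with C (hypothetical circuit)."""
    w = 2 * math.pi * f
    z = r2 / (1 + 1j * w * r2 * C)  # impedance of R2 in parallel with C
    return abs(z / (r1 + z))

def target(f, dc_gain=0.5, cutoff=1000.0):
    """Target response: first-order low-pass, gain 0.5, -3 dB point at 1 kHz."""
    return dc_gain / math.sqrt(1 + (f / cutoff) ** 2)

def error(r1, r2):
    """Sum of squared differences, in dB, between candidate and target responses."""
    return sum((20 * math.log10(gain(r1, r2, f)) - 20 * math.log10(target(f))) ** 2
               for f in FREQS)

def evolve(generations=2000, seed=1):
    rng = random.Random(seed)
    parent = [10_000.0, 10_000.0]  # starting guess for R1, R2 in ohms
    parent_err = error(*parent)
    sigma = 0.3  # mutation step size, in natural-log units
    for _ in range(generations):
        # Multiplicative (log-normal) mutation keeps resistances positive.
        child = [r * math.exp(rng.gauss(0, sigma)) for r in parent]
        child_err = error(*child)
        if child_err <= parent_err:
            parent, parent_err = child, child_err
            sigma *= 1.22  # success: take bigger steps
        else:
            sigma *= 0.95  # failure: take smaller steps (balances near 1/5 success)
    return parent, parent_err

if __name__ == "__main__":
    (r1, r2), err = evolve()
    print(f"R1 = {r1:.1f} ohm, R2 = {r2:.1f} ohm, error = {err:.2e}")
```

Under these assumptions the exact answer is R1 = R2 ≈ 3.18 kΩ: a DC gain of 0.5 requires equal resistors, and the cutoff 1/(2π (R1‖R2) C) must equal 1 kHz. The evolved values should land close to that without the search ever being told the formula.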

The same ideas moved into the virtual domain, resulting in experimental EDA tools that ran mutated circuits through SPICE simulations to gauge their performance. This SPICE-in-the-loop approach also underpinned commercial tools, such as those developed by Barcelona Design and Neolinear, although these used heuristics to supply the core circuit topologies, which numerical optimisation then refined.

Another approach, which may become common in the very long term, is ‘evolution in materio’, a term coined in 2002 by Julian Miller from the University of York and Keith Downing of the Norwegian University of Science and Technology. The idea is that applying voltages and other electromagnetic stimuli to a material changes its structure over time, so that a combination of mutation and selection pressure can build useful electrical circuits within it. Miller and Downing proposed the idea of a ‘programmable matter array’.

The problem, however, is building matter that can be programmed in useful ways. Although evolution in materio is inspired by nature, only biological organisms have the complexity needed to evolve into elaborate forms using a combination of DNA, RNA and proteins. Nothing in the artificial world yet matches that level of complexity. But some man-made materials exhibit enough non-linear behaviour to be interesting. Miller and Downing suggested using liquid crystals because they change alignment and structure in response to an applied voltage.

In 2014, Mark Massey and colleagues from the University of Durham tried using carbon nanotubes (CNTs), exploiting one of the attributes that has stymied the use of these long, highly conductive molecules in conventional electronics.

In principle, CNTs would make excellent replacements for the metal wiring on chips because they are inherently extremely thin and electrons flow through them easily once inside the tube. But no-one has found a way to deposit them as wires that fit the Manhattan layout typical of chips. The best researchers can currently do is sprinkle them onto a surface and hope some align correctly, although DNA-assisted lithography may help here.

The Durham team initially used the material to evolve a configuration that could solve the ‘travelling salesman problem’ for up to 10 cities, then found a way to make the unordered nanotubes learn simple logic functions, such as half adders, training them with a process similar to that used to program artificial neural networks. To make the material, they mixed nanotubes at low concentration with an insulating polymer and spread the mixture across glass.

The nanotubes stiffen the resulting film and raise its conductivity where their concentration is higher. The material does not evolve as such: changes in voltage do not move the nanotubes around. Instead, the training process finds existing high-conductivity paths that can be harnessed to perform computations. Because the conductivity of these paths seems to depend on the relative strength of the applied electric field, the material can handle a wider range of functions than a network of simple conductive paths would suggest. Further work on a mixture that includes liquid crystals could make it possible to build materials that alter their form during training.

Evolvable hardware may make more sense at a higher level of abstraction, reconfiguring electronic building blocks that are more complex than transistors, passives or logic gates. In 1999, Harold Abelson, working at MIT, developed the idea of the amorphous computer: a large array of identical microprocessors connected wirelessly, rather than through dedicated mesh networks, to form a network of parallel processors able to adapt to changes.

The idea has been revived in recent years as a possible way of dealing with the higher likelihood of failures in nanometre-scale technologies. Evolutionary algorithms could find alternative networks of processors if key elements fail, although in practice other techniques may prove quicker at rewiring around a failure.

Robots sent into dangerous areas or deep space, where manual repair is impossible, could prove to be the main beneficiaries of evolvable hardware. But slow response is a hurdle for the evolutionary algorithms that would allow these robots to recover from damage.

In a recent experiment, a team from Pierre and Marie Curie University in Paris, working with the University of Wyoming, found a way to speed up the process by which a six-legged robot learns new walking gaits after an injury. Rather than learning to walk from scratch, the robot was programmed with a table of possible gaits derived from simulations of different combinations of leg movements. It would select movements compatible with its remaining legs, then refine them in real time to find a new way of moving around. The robot could even react to changes in its environment, such as a highly polished floor.
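The table-driven recovery strategy can be sketched as follows. This is a much-simplified stand-in written for illustration, not the Paris/Wyoming team's algorithm: the gait table, its six-number descriptors (here, the fraction of time each leg touches the ground), the predicted speeds and the damage model are all hypothetical. The robot tries the gait with the best current prediction, measures how fast it actually moves, and spreads the prediction error to gaits with similar leg usage, so gaits that lean on the damaged leg are quickly downgraded. It stops once a tested gait comes within 90% of the best remaining prediction.

```python
import math
import random

def make_gait_table(n=200, seed=0):
    """Hypothetical table built 'in simulation': each entry is
    (descriptor, predicted speed), where the descriptor gives the fraction
    of time each of the six legs spends on the ground."""
    rng = random.Random(seed)
    table = []
    for _ in range(n):
        desc = tuple(rng.random() for _ in range(6))
        predicted = sum(desc) / 6 + rng.uniform(0, 0.2)  # made-up speed model
        table.append((desc, predicted))
    return table

def adapt(table, measure, threshold=0.9, length_scale=0.3, max_trials=20):
    """Try gaits on the damaged robot until one performs close to the best
    remaining prediction. Returns (index of best gait, {index: measured speed})."""
    estimates = [speed for _, speed in table]
    tried = {}
    for _ in range(max_trials):
        # Try the untested gait that currently looks most promising.
        i = max((k for k in range(len(table)) if k not in tried),
                key=lambda k: estimates[k])
        tried[i] = measure(i)
        surprise = tried[i] - estimates[i]
        # Spread the prediction error to gaits with similar leg usage.
        for k, (desc, _) in enumerate(table):
            if k not in tried:
                d2 = sum((a - b) ** 2 for a, b in zip(desc, table[i][0]))
                estimates[k] += surprise * math.exp(-d2 / (2 * length_scale ** 2))
        estimates[i] = tried[i]
        best = max(tried, key=tried.get)
        untried = [estimates[k] for k in range(len(table)) if k not in tried]
        if not untried or tried[best] >= threshold * max(untried):
            break
    return max(tried, key=tried.get), tried

if __name__ == "__main__":
    table = make_gait_table()
    broken_leg = 2  # hypothetical injury

    def measure(i):
        # Hypothetical damage model: gaits relying on the broken leg slow down.
        desc, predicted = table[i]
        return predicted * (1 - desc[broken_leg])

    best, tried = adapt(table, measure)
    print(f"chose gait {best} after {len(tried)} trials, speed {tried[best]:.2f}")
```

The design choice mirrors the article's point: the expensive search happens in simulation beforehand, so the damaged robot only needs a handful of physical trials rather than relearning from scratch.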

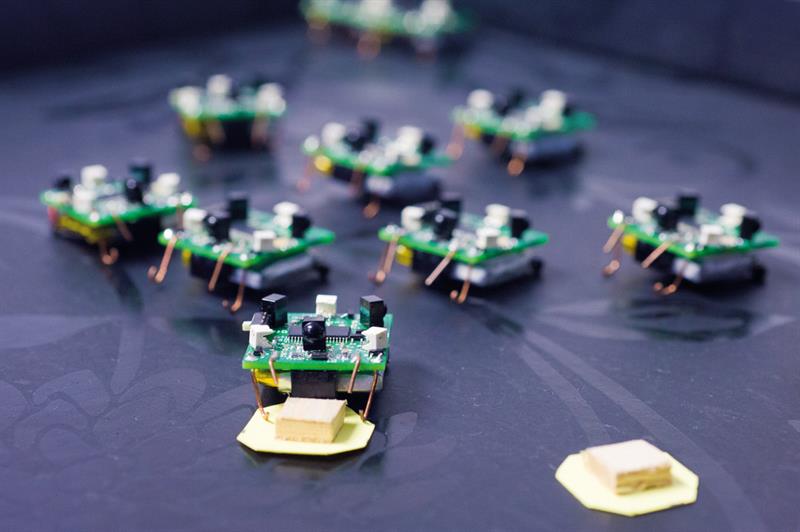

Some work is going further, using the idea of modular robotics – a mechanical analogue to amorphous computing, where detachable units that contain motors and processors can reassemble themselves into new shapes to cope with a problem. For example, a bipedal robot might remake itself in the form of a snake to crawl into a tight space to look for survivors trapped in the wreckage of an earthquake.

Although modular robotics remains at an early stage of development, with most experiments being purely conceptual (showing how swarms of tiny unconnected robots can form different shapes), some researchers are looking to the possibility of programmable matter. This would use nanomechanical structures to build much more finely grained modular robots and allow true evolution in materio. But the concept is well beyond what current technology can support.

In robot swarms, not only does the behaviour of individual robots change constantly, but so does that of the group.