Even among the humanoid finalists at recent RoboCups, the annual competition in which robots try to demonstrate their skills at the beautiful game, the moves are often less than pretty.

At a conference on embodied AI organised by the University of Michigan, Gabe Margolis, a PhD student at the Massachusetts Institute of Technology (MIT), noted that the machines still go through a rigid series of steps: they walk up to the ball, line themselves up and then kick it somewhere. What they have not yet shown in these championships is the ability to dribble the ball, something that comes naturally to human players.

Much of the difficulty stems from how poorly walking robots adapt to subtle differences between what the control algorithm expects and what actually happens when a foot makes contact with the ground or, alternately, with the ball. Even wheels prove tough to control away from the flat floors of the typical factory or warehouse, where most mobile robots are still expected to operate. At Farm-ng, head of autonomy David Weikersdorfer is designing wheeled robots to trundle up and down rows of plants in greenhouses and smaller fields. Though simulation is important for higher-level planning, low-level motion control relies heavily on data captured from the real world and its rough terrain, even in a more controlled indoor environment. Wheels can easily slip on wet or dusty ground, and divots throw off the alignment of the whole platform as it rolls along.
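One common way to detect the kind of wheel slip Weikersdorfer describes is to compare the speed implied by wheel rotation with an independent estimate of how fast the platform is actually moving. The sketch below computes a longitudinal slip ratio. The ground-speed source (visual odometry, say), the function names and the 0.15 threshold are illustrative assumptions, not Farm-ng’s method.

```python
# Hypothetical sketch: flag wheel slip by comparing wheel odometry with an
# independent ground-speed estimate (e.g. from visual odometry).


def slip_ratio(wheel_omega: float, wheel_radius: float, ground_speed: float) -> float:
    """Longitudinal slip ratio.

    Positive when the wheel turns faster than the platform moves (spinning on
    wet or dusty ground), negative when it turns slower (skidding).
    """
    wheel_speed = wheel_omega * wheel_radius  # speed implied by wheel rotation, m/s
    denom = max(abs(wheel_speed), abs(ground_speed))
    if denom < 1e-9:
        return 0.0  # wheel and platform both stationary
    return (wheel_speed - ground_speed) / denom


def is_slipping(ratio: float, threshold: float = 0.15) -> bool:
    """The threshold is an illustrative assumption, not a tuned value."""
    return abs(ratio) > threshold


# Wheel rotation implies 2.0 m/s, but the platform only covers 1.5 m/s.
s = slip_ratio(wheel_omega=10.0, wheel_radius=0.2, ground_speed=1.5)
print(f"slip ratio {s:.2f}, slipping: {is_slipping(s)}")
```

A ratio rather than a raw speed difference keeps the same threshold meaningful whether the robot is creeping along a row or moving at full speed.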

“There is a lot to do in terms of accurate tyre-ground physics. The interaction with the ground is non-trivial and often neglected,” Weikersdorfer explained at the Actuate conference last autumn.

Conveyor belts are supposed to move at a constant rate. But, according to Rajat Bhageria, founder and CEO of food-preparation automation startup Chef Robotics, conveyor speed turns out to be quite spiky, which can make it hard for picking robots to grab objects from them reliably.
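A hedged illustration of why spiky belt speed matters: a picker that assumes the nominal speed will misjudge where objects are, whereas one that tracks the belt’s own encoder counts follows the spikes. The sketch assumes a belt fitted with an encoder; the class name, the millimetres-per-count figure and the five-sample window are hypothetical, and this is not a description of Chef Robotics’ software.

```python
from collections import deque


class BeltTracker:
    """Hypothetical sketch: track a conveyor from its encoder rather than
    assuming the nominal belt speed is constant."""

    def __init__(self, mm_per_count: float, window: int = 5):
        self.mm_per_count = mm_per_count
        # Short history of (time_s, encoder_count) so the speed estimate
        # follows spikes instead of averaging them away.
        self.samples = deque(maxlen=window)

    def update(self, t: float, counts: int) -> None:
        self.samples.append((t, counts))

    def speed_mm_s(self) -> float:
        if len(self.samples) < 2:
            return 0.0
        (t0, c0), (t1, c1) = self.samples[0], self.samples[-1]
        return (c1 - c0) * self.mm_per_count / (t1 - t0)

    def object_position(self, pos_at_detection_mm: float, counts_at_detection: int,
                        lookahead_s: float = 0.0) -> float:
        """Current position of an object seen by the camera, plus a short
        extrapolation to cover the arm's reaction time. Needs at least one sample."""
        _, counts_now = self.samples[-1]
        travelled = (counts_now - counts_at_detection) * self.mm_per_count
        return pos_at_detection_mm + travelled + self.speed_mm_s() * lookahead_s


tracker = BeltTracker(mm_per_count=0.5)
for t, counts in [(0.0, 0), (0.1, 200), (0.2, 300)]:  # belt slows between samples
    tracker.update(t, counts)
print(tracker.object_position(100.0, counts_at_detection=0, lookahead_s=0.1))
```

Measured displacement does most of the work here; the speed estimate only covers the short gap between the last encoder reading and the moment the gripper closes.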

Such minute differences between theory and practice call for the ability to sense changes quickly and react to them. The question is how many sensors that takes. Does the robot need an array of accelerometers and other position sensors to go alongside its cameras and other perception sensors? Not necessarily.

Proprioception

Systems are increasingly taking advantage of proprioception, an internal awareness of body position and movement. Vector-control algorithms for electric motors rely on knowing the rotor position accurately in real time. The controllers can work this out simply by measuring changes in current flow and applying those measurements to an internal model of the motor. “You can think of this as the feeling in the robot’s joints,” explained Margolis.
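As a rough illustration of estimating rotor position from electrical measurements, the sketch below uses a simple voltage-model (back-EMF) estimator for a permanent-magnet motor: subtract the resistive and inductive voltage drops from the applied voltage, and what remains, the back-EMF, points along the rotor angle. It assumes a surface-mount motor with equal d- and q-axis inductance, known commanded voltages and illustrative parameter values. Production drives typically wrap this in a more robust observer, and the approach breaks down near standstill, where the back-EMF vanishes.

```python
import math


def estimate_rotor_angle(v_ab, i_ab, i_ab_prev, dt, R, L):
    """Back-EMF (voltage-model) estimate of the electrical rotor angle.

    Assumes a surface-mount PMSM (equal d- and q-axis inductance L) and
    works in the stationary alpha-beta frame:
        v = R*i + L*di/dt + e,  e = w*psi*(-sin(theta), cos(theta))
    """
    # Subtract the resistive and inductive drops; what is left is back-EMF.
    e_alpha = v_ab[0] - R * i_ab[0] - L * (i_ab[0] - i_ab_prev[0]) / dt
    e_beta = v_ab[1] - R * i_ab[1] - L * (i_ab[1] - i_ab_prev[1]) / dt
    # Valid for positive speed; near standstill the back-EMF is too small to use.
    return math.atan2(-e_alpha, e_beta) % (2 * math.pi)


# Synthetic check with illustrative motor parameters.
R, L, psi, dt = 0.5, 1e-3, 0.01, 1e-4
omega, theta_true = 300.0, 1.2          # electrical rad/s, rad
i_prev, i_now = (2.0, -1.0), (2.1, -0.9)
emf = (-omega * psi * math.sin(theta_true), omega * psi * math.cos(theta_true))
v = tuple(R * i_now[k] + L * (i_now[k] - i_prev[k]) / dt + emf[k] for k in range(2))
print(round(estimate_rotor_angle(v, i_now, i_prev, dt, R, L), 6))  # 1.2
```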

Though position feedback is now all but built into electric motor drives, the artificial tendons of future soft robots may take advantage of similar internal senses. Several years ago, Disney Research put stretchable cords into artificial tentacles. Attached to strain sensors, these cords could provide feedback that let software reconstruct a virtual model of how the tentacles had bent and stretched.
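A minimal way to turn strain readings into a bent shape is a planar constant-curvature model: a cord running a distance d from the neutral axis stretches by ε = κd, so each segment’s curvature κ follows from its strain, and chaining the resulting arcs traces the tentacle’s outline. This is a simplified, hypothetical sketch (a single bending plane, one sensor per segment, invented offsets and lengths), not Disney Research’s reconstruction method.

```python
import math


def reconstruct_shape(strains, seg_len, offset):
    """Planar constant-curvature reconstruction.

    strains: one bending strain per segment (positive bends towards +y)
    seg_len: segment length in metres
    offset:  assumed distance of the strain cord from the neutral axis
    Returns the segment end points and the final tip heading (rad).
    """
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for eps in strains:
        kappa = eps / offset  # bending strain = curvature * offset
        if abs(kappa) < 1e-12:
            # Straight segment.
            x += seg_len * math.cos(heading)
            y += seg_len * math.sin(heading)
        else:
            # Circular arc: integrate (cos h, sin h) while h grows by kappa*s.
            new_heading = heading + kappa * seg_len
            x += (math.sin(new_heading) - math.sin(heading)) / kappa
            y -= (math.cos(new_heading) - math.cos(heading)) / kappa
            heading = new_heading
        points.append((x, y))
    return points, heading


pts, tip_heading = reconstruct_shape([0.02, 0.02, 0.02], seg_len=0.05, offset=0.004)
print(pts[-1], tip_heading)
```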

Proprioception in motor-driven robots is helping to drive reinforcement-learning strategies. A half-metre-tall robot that Google DeepMind researchers modified to run a neural network showed greater agility on the artificial turf of a mini football field, and the approach might yet let the machine learn to dribble a ball. Using the same Transformer architecture as language models, Professor Jitendra Malik’s team at the University of California at Berkeley altered the software of a Digit humanoid made by Agility Robotics and sent it on hikes along rough trails in the hills overlooking the campus.

Proprioception only gets you so far. In designs like those of Malik’s team, an “egocentric” camera plays an important role in helping the robot sense its own position on stairs or tilted surfaces. This points to the increasing importance of image sensors in robot design, a trend that has luckily coincided with the phone industry driving down their cost.

Many warehouse robots use a combination of lidar and cameras to look around the space they are in. But cameras are taking on greater importance as AI underpins more of the higher-level control duties. Lidar has the major advantage of sensing obstructions more clearly, but it remains a relatively expensive and bulky instrument. Companies such as Ambi Robotics have made camera data the priority when gathering in-field data from the arms their machines use. And Nvidia’s latest AI models for generating synthetic robot-training data under different lighting and atmospheric conditions focus on producing video imagery.

However, Ambi’s work has its roots in projects such as Dex-Net at UC Berkeley, which built a dataset of point-cloud models of objects of many different shapes. In simulation, these 3D representations helped train robots to work out the many ways in which their hands or grippers could pick the objects up and move them. Those objects were modelled as solid and rigid. Where robots still need to make progress is in handling softer or friable materials. Motor-based proprioception is of little use if the gripper is already at crushing force by the time the robot has worked out that it has picked up a raspberry.
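Dex-Net itself used more elaborate, uncertainty-aware grasp-quality metrics, but the basic geometric test behind a two-finger grasp on a rigid object can be sketched simply: a pair of contacts is antipodal if the line joining them lies inside the friction cone at both contacts. The function below assumes point contacts, outward surface normals and a Coulomb friction coefficient `mu`; the names are illustrative.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _norm(a):
    return math.sqrt(_dot(a, a))


def is_antipodal(p1, n1, p2, n2, mu):
    """True if a two-finger grasp at contacts p1, p2 (outward surface normals
    n1, n2) keeps the squeeze axis inside both Coulomb friction cones."""
    half_angle = math.atan(mu)  # friction-cone half-angle

    def inside_cone(squeeze_dir, outward_normal):
        # The finger pushes into the surface, i.e. along -outward_normal.
        cos_a = -_dot(squeeze_dir, outward_normal) / (_norm(squeeze_dir) * _norm(outward_normal))
        return math.acos(max(-1.0, min(1.0, cos_a))) <= half_angle

    axis = _sub(p2, p1)
    if _norm(axis) == 0.0:
        return False
    return inside_cone(axis, n1) and inside_cone(_sub(p1, p2), n2)


# Opposite faces of a 50 mm box: a clean antipodal pair.
print(is_antipodal((0, 0, 0), (-1, 0, 0), (0.05, 0, 0), (1, 0, 0), mu=0.5))  # True
```

The test says nothing about how hard to squeeze, which is exactly the gap the raspberry example exposes: a rigid-object model happily accepts a grasp that would crush soft produce.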

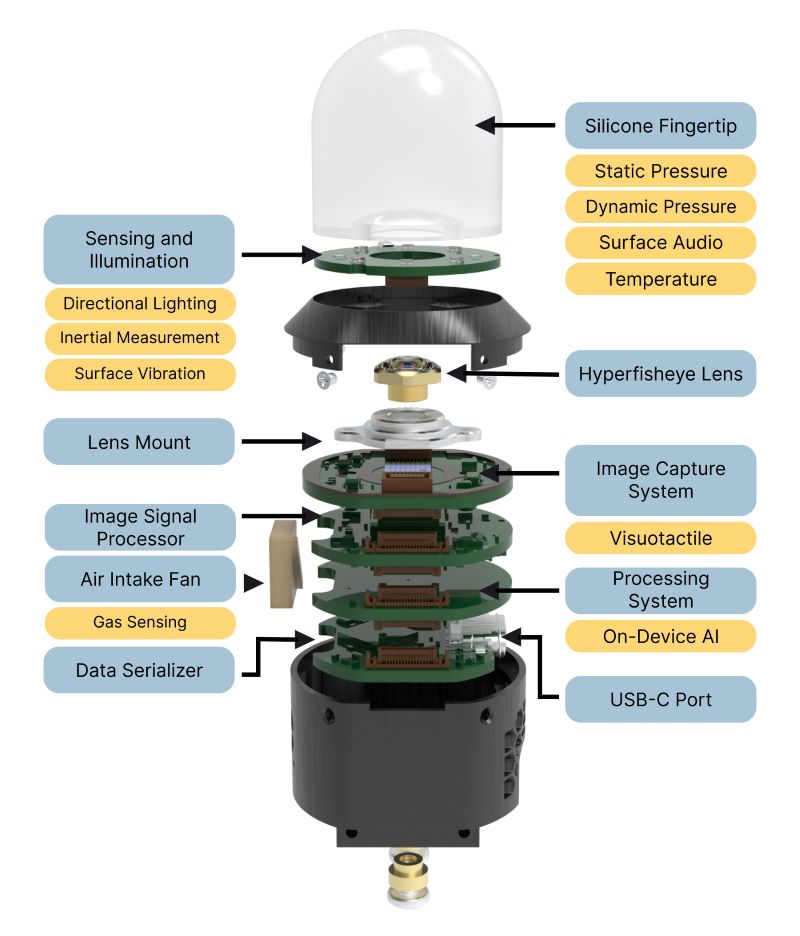

Above: The Digit 360 has been designed for use as a fingertip with sensors that can detect vibration, pressure and even gases.

Image sensors

This is where image sensors may prove crucial in less obvious ways. One way to improve a robot’s ability to sense the object it is touching or holding is to cover its fingers with a skin made of elastomer. Conductive or magnetic sensors spread throughout the membrane transmit data on how the object is deforming the soft skin.

Researchers at KAIST in South Korea proposed embedding tiny MEMS microphones in a sandwich of silicone and hydrogels. A neural-network model in the sensor controller uses source localisation to work out where the robot has made contact with another object.
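KAIST’s system uses a neural network for this step. For comparison, the classical way to localise a tap from several microphones is time difference of arrival (TDOA): search for the point whose predicted differences in arrival time best match the measured ones. The sketch below swaps in that technique; the microphone layout, patch size and wave speed in the skin are hypothetical values chosen for illustration.

```python
import math


def locate_contact(mic_xy, arrival_times, wave_speed, step=0.5e-3, size=0.02):
    """Grid-search TDOA localisation over a square patch of skin.

    Only arrival-time differences are used, so the moment of contact
    does not need to be known.
    """
    measured = [t - arrival_times[0] for t in arrival_times]
    n = int(round(size / step)) + 1
    best, best_err = None, float("inf")
    for ix in range(n):
        for iy in range(n):
            x, y = ix * step, iy * step
            dists = [math.hypot(x - mx, y - my) for mx, my in mic_xy]
            predicted = [(d - dists[0]) / wave_speed for d in dists]
            err = sum((p - m) ** 2 for p, m in zip(predicted, measured))
            if err < best_err:
                best, best_err = (x, y), err
    return best


# Illustrative layout: four microphones at the corners of a 20 mm patch.
mics = [(0.0, 0.0), (0.02, 0.0), (0.0, 0.02), (0.02, 0.02)]
WAVE_SPEED = 10.0  # m/s; hypothetical value for a soft silicone/hydrogel stack
true_xy = (0.012, 0.007)
t_contact = 0.003  # unknown to the estimator
times = [t_contact + math.hypot(true_xy[0] - mx, true_xy[1] - my) / WAVE_SPEED
         for mx, my in mics]
print(locate_contact(mics, times, WAVE_SPEED))
```

A learned model can absorb effects this sketch ignores, such as a wave speed that varies through the layered materials, which is one plausible reason to prefer it in practice.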

In work from almost a decade ago, MIT researchers used a similar neural-network approach to detect changes in the light reaching an image sensor from inside a rubber-skin sandwich. In these sensors, commercialised by the spinout GelSight, a reflective coating on the inside of the rubber layer reflects light emitted by an LED. Changes in the light pattern as the surface bends around a grasped object let the network infer how the surface has deformed.
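The classical, non-neural way to recover shape from such images is photometric stereo: with a matte (Lambertian) surface lit from three known directions, each pixel’s three intensities determine its surface normal and albedo. The sketch below solves that per-pixel system with Cramer’s rule; the light directions and test values are assumptions, and it is not necessarily how GelSight’s products process their images.

```python
import math


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def surface_normal(light_dirs, intensities):
    """Lambertian photometric stereo for one pixel: solve L g = I, where the
    rows of L are light directions and g = albedo * unit normal."""
    det = _det3(light_dirs)
    g = []
    for col in range(3):
        m = [list(row) for row in light_dirs]
        for r in range(3):
            m[r][col] = intensities[r]  # Cramer's rule: swap in the intensities
        g.append(_det3(m) / det)
    albedo = math.sqrt(sum(c * c for c in g))
    return tuple(c / albedo for c in g), albedo


# Three hypothetical LEDs at 60 degrees elevation, 120 degrees apart in azimuth.
elev = math.radians(60)
lights = [(math.cos(elev) * math.cos(a), math.cos(elev) * math.sin(a), math.sin(elev))
          for a in (0.0, 2 * math.pi / 3, 4 * math.pi / 3)]

# Synthetic pixel: a slightly tilted patch of skin with albedo 0.8.
tilt = (0.1, -0.2, 1.0)
tilt_len = math.sqrt(sum(c * c for c in tilt))
n_true = tuple(c / tilt_len for c in tilt)
pixel = [0.8 * sum(l * n for l, n in zip(light, n_true)) for light in lights]
normal, albedo = surface_normal(lights, pixel)
print([round(c, 4) for c in normal], round(albedo, 4))
```

In a full pipeline the per-pixel normals would then be integrated into a depth map of the contact surface.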

From this basic sensor, GelSight and MIT developed sensors that wrap around the inner half of a robotic finger, with a pair of mirrors letting a single camera capture deformations across the inner surface. Last year saw the arrival of a domed version called Digit 360, developed in a project with Meta. Designed for use as a fingertip, it includes extra sensors to detect vibration, temperature, pressure and even gases. These fingertip sensors may be paired with additional egocentric cameras mounted in the robot’s wrists that focus on the objects being manipulated.

A characteristic of these new touch sensors is their reliance on on-device AI. Researchers such as Pieter Abbeel, director of the robot learning lab at UC Berkeley, see this distributed intelligence as a way of improving robots’ ability to react to situations and of reducing the effects of processing lag. He points to the animal nervous system as an example of why distribution might be the better approach: nerve bundles in the spinal cord react quickly to sensations without waiting for a response from the brain.
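A toy simulation can show why a fast local loop helps. Below, the shear load on a fingertip ramps up while a controller raises grip force just enough to keep the contact inside its friction cone, capped at a crush limit in the spirit of the raspberry example. The same controller runs either on every 1 ms tick with fresh data (a local reflex) or every 30 ticks on data 20 ticks old (a round trip to a central model). All forces, rates and delays are hypothetical, and this is not Abbeel’s method.

```python
def reflex_grip_force(grip_n, shear_n, mu, safety=1.3, max_force_n=2.0):
    """Raise grip just enough to keep the sensed shear inside the friction
    cone (with a safety margin), never beyond a crush limit."""
    required = safety * shear_n / mu
    return min(max(grip_n, required), max_force_n)


def count_slip_steps(update_every, delay_steps, steps=100, mu=0.8, grip0=0.5):
    """Simulate a 1 ms control tick while the load on the fingertip ramps up.

    update_every and delay_steps model how often, and how late, the grip
    controller sees the tactile signal. Returns the number of ticks in which
    shear exceeds the friction limit, i.e. the object slips.
    """
    grip, slips = grip0, 0
    for k in range(steps + 1):
        shear = 0.2 + 0.008 * k  # hypothetical load ramp, newtons
        if k % update_every == 0:
            sensed = 0.2 + 0.008 * max(0, k - delay_steps)
            grip = reflex_grip_force(grip, sensed, mu)
        if shear > mu * grip:
            slips += 1
    return slips


local = count_slip_steps(update_every=1, delay_steps=0)      # reflex in the fingertip
central = count_slip_steps(update_every=30, delay_steps=20)  # round trip to a central model
print(local, central)
```

The local loop never lets the object slip in this toy setup, while the slow, delayed loop does for many ticks, even though both run identical control logic.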

For Abbeel, a further benefit may come from distributing the job of training across multiple models running inside the robot, rather than trying to use reinforcement learning on a single, all-encompassing model. It is one more example of the ways in which robot sensing and behaviour are becoming intimately linked.