From the advent of the Dreadnought battleship in 1906 to the rise of nuclear weapons during the Cold War, militaries around the world have often talked about a ‘revolution’ in military affairs. In truth, while the technology changed, the way wars were fought did not, whether in Korea, Vietnam, the Falklands or Iraq.

Today, however, there is a growing belief that over the next 20 years or so we will experience a revolution in warfare, bringing more change than at any time in the past 50 years, from weapons’ capabilities to the way wars are conducted.

Revolutionary technologies, from new sensors and embedded computers to drones and robotics, are being combined with developments in artificial intelligence and big data, and together they will radically change the nature of warfare.

On the future battlefield we could see swarms of robotic systems acting as both sensors and weapons, as well as laser weapons, reusable rockets, hypersonic missiles and unmanned, autonomous vehicles.

In the UK, for example, the Ministry of Defence (MOD) is developing Directed Energy Weapons (DEW), a state-of-the-art class of weapons systems that operate without ammunition.

These laser weapons use high-energy beams of light to target and destroy enemy drones and missiles. They are expected to be trialled in 2023 on Royal Navy ships and Army vehicles and, once developed, could be operated by all three services.

As part of its “Novel Weapons Programme”, which is responsible for the trial and implementation of innovative weapons systems, the MOD has commissioned three Dragonfire demonstrators that will combine multiple laser beams to produce a single weapons system.

Whatever the weapons programme, however, underlying all these new technologies is the growing international competition between the likes of the US, China and, to a lesser extent, Russia.

“The marriage of rapid technological progress with strategic dynamism and hegemonic change could prove especially potent,” argues Michael O’Hanlon, a senior fellow and director of research at the Brookings Institution.

“The return of great-power competition during an era of rapid progress in science and technology could reward innovators, and expose vulnerabilities, much more than has been the case to date,” he suggests.

According to O’Hanlon, ‘revolutionary’ change is likely, with significant developments across all forms of technology, from communications to projectiles, propulsion and platforms.

The biggest revolution, however, is likely to come from AI, big data and autonomous systems.

When it comes to autonomous systems, leading defence contractors such as Boeing and Northrop Grumman are already building unmanned fighter jets. With no need for crew facilities, these drones will be able to offer the military greater payload and longer range. It is thought that Lockheed Martin’s F-35, which is now entering service in the US and around the world, could well be the last manned strike aircraft that most countries buy.

If that proves correct, drones look set to dominate the skies by 2050.

Ethical issues

Today we talk a lot about ‘a new normal’, and in defence, unmanned and autonomous systems look set to become everyday devices, deployed in all manner of situations.

But the use of autonomous vehicles and drones raises some interesting ethical issues.

How independent should drones become? Will decisions to engage an enemy still be made by “a man in the loop”, or will they be made by the robots themselves?

To address this, there is growing interest and work in developing ‘ethics’ software capable of querying decisions or determining whether the use of a specific weapon, in a particular situation, might violate the norms of a ‘just war’. The goal of such software is to aid, not replace, human decision-making.

Speaking at CogX earlier this year, Dr Ozlem Ulgen, an international lawyer specialising in autonomous weapons, explained that there was a long tradition of setting rules around forms of conflict.

“Regulating warfare, setting boundaries and establishing ethical underpinnings for warfare have a long tradition when it comes to warfare and by establishing such rules we regulate warfare and legitimise it.

“There are basic principles governing military engagement and the conduct of hostilities: combatants need to be mindful of situations, limit harm and show awareness. So the development of AI and machine learning and their use in warfare raises real and interesting challenges.”

The potential to use AI combined with autonomous machines on the battlefield is enormous.

Many regard AI as ‘very much’ the future, but questions are being asked as to whether AI, no matter how clever, can replace humans.

“Technology can only enhance and help humans by working alongside them,” argues Dr Vitor Jesus, Senior Lecturer in the School of Computing at Birmingham City University.

“I’m sceptical about the use of AI. When you look at its use in cyber security it tends to create more problems than solutions. While AI is a great tool when used to help humans, it is rather poor when deployed to replace them.”

Dr Jesus accepts that technology will certainly evolve, but adds: “I don’t believe that we will see the kind of breakthrough in technology that will be required in the next 5-10 years. I don’t think that AI techniques, and the models and maths behind them, will improve sufficiently.

“AI is great when it is used to help us better understand what we know well (patterns and images, for example); it does a good job. The problem comes when something isn’t found in ‘the catalogue’, i.e. a Black Swan event. We need a new way of thinking about machines and reasoning.”

He also makes the point that the growing complexity of systems brings additional problems.

“Hosting complex systems is a challenge and we know that when it comes to cyber security, complexity can see systems compromised.”

Where AI is designed to keep learning in operation, an attack could turn a system against its owner, to the benefit of the adversary, suggests Dr Jesus.

“Basically, you’ll have created an insider working within the system. Can we use AI to increase safety and reliability? Not yet. My simple message would be ‘don’t do it’. AI works as an advisor, but should not be allowed to make the final decision - a human needs to be responsible for that.”

Despite such concerns, defence systems designed to counter missiles already rely on robotic systems to defend assets such as warships, because reacting to a fast-moving threat like an incoming missile is now well beyond the capabilities of humans.

Both China and Russia are believed to be designing software that will remove the human from some decisions, so responsibility for actions, responses and ultimately deaths is destined to become “very, very, very diluted”, according to Emmanuel Go , a French air-force expert on robotic warfare, “and you can’t pin war crimes on a robot.”

Robots and autonomous systems raise a number of additional questions. Could their use make adversaries more inclined to strike civilian targets if they are unable to engage and take out military ones? Could they encourage countries to turn more quickly to military solutions and start more wars?

Technologies combined

According to Dr Bryan Wells, NATO’s Chief Scientist, “Rarely does an individual technology make the difference; it is when they’re combined. For NATO the key characteristics determining the future of technology in this space are: distributed, digital, interconnectedness, and intelligence, and we judge that AI, ML and autonomy will be the key areas we should be looking at over the next 5-10 years – looking beyond that, we see biotechnology and quantum coming to maturity.

“The pace of technology is accelerating but so too is the breadth of technology, which will give rise to greater choice for leaders who will be confronted with moral and ethical issues,” he said.

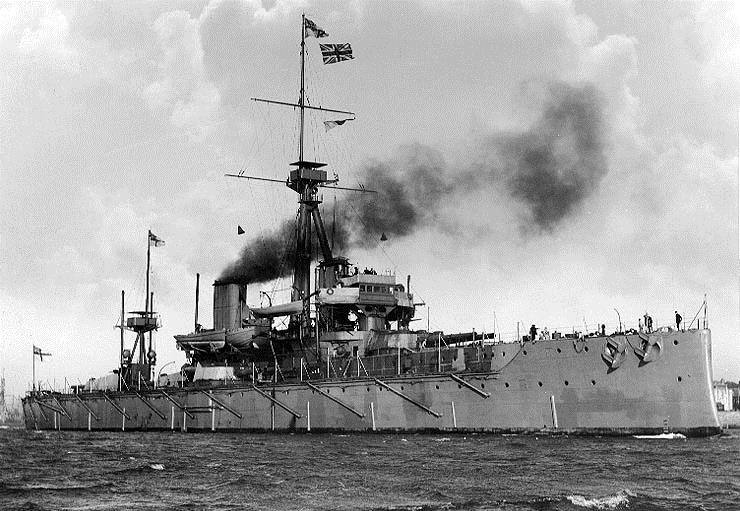

The launch of HMS Dreadnought, the Royal Navy’s all-big-gun battleship, in 1906 revolutionised naval warfare. It made all existing battleships obsolete and, in doing so, put Britain’s naval dominance at risk. Likewise, with today’s new technologies, can the West and NATO retain their lead in military robotics in the face of competition from the likes of China and Russia?

In the UK, our emerging AI capabilities could benefit from adopting current and near-term quantum technologies, according to a recent research paper published by the Defence Science and Technology Laboratory (Dstl) on behalf of the MOD.

According to the report, by embracing quantum technology the UK could enhance the pace, precision and pre-emption of military commanders’ decision-making.

Dstl’s report identified commercially available quantum computers, known as ‘annealers’, that could run an important and versatile class of AI software at speeds far in excess of those of conventional digital computers. This software is based on pattern-matching, which imposes extremely high loads on classical digital computer architectures; according to the report, the unique properties of a quantum annealer mean that it can execute a neural net in one machine cycle instead of thousands or millions.

According to the report, quantum neural nets could be used to perform Quantum Information Processing (QIP), searching archived, near-real-time and real-time data feeds and automatically looking for features of interest, anomalies and instances of change.

This would significantly reduce the time and cost of military data processing and increase the volume of data that can be handled.

Over the next 5 to 10 years, within the MOD and more widely, QIP technologies look set to be applied to: control systems in aircraft, missiles, fire control and defensive systems; sensor data processing such as data fusion; navigation; resolving signals in noise, interference and jamming; and AI situational understanding and pattern analysis.

Gary Aitkenhead, Dstl’s Chief Executive, said: “Quantum technology is a game-changer for defence and society, one that maintains the security of the UK and offers significant economic benefits.”

“The pace of change over the past 10-15 years has been rapid and the threat landscape, too, has changed markedly,” added Aitkenhead. “Our ability to harness the capabilities of science and technology will be fundamental and directly linked to our ability to deliver defence and security.”

Many countries are now upgrading their defence platforms after decades of asymmetric warfare in the Middle East, and their investment in new platforms increasingly incorporates AI and other tools that turn data into tactical information.

Investment in digital technology is accelerating, with the focus on developing faster, more agile platforms and systems. As a result, the world’s militaries increasingly have to engage with the commercial sector to identify technological innovation.

While some areas of military technology may not change dramatically in the coming years, the key, whatever the technology and the science behind it, is likely to be how individual technology trends interact with one another and what innovations arise in how they are employed on the battlefield.