In the US, the Pentagon has committed to spending $2 billion on AI over the next five years through the Defense Advanced Research Projects Agency (DARPA). Its OFFSET programme, for example, aims to develop drone swarms comprising up to 250 unmanned aircraft systems (UASs) and/or unmanned ground systems (UGSs) for deployment across a range of diverse and complex environments.

In China, defence and academic institutions are collaborating on AI and machine learning in growing numbers. Tsinghua University, for example, has launched the Military-Civil Fusion National Defense Peak Technologies Laboratory to create “a platform for the pursuit of dual-use applications of emerging technologies, particularly artificial intelligence.”

Russia has gone one step further and is creating a new city named Era, which is devoted entirely to military innovation.

The technopolis is currently under construction and, according to the Kremlin, the main goal of the research and development planned there is “the creation of military artificial intelligence systems and supporting technologies.”

“What we are seeing is a renaissance in interest in AI and machine learning (ML),” says Dr Andrew Rogoyski, Innovation Director at Roke Manor, a contract research and development business owned by Chemring.

“The initial surge in AI came in the 1940s and 50s and then again in the 1980s. Today, in what can be described as a ‘third renaissance’ we have the computing power and enough data to deliver on the promises made for the technology in the past. It’s a really exciting time in terms of what is possible.”

According to Dr Rogoyski, “When we talk about AI we tend to mean machine learning. You have to remember that AI is a vast subject and includes areas such as natural language processing, robotics, machine vision and data analytics.

“While the media tends to focus on ‘killer robots’, the use of ML in the military space takes in areas as diverse as logistics, surveillance, targeting and reconnaissance. Healthcare is one of the biggest costs for the military, as it needs to keep service personnel fit and ready for deployment, and ML can be used to optimise and tailor individual training schedules. As such, the application and use of AI and ML is extremely broad.”

The UK’s Ministry of Defence (MoD) sees autonomy and evolving human/machine interfaces as enabling the military to carry out its functions with much greater precision and efficiency.

A 2018 MoD report said that the ministry would be pursuing modernisation in areas such as “artificial [intelligence], machine-learning, man-machine teaming, and automation to deliver the disruptive effects we need in this regard.”

The MoD has various programmes related to AI and autonomy, including the Autonomy programme, which is looking at algorithm development, artificial intelligence and machine learning, as well as “developing underpinning technologies to enable next generation autonomous military-systems.” Its research arm, the Defence Science and Technology Laboratory (Dstl), unveiled an AI Lab last year.

“The MoD is focused on a variety of AI techniques, such as machine vision and robotics, across a number of different use cases,” explains Dr Rogoyski. “These range from threat intelligence to data science. The ministry is now having to operate in much the same way as it would if it were in the commercial world, which means it needs to be able to deploy these technologies fast enough to match deployments in the commercial space. Keeping up is a real challenge: you are looking at getting technology into operational use without taking five to ten years to procure it and going through the long, drawn-out cycles of the past.

“Ultimately, it will mean changing procurement strategies.”

In terms of weaponry, one of the best-known examples of autonomous technology currently under development is the Taranis armed drone, the “most technically advanced demonstration aircraft ever built in the UK,” according to the MoD.

“Whether it’s drones or autonomous vehicles, there’s a big push to develop technologies that protect servicemen,” explains Dr Rogoyski. “Whether that’s drones or route clearance vehicles, the aim is to move servicemen away from the front line and to allow technology to take their place.”

With the Royal Air Force and Royal Navy both 10 per cent short of their annual recruitment targets, and the Army more than 30 per cent short, the MoD also sees automation as a possible answer to this manpower shortage.

“Another area of interest, and one that is becoming increasingly important, is the use of ML in psychological operations,” explains Dr Rogoyski. “It may not have anything to do with ‘killer robots’ or drones, but these types of operations, via social media or through the use of fake news, are transforming the way we can influence the political will behind the use of military force.”

According to Dr Rogoyski, psychological operations are just one of a number of new ‘fronts’ that need to be addressed.

“Another is the security of a nation’s critical and increasingly connected infrastructure and the role of AI in protecting it. How can we project military power overseas when our entire infrastructure could be at risk from a cyber-attack, not just from other nation states but from non-government organisations?”

The MoD has a cross-government organisation, the Defence and Security Accelerator (DASA), which finds and funds exploitable innovation to support UK defence and security quickly and effectively.

Advances in AI and automation offer real opportunities, but exploiting them will require a fundamental shift in how these technologies are viewed and treated. Rather than treating them as something confined solely to research labs, the MoD has been urged to take a nimbler and more ambitious approach to using them to transform defence programmes: experimenting in order to try, fail, learn and succeed, while developing procurement processes that allow for more agile adoption.

The history of military innovation shows that operational advantage is secured more by understanding how best to use new technology than by developing the technology itself.

“A key issue for the successful adoption of both AI and ML is that they need to be explainable and come with a level of assurance,” says Dr Rogoyski. “Both are fundamental and are not solely confined to the military.

“If you supply a black box system and the user has no idea how or why it makes a decision, how can they trust it if, and when, it makes a mistake? Trust in weapons systems is critical, but then would you put your trust in a robotic surgeon or a banking system that made arbitrary decisions about your savings?

“AI needs disciplined thinking and the systems will need to operate in the way you expect, under specific circumstances. If they make a mistake you want to be able to understand why, so there’s an important link with post analysis and how a system has made a particular decision.”

Gathering and analysing the results of multiple limited implementations could give the MoD a clearer sense of direction and the ability to rapidly exploit AI developments in the commercial sector, paving the way for further military development.

Military use of AI

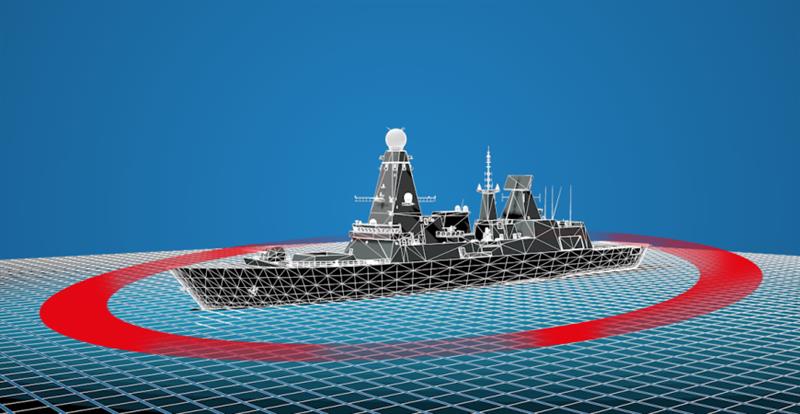

“Roke Manor is involved with a number of projects using AI,” says Dr Rogoyski. “We have developed STARTLE, for example, for users operating in a cluttered marine/air situation. Its situational awareness software continuously monitors and evaluates potential threats using a combination of AI techniques.

Above: Roke Manor's STARTLE uses AI to monitor and evaluate potential threats

“It operates by rapidly detecting and assessing potential threats, augmenting the human operator and providing enhanced situational awareness in a very complex environment.”

Elsewhere, Envitia, a UK geospatial and data company, is working with its partner BAE Systems Applied Intelligence to use artificial intelligence to task autonomous submersibles with hunting underwater mines for the Royal Navy.

Mine-hunting is currently carried out by a fleet of mine-hunter ships using sonar. The new AI-enabled submersibles will be able to scan an object, identify the threat and decide what to do with it much more quickly.

The Royal Navy’s Route Survey & Tasking Analysis (RSTA) project looks to adopt autonomous vehicles, open architectures and AI, with the intention of delivering an unmanned capability for routine mine countermeasure tasks in UK waters by the year 2022.

The outgoing First Sea Lord, Admiral Sir Philip Jones, said: “AI is set to play a key role in the future of the service. As modern warfare becomes ever faster, and ever more data-driven, our greatest asset will be the ability to cut through the deluge of information to think and act decisively.”

Above: Envitia is using AI to task autonomous submersibles with hunting for underwater mines for the UK's Royal Navy

Envitia is working with BAE Systems Applied Intelligence to deliver RSTA, one of the first applications to be built on NELSON, a data platform developed by the Royal Navy that provides coherent access to data generated by the Royal Navy at sea and ashore.

Envitia is also using its maritime geospatial toolkit to provide geospatial services to the application, ensuring RSTA has accurate and up-to-date maritime data for each mission.

As part of the Mine Countermeasures and Hydrographic Capability (MHC) programme, RSTA will use machine learning to analyse mission conditions and intelligently task a fleet of autonomous vehicles, improving the success rate of its missions over time.

Ethical dimension

The question of AI ethics has become increasingly important. The EU and a number of countries have for some time been engaging with AI ethics and the importance of civilian oversight of AI research, and have been encouraging more open research and dialogue between nations amid fears that unregulated AI could ultimately lead to an international arms race.

“The worries around AI are varied,” according to Nick Colosimo, Chief Technologist, BAE Systems. “One is the ease of weaponisation of commercial off-the-shelf technology; another is the growth in small vehicles, no bigger than a human hand, which could prove difficult to detect and counter. The growth in machine speeds is another issue: how will human intervention be managed with the development of ever faster machine-speed warfare?”

According to Adam Saulwick, a Senior Research Scientist with the Australian Government, “We are faced with a data deluge; information comes from natural engagement, human-machine interaction and so on, and we are faced with a plethora of problems when it comes to AI. How do we use that data ethically? Can we trust it? If the Western democracies look to use data correctly, can we be sure that their opponents will do so too?”

According to Dr Rogoyski, “AI and ethics is a difficult area. But it is crucial that we continue to research both the benefits and pitfalls of widespread AI application and implementation.

“In terms of norms of behaviour, momentum is building. Earlier this year the US published an AI strategy document in which it talked about the need for a thoughtful, human-centric approach to AI, which I think is both sensible and pragmatic.”

When it comes to ethics, Dr Rogoyski also turns the question around: “Is it ethical not to use AI if you can save lives and shorten conflict? If you are faced with a country or organisation with AI capabilities, is it ethical not to seek to match that capability? There is a duty as a military planner/strategist to keep up with your adversaries.”

Ultimately, who decides what is ‘ethical’?

“We know that both Russia and China see AI as a key element of their industrial and military strategies, and the scale of the investment they are making is striking,” notes Dr Rogoyski.

With investment in AI and machine learning set to accelerate over the coming years, we are entering a critical period in determining how militaries around the world will use AI. Whatever happens, the nature of warfare is changing, and that change will only accelerate as the technology becomes ever more pervasive.