While it may seem that AI is already ubiquitous, there is still a long way to go before it fulfils its promise. This raises many questions, but one of the most pertinent is ‘will AI be secure?’ In the embedded sector the topic of security in the IoT has been fiercely debated for over a decade and we can now say with some certainty that the IoT is well on the way to being made more secure. Manufacturers have access to guidance and best practice, not least because of the efforts of ETSI and free access to its work, such as the Technical Report ETSI TR 103 533, which gives an overview of the standards landscape for security in the IoT.

Following several surveys carried out between 2018 and 2019, the European Commission discovered that while it could identify over 200 organisations working on AI, only one of them was specifically addressing security as it applies to AI. This was identified as a major potential threat, looming on the horizon. Anyone familiar with the IoT will appreciate how consuming the subject of security can become. As more things become connected, the legal liability associated with introducing a node with poor security is, alone, enough to make manufacturers stop and think.

Considering that we can expect AI to soon be embedded in everything, from IoT nodes to cloud servers, the scale of the potential threat is apparent. Depending on which analyst report you consult, the AI market is expected to demonstrate a compound annual growth rate (CAGR) of as much as 50% over the next five years; some say it could be even higher. This means we are at an inflexion point in the evolution of AI. The time to address security is now.

With most ICT systems the security threat is identifiable and, often, direct. With AI, this is not necessarily the case. The nature of AI means that the threat could be introduced at various points. We can expect most IoT-like systems to employ a form of AI known as Machine Learning (ML), a subset of AI that is formed through acquiring, curating and presenting data to an algorithm to build a model. It is the model that is subsequently deployed in embedded software, but it then still needs to be trained for a specific application.

Threat assessment

In order to assess the scope of the potential threat, ETSI formed the world’s first standardisation initiative dedicated to securing AI. The ETSI Securing Artificial Intelligence Industry Specification Group (SAI ISG) was formed in September 2019. In January 2021 it released its first group report, giving an overview of the problem.

While the ultimate intention is to see standards produced that provide solid solutions to the threat, the initial objective must be to identify how AI might be used and how those use-cases may be susceptible to attacks.

As an example, which is indicative of the unique challenge AI systems present, it is currently uncommon for an AI system to be backed up or rolled back in the event of an attack causing total lockout. It may not even be possible to ‘switch off’ an AI system, in the traditional sense.

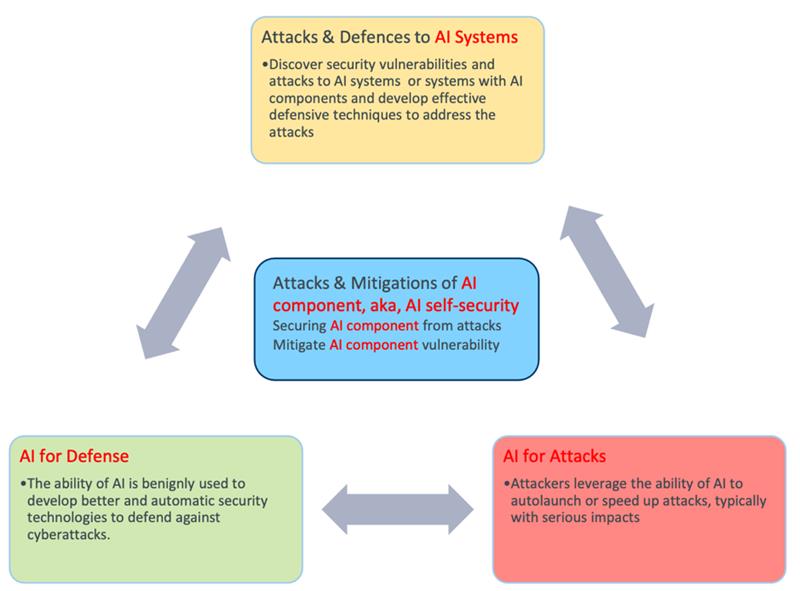

The SAI ISG has identified three scenarios that it feels put manageable parameters on this challenge: defending a system from an attack launched by another system that is using AI; using AI to make systems more defensible from all attacks; and making AI systems less susceptible to all forms of attack.

The concept of using a trained model to probe another system, looking for weaknesses, is compelling. This could include using AI to break the type of encryption being used (if any) by analytical methods, or it could simply involve using AI to create a more intelligent type of brute force attack.

Similarly, AI could be employed to defend a system, by being trained to identify attacks faster and respond more quickly. This is, perhaps, the most familiar scenario, as it extends the way antivirus software is used today. Arguably, antivirus software is much less common in edge devices and the IoT, but this is exactly where ML will be deployed.

It is inevitable that cybercriminals will enlist AI to help them in their attempts to break into secure systems. This will pit AI against AI, forcing the respective systems to go head-to-head in an unseen battle. Standards that help in the development of AI systems that understand this threat and can defend against it will be crucial in the future.

The unique threats

It would be understandable to view AI through the same lens as other ICT systems, but the real picture is very different. Networks operate in an inherently serial, linear way; protective mechanisms have been developed to operate in the same way. AI systems are not serial; they are much more parallel in nature, which means the threats present differently. Directly porting existing security measures across from other ICT systems would not provide adequate protection.

The SAI ISG is going back to the start, to look at AI from the bottom up, to identify where the weaknesses exist. This is a technology-centric, rather than application-centric, approach. The result is that the security solutions developed will not be specific to any given application of AI. In short, it will not matter what AI is being used for, as it will still be protected.

Once it is understood how to build a trusted AI solution, any application layer that sits on top of that will be inherently secure, thanks to the building blocks put in place. Simple firewall filtering or monitoring approaches, as we understand them today, would not provide that.

The ML lifecycle

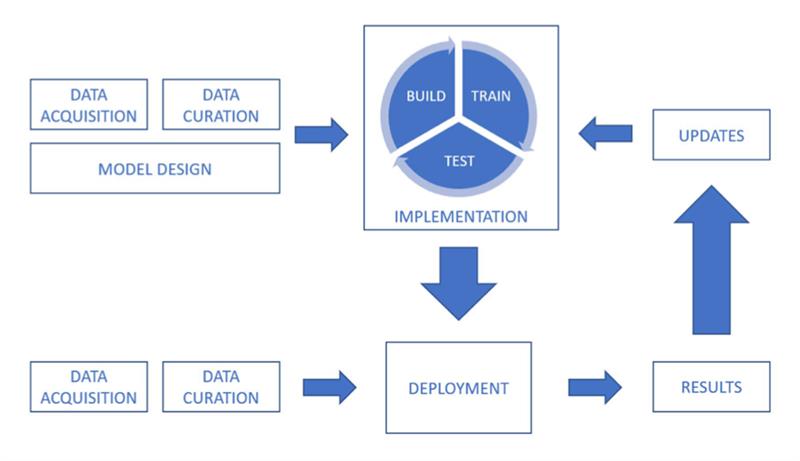

Unlike a traditionally developed system, which generally needs to protect itself from attacks only after it has been put into service, AI systems have many stages in their lifecycle that are susceptible to potential attack. These are outlined in Figure 2.

During the data acquisition and data curation stages, the data comes from many real-world sources. Any of these sources, or their data, could be influenced, or ‘poisoned’ to introduce a security weakness. This represents an integrity issue, which can extend to the training phase of the ML lifecycle. The deployment and inferencing stages are also susceptible to integrity issues, by attackers introducing data that forces the system to react or behave in an unplanned or unexpected way.
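One way to picture the integrity issue during acquisition and curation is a simple fingerprinting check. The sketch below is illustrative only and is not taken from any SAI ISG report: the record fields and the function names `fingerprint`, `build_manifest` and `find_tampered` are assumptions made for this example. It records a SHA-256 digest of each curated record and later reports any record that no longer matches.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Return a SHA-256 digest of a record, using a canonical JSON encoding."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_manifest(records: list) -> list:
    """Record one digest per curated record, in order."""
    return [fingerprint(record) for record in records]


def find_tampered(records: list, manifest: list) -> list:
    """Return the indices of records whose digest no longer matches the manifest."""
    if len(records) != len(manifest):
        raise ValueError("record count differs from manifest")
    return [
        index
        for index, (record, digest) in enumerate(zip(records, manifest))
        if fingerprint(record) != digest
    ]


# Hypothetical curated training data (field names are assumptions).
curated = [
    {"sensor": "temp-01", "reading": 21.4, "label": "normal"},
    {"sensor": "temp-01", "reading": 22.0, "label": "normal"},
    {"sensor": "temp-01", "reading": 85.3, "label": "fault"},
]
manifest = build_manifest(curated)

# Simulate an attacker relabelling one record before training starts.
received = [dict(record) for record in curated]
received[2]["label"] = "normal"

tampered = find_tampered(received, manifest)
print(tampered)
```

A check like this only detects changes made after the manifest was recorded. It does nothing about data that was already poisoned at its real-world source, which is part of what makes the problem specific to AI.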

What makes these issues more specific to AI systems is that they can be associated with and optimised for the method of learning employed. For example, AI and ML systems will typically use supervised, semi-supervised or unsupervised learning, where the data sets are labelled, partly labelled or unlabelled, respectively. In addition, reinforcement learning is a method that ‘rewards’ the system for a correct answer and ‘punishes’ it for an incorrect answer, but the concept of reward is entirely subjective and therefore prone to abuse.
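To make the point about supervised learning concrete, the following is a minimal sketch of a label-flipping attack against a toy nearest-centroid classifier. Everything in it is an assumption for illustration: the readings, the labels `normal` and `fault`, and the helper names `train_centroids` and `predict`. Real ML systems are far more complex, but the principle is the same.

```python
from statistics import mean


def train_centroids(samples):
    """Compute one centroid (mean value) per label from (value, label) pairs."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Return the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


# Hypothetical labelled sensor readings.
clean = [
    (1.0, "normal"), (1.2, "normal"), (0.8, "normal"), (1.1, "normal"),
    (3.0, "fault"), (3.2, "fault"), (2.8, "fault"), (3.1, "fault"),
]

# The attacker relabels two genuine fault readings as normal.
poisoned = [
    (value, "normal") if value in (2.8, 3.0) else (value, label)
    for value, label in clean
]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(poisoned)

probe = 2.3  # a reading the clean model treats as a fault
print(predict(clean_model, probe))
print(predict(poisoned_model, probe))
```

With the clean labels the probe reading is classified as a fault; after two labels are flipped, the same reading is classified as normal. The attack needs no access to the deployed system, only to the labelled data it learns from.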

The SAI ISG has six active work items, of which the Securing AI Problem Statement is the first. This will be followed by the AI Threat Ontology (which will define what might be considered an AI threat); the Data Supply Chain report (which will address the issues associated with maintaining and protecting the integrity of training data); and the Mitigation Strategy report (which will summarise and analyse the existing mitigations against threats to AI-based systems). The final two are the Security Testing of AI work item and the group report looking at the role of hardware in SAI.

The efforts of the SAI ISG will likely result in standards being developed that can help all users and beneficiaries of AI-based systems maintain a high level of security.

At the very least, it will promote greater conversation around the issues threatening that security. As with all standards produced by ETSI, any resulting standards and the findings of the SAI ISG will be freely accessible online.

Author details: Alex Leadbeater is Chair of ETSI SAI ISG