Raising the data rate to 9.6 Gbps, a 50% increase over the HBM3 Gen1 rate of 6.4 Gbps, the Rambus HBM3 Memory Controller can enable a total memory throughput of over 1.2 terabytes per second (TB/s) for recommender-system training, generative AI and other demanding data centre workloads.
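The 1.2 TB/s figure follows from simple arithmetic: peak bandwidth equals the per-pin data rate multiplied by the interface width, divided by 8 bits per byte. The sketch below reproduces it under two assumptions not stated in the article: that the figure refers to a single HBM3 device, and that the device uses the standard 1024-bit HBM3 interface (16 channels of 64 bits). It computes theoretical peak bandwidth only. Sustained throughput in a real system will be lower.

```python
# Minimal sketch: theoretical peak bandwidth of one HBM3 device.
# Assumption: the standard 1024-bit HBM3 interface (16 channels x 64 bits).
# Real sustained throughput is lower (refresh, bank conflicts, protocol overhead).

HBM3_INTERFACE_WIDTH_BITS = 1024  # 16 channels x 64 bits per channel


def peak_bandwidth_gbytes_per_s(pin_rate_gbps: float,
                                width_bits: int = HBM3_INTERFACE_WIDTH_BITS) -> float:
    """Peak bandwidth in GB/s = pin rate (Gb/s) x bus width (bits) / 8 bits per byte."""
    return pin_rate_gbps * width_bits / 8


gen1_rate = 6.4                 # Gbps, the HBM3 Gen1 data rate cited in the article
rambus_rate = gen1_rate * 1.5   # a 50% increase gives 9.6 Gbps

for rate in (gen1_rate, rambus_rate):
    print(f"{rate:.1f} Gbps -> {peak_bandwidth_gbytes_per_s(rate):.1f} GB/s")
```

Running it prints 819.2 GB/s at 6.4 Gbps and 1228.8 GB/s at 9.6 Gbps, which is roughly 1.23 TB/s and consistent with the "over 1.2 TB/s" claim.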

“HBM3 is the memory of choice for AI/ML training, with large language models requiring the constant advancement of high-performance memory technologies,” said Neeraj Paliwal, general manager of Silicon IP at Rambus.

“HBM is a crucial memory technology for faster, more efficient processing of large AI training and inferencing sets, such as those used for generative AI,” explained Soo-Kyoum Kim, vice president, memory semiconductors at IDC. “It is critical that HBM IP providers like Rambus continually advance performance to enable leading-edge AI accelerators that meet the demanding requirements of the market.”

HBM uses a 2.5D/3D architecture, in which DRAM dies are stacked vertically and the stack sits beside the processor on a silicon interposer, giving AI accelerators high memory bandwidth at low power. With improved latency and a compact footprint, HBM has become an integral part of AI training hardware.

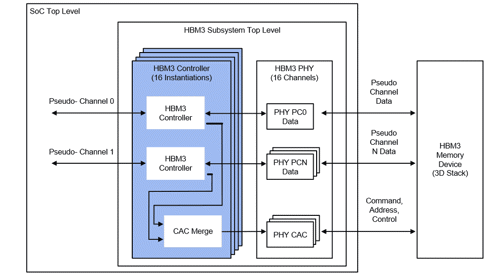

The Rambus HBM3 Memory Controller IP is designed for applications requiring high memory throughput, low latency and full programmability. The controller is modular and highly configurable, so it can be tailored to each customer's size and performance requirements. Rambus provides integration and validation of the HBM3 controller with the customer's choice of third-party HBM3 PHY.