Left out in the sun for too long, they went into the thermal shutdown mode intended to protect the SoCs and battery.

At the other end of the scale, the mass deployment of IoT sensors has focused attention on how much energy the processors and memories need to extract from a single battery charge. Both trends are making power and energy management primary concerns in embedded-systems design. But it’s far from a simple process to work out how a system will use power before it’s built.

Gate-level logic simulation can support highly detailed power modelling. Even then it is not necessarily completely accurate because traditional simulation and emulation techniques focused on simple algorithms that considered just switching power and not the subtle effects of leakage.

Leakage in digital circuits is a particular problem because it changes not just with process, voltage and temperature (PVT) but also with gate configuration.

In their work at North Carolina State University, Barkha Gupta and Rhett Davis showed that for a single silicon-on-insulator (SOI) process, a type that typically exhibits low leakage levels, the ratio of off-currents through an inverter versus a more complex NAND4 transistor stack varies dramatically with changes in process conditions at the fab. A leakier “fast” process corner on circuits running at 1V can exhibit off-current ratios 40 times those of the slow corner at very low temperatures, dropping to four-fold at 100°C.

There are two further problems. The first is that gate-level simulation is exceedingly slow. A system-level analyser should be able to assess the aggregate power consumption of potentially billions of gates operating at once, which is only possible at reasonable clock rates on expensive emulation hardware. The second, and perhaps even more troublesome, is that the analysis made possible by gate-level simulation comes far too late in the project. By the time the gates are in place, the hardware is more or less fixed, with perhaps some wriggle room for clock and power gating. There is little that the software can do to alter the outcome other than juggle power-down states. With better information earlier, architects might have made different architectural decisions. For example, a parallelised accelerator may wind up drawing excessive power when active, which may lead to unanticipated overheating. “In typical designs we see that accelerators are guzzlers. They need to be shut down when not needed or, if they are pipelined, turned off until data is available,” says Vojin Zivojnovic, CEO of software-tools company Aggios.

Standards for systems designers

About five years ago, the Silicon Integration Initiative (SI2) and the IEEE set about creating two standards that could be used by system designers alongside the widely used Unified Power Format (UPF) and which would remove one of the common drawbacks with today’s UPF-based flows. The first to reach completion, with a summer 2019 release, is IEEE 2416, which uses technology originally developed at IBM to work out how leakage and other aspects contribute to total power. The techniques in the standard make it easier to build models of leakage that remain accurate across a wide range of temperature and process conditions. However, that does not readily address the system-architecture problem.

One thing that did not become readily apparent until widespread adoption of methodologies based on UPF is the frequent mismatch between logical and physical dependencies. Very often logically separate blocks will run at the same voltage and so share the same voltage rails as it is more area efficient for them to do so. In an earlier era when the main technique for saving power was clock gating, where the clock is suspended from blocks with nothing to do, this was not a problem: it is relatively easy to insert the additional logic late in the project.

Now that designers routinely use power gating to suppress leakage in dormant cores, splitting and consolidating supply rails becomes much more important at an architectural stage. If a processor is power gated, it probably makes sense to take down its memory controller. However, there may be DMA-capable peripherals that need to stay awake during sleep and cannot access a power-gated memory. System-level simulations may indicate the cost of dumping the entire contents of memory and reloading them again after each sleep cycle imposes too much of a delay.
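The kind of conflict described above can be caught with a very simple check long before any UPF exists. The following is a minimal sketch, not any tool's actual method: the block names, the domain names `PD_CORE` and `PD_AON`, and the `gating_conflicts` helper are all hypothetical, chosen only to illustrate the idea that an awake block must not depend on a block whose supply domain has been gated.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Block:
    name: str
    domain: str  # hypothetical power domain (shared supply rail) the block sits in
    needs: List[str] = field(default_factory=list)  # blocks it must reach while awake


def gating_conflicts(blocks, awake, gated_domains):
    """Return (block, dependency) pairs where an awake block needs a gated block."""
    by_name = {b.name: b for b in blocks}
    conflicts = []
    for name in awake:
        for dep in by_name[name].needs:
            if by_name[dep].domain in gated_domains:
                conflicts.append((name, dep))
    return conflicts


# Illustrative floorplan: the CPU and memory controller share one rail,
# while a DMA-capable sensor interface sits in an always-on domain.
blocks = [
    Block("cpu", "PD_CORE", ["mem_ctrl"]),
    Block("mem_ctrl", "PD_CORE"),
    Block("sensor_dma", "PD_AON", ["mem_ctrl"]),
]

# Sleep state: only the sensor DMA stays awake and PD_CORE is power gated.
conflicts = gating_conflicts(blocks, awake=["sensor_dma"], gated_domains={"PD_CORE"})
print(conflicts)
```

Here the check flags that the sensor DMA still needs the memory controller, which suggests either moving the controller to its own rail or buffering data locally during sleep.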

UPF-driven simulations show up these potential mismatches as a design nears completion. Earlier power modelling, however, would help plan the architecture to ensure the design does not break later on when power controls are applied. It can also go further: the memory controller may be too power hungry to keep alive during long sleeps.

It might make more sense for those peripherals to cache data locally and only deliver the data to main memory when the system is in a high-activity state. Accurate power models available at an early stage would make those decisions easier to assess. The currently provisional IEEE 2415 standard seeks to deal with that problem, though it may take another year to get there and could end up being abandoned if it does not. The standard was originally expected to be completed this year, but Zivojnovic, who chairs the IEEE 2415 working group, says the work has expanded to encompass security considerations. The reason for this is that power controllers have control over an entire platform: low-priority hardware or software cannot be allowed to take an entire system down by programming in the wrong states.

Zivojnovic says the current standard, as well as the Aggios software, takes advantage of a description approach used in the Linux environment. This is a device tree that represents the various dependencies that each software task, such as one used to send data over a network interface, has within the system. If that task is active, all its sub-tasks and the hardware they access have to be active.
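The rule that an active task forces everything beneath it to be active amounts to a transitive closure over the dependency tree. The sketch below illustrates that idea only; it is not Linux device-tree syntax or the IEEE 2415 format, and the task and block names in `depends_on` are hypothetical.

```python
def required_active(task, depends_on):
    """Return every node that must be powered for `task` to run."""
    active, stack = set(), [task]
    while stack:
        node = stack.pop()
        if node not in active:
            active.add(node)
            # Follow the node's own dependencies; leaf nodes have none.
            stack.extend(depends_on.get(node, []))
    return active


# Hypothetical dependencies for a task that sends data over a network interface.
depends_on = {
    "net_send": ["tcp_stack", "eth_driver"],
    "tcp_stack": ["cpu", "dram_ctrl"],
    "eth_driver": ["eth_mac", "dma"],
    "dma": ["dram_ctrl"],
}

needed = required_active("net_send", depends_on)
print(sorted(needed))
```

Anything not in the returned set is a candidate for clock or power gating while the task runs.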

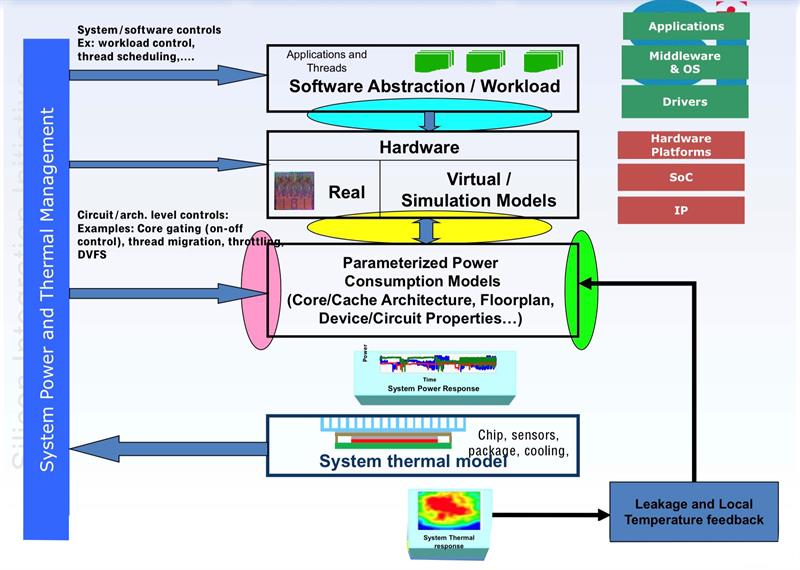

Above: A somewhat idealised simulation-based design approach

Simulation software uses that tree-based description to work out how much power that task will demand based on models of each block. Those models could be more detailed, but often the early power estimate for a block will be a combination of clock speed, voltage and throughput in a simple polynomial function. If the power is excessive, architects and developers can look more closely at which modules need to be active at any one time and rearrange the dependencies if they are not necessary.
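As a rough illustration of such a polynomial estimate, the sketch below assumes each block's power is the sum of a leakage-like term proportional to voltage, a clock term proportional to frequency times voltage squared, and a data term proportional to throughput times voltage squared. That functional form, the coefficients and the 100mW budget are assumptions made for the example and do not come from IEEE 2415, IEEE 2416 or any vendor's tool.

```python
def block_power_mw(f_mhz, volts, throughput, coeffs):
    """Early-stage estimate: leakage-like term + clock term + data-dependent term."""
    k_leak, k_clk, k_data = coeffs
    return (k_leak * volts
            + k_clk * f_mhz * volts ** 2
            + k_data * throughput * volts ** 2)


# Hypothetical per-block coefficients (k_leak, k_clk, k_data).
models = {
    "cpu": (2.0, 0.10, 0.0),
    "accel": (5.0, 0.25, 0.5),
}


def task_power_mw(active_blocks, f_mhz, volts, throughput):
    """Sum the estimates for every block a task keeps active."""
    return sum(block_power_mw(f_mhz, volts, throughput, models[b])
               for b in active_blocks)


budget_mw = 100.0  # assumed budget for the example
total = task_power_mw(["cpu", "accel"], f_mhz=200, volts=1.0, throughput=100)
cpu_only = task_power_mw(["cpu"], f_mhz=200, volts=1.0, throughput=100)
print(f"cpu+accel: {total:.1f} mW, over budget: {total > budget_mw}")
print(f"cpu only:  {cpu_only:.1f} mW, over budget: {cpu_only > budget_mw}")
```

With these made-up numbers, keeping the accelerator active pushes the task over budget, which is the kind of result that would prompt a closer look at whether the dependency is really needed.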

In a design flow that involves IEEE 2416 and 2415, UPF remains the core of the hardware design. UPF specifies the way in which logic and circuit simulators apply virtual power to the circuit design.

“As participants on the relevant IEEE teams, we watch those standards closely,” says Allan Gordon, product manager at Mentor for the Questa family of logic-simulation tools. He notes version 3 of the UPF standard has integration extensions to ensure the other IEEE power standards will work together.

It will be vital to keep the UPF description and the one used in a high-level power model in sync. But by modelling the dependencies early, which may be achievable in a multivendor environment if IEEE 2415 is approved in the near future and EDA vendors support it, tools will be able to show how different configurations work and maintain the connection through to the point the design goes to production.