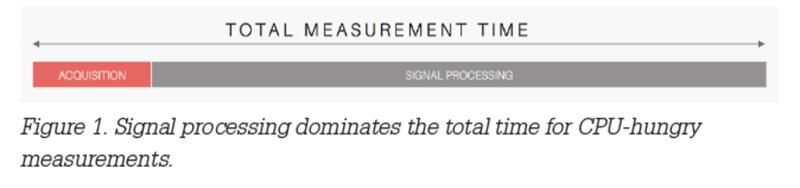

This is especially true for signal-processing-intensive measurements, such as RF, sound and vibration, and waveform-based acquisitions. Even in the fastest FFT-based spectrum analysers on the market, no more than 20 percent of the measurement time is actually spent acquiring the signal; the remaining 80+ percent is spent processing the signal for the given algorithm. For an instrument released five years earlier, the breakdown is even worse. The capital cost of instrumentation makes it impossible for test departments to keep investing in completely new test equipment simply to speed up the signal processing. This results in a large gap between the processing power of their systems and their true performance needs.
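To make the 20/80 split concrete, the sketch below estimates how much faster a whole measurement becomes when only the processing share is accelerated. It is an Amdahl-style back-of-the-envelope calculation, not a vendor formula; the function name `overall_speedup` and the 2x and 4x processing speedups are assumptions chosen for illustration.

```python
def overall_speedup(processing_fraction: float, processing_speedup: float) -> float:
    """Amdahl-style estimate: only the processing share of the time gets faster."""
    if not 0.0 <= processing_fraction <= 1.0:
        raise ValueError("processing_fraction must be between 0 and 1")
    if processing_speedup <= 0:
        raise ValueError("processing_speedup must be positive")
    # Acquisition time is unchanged by a faster processor.
    acquisition_fraction = 1.0 - processing_fraction
    new_time = acquisition_fraction + processing_fraction / processing_speedup
    return 1.0 / new_time


if __name__ == "__main__":
    # 80 percent processing, as in the split quoted above; the processing
    # speedup factors are hypothetical.
    for factor in (2.0, 4.0):
        print(f"{factor:.0f}x faster processing -> "
              f"{overall_speedup(0.8, factor):.2f}x faster measurement")
```

Under these assumptions, doubling processing speed shortens the measurement by a factor of about 1.67, and quadrupling it by a factor of 2.5. The acquisition share sets the ceiling, which is why processor upgrades pay off most on processing-heavy measurements.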

Meanwhile, the complexity of devices under test continues to escalate, driving demand for increased test coverage and speed, and hence for more processing power. A key benefit of PXI modular instrumentation is the ability to replace the CPU with the latest processing technology while keeping the remaining components (chassis/backplane and instrumentation) the same. For most use cases, keeping the instrumentation and upgrading the processor extends the life of a modular test system well beyond that of a traditional instrument.

The traditional approach is to rely on increased processor clock speed to achieve faster signal processing, but the trend towards ever faster clock speeds is running into physical limitations. With the clock speed of processors plateauing, will traditional instruments be able to rise to the challenge of reducing test times when faced with increased complexity? In order to continue to see increased performance, the test and measurement industry needs to look at adopting many-core processor architectures. Until now, most automated test engineers have used technology such as Intel Turbo Boost to increase the speed of a single core on a quad-core processor and reduce the test times of sequential software architectures, but the gains from this technology are levelling off too.

Certain test application areas are prime candidates for leveraging the power of many-core processor technologies, and the semiconductor industry is a clear example. In The McClean Report 2015, IC Insights researchers examine many aspects of the semiconductor market, including the economics of the business. They state, "For some complex chips, test costs can be as high as half the total cost…longer test times are driving up test costs." They go on to note, "Parallel testing has been and continues to be a big cost-reduction driver."

The semiconductor industry is not alone in adopting parallel test. You would be hard-pressed to find a wireless test system that tests fewer than four devices at a time. Many-core processors are also primed to impact any industry that must test the wireless connectivity or cellular communication protocols of a device. It is no secret that 5G is currently being researched and prototyped, and this technology will greatly exceed the bandwidth capabilities of current RF instrumentation.

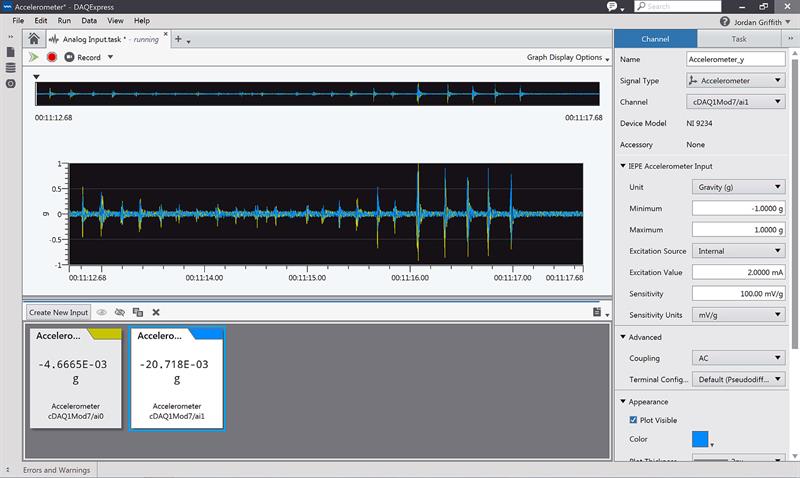

As well as the increased demands being placed upon the processing power of instrumentation, the capability to move data sets between instrumentation and processor is becoming more important. The approach of traditional, boxed instrumentation is to perform the signal processing on board the instrument, which limits the ability to customise data processing and prevents access to the full raw data the instrument acquires. The limited bandwidth between instrument and host computer restricts the host to post-processing the data, which makes real-time signal analysis impossible.

These issues are becoming more apparent as we have to acquire and process larger data sets. Again, the development of 5G is an example of this. The industry expects to start developing production-ready automated test systems for 5G in 2018. It will be essential that these test systems are built on a platform that can handle the increase in bandwidth and can transfer full data sets from instrumentation to processor. A solution must therefore address both the need for increased processor performance and the challenge of increasing system bandwidth between processor and instrumentation.

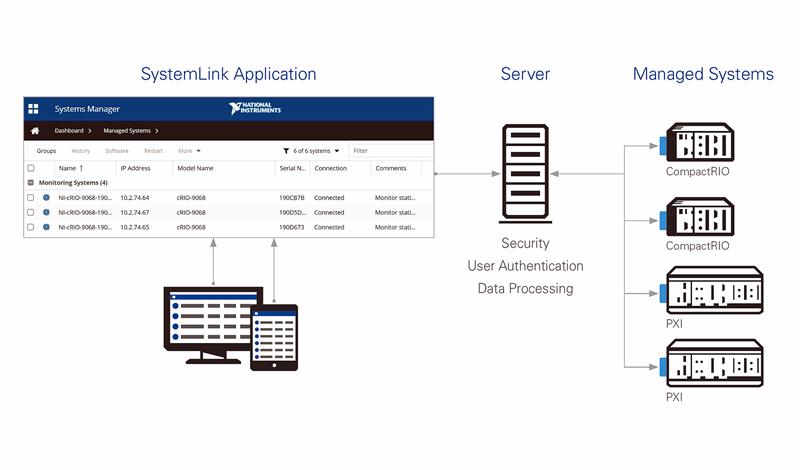

Fortunately, the test and measurement industry has responded with the creation of the PXIe Gen 3 standard, built upon PCIe Gen 3 technology, alongside the adoption of many-core architectures for data processing. The latest innovation in the PXI platform is the release of the industry's first PXI Express Gen 3 chassis, along with the first PXI Express embedded controller to be powered by an Intel Xeon 8-core processor. The combination of twice the processing power and twice the system bandwidth delivers the highest performing system on the PXI platform.

Looking again at the semiconductor industry, chip complexity is escalating at a phenomenal rate. As such, engineers need to choose a measurement platform that is capable of shifting these large amounts of data from measurement hardware to processor, ensuring the data can be analysed, processed and stored. The backplane has long been a key differentiator of the PXI platform. The architecture, built upon PCI and PCI Express technology, provides a powerful interface to the controller, where the processing can be performed. In addition, the inclusion of shared sample clocks and dedicated trigger lines makes it highly suited to test and measurement systems. Rapid advancement in the PC industry has seen the PCI Express standard evolve, with each generation raising the maximum data bandwidth. The PCI Express Gen 3 standard goes further still, offering 1 GB/s per lane of communication. The new NI PXIe-1085 has 24 lanes of PCIe Gen 3, allowing the transfer of 24 GB/s between instrumentation and the controller.
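The bandwidth figure follows directly from the lane count, as the short sketch below shows. It treats the quoted 1 GB/s per lane as a theoretical peak and ignores protocol overhead; the helper names and the 12 GB data set are hypothetical values chosen only for illustration.

```python
def aggregate_bandwidth_gb_s(lanes: int, per_lane_gb_s: float) -> float:
    """Theoretical peak bandwidth: lanes multiplied by the per-lane rate."""
    if lanes <= 0 or per_lane_gb_s <= 0:
        raise ValueError("lanes and per_lane_gb_s must be positive")
    return lanes * per_lane_gb_s


def transfer_time_s(data_gb: float, bandwidth_gb_s: float) -> float:
    """Best-case time to move data_gb gigabytes at the given bandwidth."""
    if data_gb < 0 or bandwidth_gb_s <= 0:
        raise ValueError("data_gb must be >= 0 and bandwidth_gb_s must be > 0")
    return data_gb / bandwidth_gb_s


if __name__ == "__main__":
    # 24 lanes at 1 GB/s each, as quoted in the text.
    peak = aggregate_bandwidth_gb_s(24, 1.0)
    # The 12 GB data set is a hypothetical example size.
    print(f"peak: {peak:.0f} GB/s, 12 GB moves in {transfer_time_s(12.0, peak):.2f} s")
```

Sustained throughput in a real system will be lower than this peak, so the result is best read as an upper bound when sizing a test system.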

With all this data being moved from instrumentation to processor, the capability to process it is essential. Many-core processing has been an essential feature of server architectures for a number of years, and this trend is now finding its way down to consumer electronics. The new PXIe-8880 implements a many-core processor on the PXI platform, allowing test and measurement systems to take advantage of improved processing capabilities. To make the most of this added processing capability, careful planning and advanced software architectures may be required. Alternatively, an inherently parallel software development environment, such as LabVIEW, can automatically parse the code and execute it across multiple cores of the processor.
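In a text-based language, spreading work across cores has to be written explicitly. The sketch below is one minimal way to do it with Python's standard library, handing each acquired channel to a separate worker process. It is an illustration only and does not represent how LabVIEW or any PXI driver schedules work; the functions `rms` and `process_channels`, and the synthetic sine-wave channels, are assumptions.

```python
import math
from concurrent import futures
from typing import List, Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of one channel's samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def process_channels(channels: Sequence[Sequence[float]],
                     make_executor=futures.ProcessPoolExecutor) -> List[float]:
    """Run rms() on every channel as a separate task, preserving channel order.

    With the default ProcessPoolExecutor each task runs in its own worker
    process, so CPU-bound work can occupy several cores at once.
    """
    with make_executor() as pool:
        return list(pool.map(rms, channels))


if __name__ == "__main__":
    # Eight synthetic channels stand in for acquired waveforms (hypothetical data).
    channels = [
        [math.sin(0.01 * n * (k + 1)) for n in range(50_000)]
        for k in range(8)
    ]
    for index, level in enumerate(process_channels(channels)):
        print(f"channel {index}: RMS = {level:.4f}")
```

One task per channel suits parallel test, where each device or channel can be analysed independently. Work with dependencies between steps needs the more careful planning the text mentions.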

The test and measurement industry is facing all-time high demands for increased test performance whilst continuing to deliver reductions in test times. The traditional approach of fixed-functionality, boxed instrumentation is struggling to keep pace with these demands. Fortunately, the PXI platform has proven it can meet these challenges and continues to improve the way engineers build their test systems. These latest releases highlight the capability of the platform to keep pace with these ever-increasing demands.

Author details

Adam Foster is Senior Product Marketing Manager, Automated Test and Aaron Edgcumbe is Regional Marketing Engineer, Automated Test, National Instruments