The problem for the neural network was primarily one of size. The technology of the time could only support networks of limited size, even using specially designed devices, such as a 2000-neuron chip presented by Bell Labs at the 1991 International Solid-State Circuits Conference.

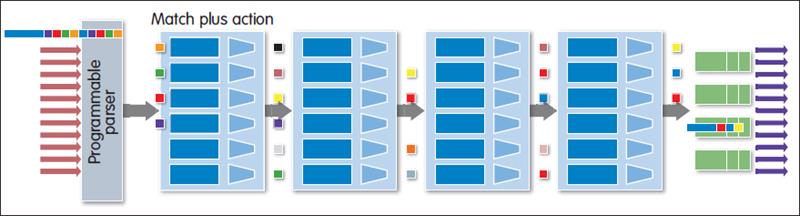

Experiments with neural networks in the 1990s and 2000s used a fairly flat structure, with no more than three layers of neurons – an input layer, a middle 'hidden' layer and an output layer – feeding data forward through the network (see fig 1). Each neuron in one layer is connected to every neuron in the next, so each input calls for a large number of calculations. For image data, where the input layer might need hundreds of thousands of neurons, one for each pixel, the number of multiplications needed for just a few layers quickly spirals upwards.
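To see how quickly the arithmetic grows, the short Python sketch below counts the multiplications in one forward pass through fully connected layers, where each pair of adjacent layers with n and m neurons costs n × m multiplications. The layer sizes are hypothetical, and the step activation in `forward` is a simplification chosen for brevity rather than what any particular network used.

```python
def fc_multiplications(layer_sizes):
    """Multiplications for one forward pass through fully connected layers.

    Every neuron in layer i is connected to every neuron in layer i+1,
    so each pair of adjacent layers costs n_i * n_(i+1) multiplications.
    """
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


def forward(x, weights):
    """Feed an input vector forward through fully connected layers.

    weights[k][j][i] is the weight from neuron i in layer k to neuron j in
    layer k+1. A step activation stands in for a real non-linearity
    (an assumption made for brevity).
    """
    for w in weights:
        x = [1.0 if sum(wji * xi for wji, xi in zip(row, x)) > 0 else 0.0
             for row in w]
    return x


# Hypothetical sizes: a 640x480 greyscale image (one input neuron per pixel),
# a 1,000-neuron hidden layer and 10 output neurons.
sizes = [640 * 480, 1000, 10]
print(f"{fc_multiplications(sizes):,} multiplications per image")
```

For this hypothetical 640 × 480 image, the connections into the hidden layer alone account for more than 300 million multiplications per image.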

As well as sheer network size, a more fundamental issue kept neural networks to a few layers: how to train them. Training works by iteratively adjusting the weights that each neuron applies to its input data in order to minimise the error between the network's output and the desired result. Back propagation, the standard technique, presents the training data at the network's input, measures the error at the output and passes that error backwards through the layers, adjusting each neuron's weights to reduce it. This is comparatively simple with a few layers, but quickly becomes harder as the layer count increases. Limitations of back propagation meant that attempts to build deeper structures gave worse results than their shallow counterparts.
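A minimal sketch of back propagation for the kind of shallow network described here, written in Python with NumPy. The XOR task, the four-neuron hidden layer, the sigmoid activation and the learning rate are all assumptions for illustration; the point is the two passes, forward to compute the output and backward to push the error towards the input.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy task (an assumption for illustration): learn XOR with one hidden layer.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

W1 = rng.normal(scale=1.0, size=(2, 4))
b1 = np.zeros(4)
W2 = rng.normal(scale=1.0, size=(4, 1))
b2 = np.zeros(1)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


lr = 1.0
losses = []
for step in range(5000):
    # Forward pass: present the training data at the input.
    h = sigmoid(X @ W1 + b1)
    out = sigmoid(h @ W2 + b2)
    losses.append(float(np.mean((out - y) ** 2)))

    # Backward pass: error at the output, propagated back layer by layer.
    d_out = 2 * (out - y) / len(X) * out * (1 - out)  # dLoss/d(output pre-activation)
    d_h = (d_out @ W2.T) * h * (1 - h)                # error pushed back to hidden layer

    # Gradient-descent weight updates (d_h was computed before W2 changes).
    W2 -= lr * h.T @ d_out
    b2 -= lr * d_out.sum(axis=0)
    W1 -= lr * X.T @ d_h
    b1 -= lr * d_h.sum(axis=0)

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```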

It all changed in 2006, when Hinton and Salakhutdinov of the University of Toronto came up with a two-stage method for training deep neural networks with multiple hidden layers. The first stage is to 'pre-train' the network: each layer is trained independently before moving on to training the network's output as a whole.
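The layer-by-layer idea can be sketched as follows. Hinton and Salakhutdinov's 2006 work pre-trained each layer as a restricted Boltzmann machine; this sketch swaps in a simpler technique, a small autoencoder per layer, that keeps the same greedy structure. Each layer learns to reconstruct its own input, and its output then becomes the training data for the next layer. The data, layer sizes and learning rate are hypothetical, and the second, whole-network stage is only noted in a comment.

```python
import numpy as np

rng = np.random.default_rng(1)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def pretrain_layer(data, n_hidden, steps=2000, lr=0.5):
    """Train one layer on its own as a small autoencoder (a stand-in for an RBM).

    Encoder: h = sigmoid(data @ W + b); decoder: recon = h @ V + c (linear).
    Returns the encoder parameters and the reconstruction error before/after.
    """
    n_in = data.shape[1]
    W = rng.normal(scale=0.1, size=(n_in, n_hidden))
    b = np.zeros(n_hidden)
    V = rng.normal(scale=0.1, size=(n_hidden, n_in))
    c = np.zeros(n_in)
    errors = []
    for _ in range(steps):
        h = sigmoid(data @ W + b)
        recon = h @ V + c
        diff = recon - data
        errors.append(float(np.mean(diff ** 2)))
        g_recon = 2 * diff / diff.size          # d(mean squared error)/d(recon)
        g_h = (g_recon @ V.T) * h * (1 - h)     # back through the sigmoid
        V -= lr * h.T @ g_recon
        c -= lr * g_recon.sum(axis=0)
        W -= lr * data.T @ g_h
        b -= lr * g_h.sum(axis=0)
    return (W, b), errors[0], errors[-1]


def greedy_pretrain(data, layer_sizes):
    """Stage one: pre-train each hidden layer in turn on the previous layer's output."""
    params, report = [], []
    for n_hidden in layer_sizes:
        (W, b), before, after = pretrain_layer(data, n_hidden)
        params.append((W, b))
        report.append((before, after))
        data = sigmoid(data @ W + b)  # this layer's features feed the next layer
    return params, report


# Hypothetical data: 200 samples of 16 features with low-dimensional structure.
latent = rng.normal(size=(200, 3))
X = sigmoid(latent @ rng.normal(size=(3, 16)))
params, report = greedy_pretrain(X, [8, 4])
for i, (before, after) in enumerate(report):
    print(f"layer {i}: reconstruction error {before:.4f} -> {after:.4f}")
# Stage two (not shown) would stack the pre-trained encoders and fine-tune
# the whole network on the target task with back propagation.
```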

Typically, the early layers are used to extract features and to perform dimension reduction, and their output is then processed by layers with far fewer neurons. The structure of deep neural networks removes some of the need to fully interconnect neurons between layers. In convolutional neural networks, neurons in intermediate layers are arranged into overlapping tiles, so neighbouring groups receive some of the same data. This avoids the problem of separate tiles missing features because the data has been split arbitrarily across them.
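The tiling can be illustrated with a direct 2D convolution in Python. Each output value depends only on one small tile of the input, the same kernel weights are reused for every tile, and a stride smaller than the kernel size makes neighbouring tiles overlap. The image and the edge-detecting kernel are hypothetical.

```python
import numpy as np


def conv2d(image, kernel, stride=1):
    """Valid 2D convolution (strictly cross-correlation, as in most deep-learning code).

    Each output neuron sees only a kernel-sized tile of the input, and the
    same kernel weights are reused for every tile. With a stride smaller than
    the kernel size, neighbouring tiles overlap and share input pixels.
    """
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            tile = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(tile * kernel)
    return out


image = np.arange(36, dtype=float).reshape(6, 6)
kernel = np.array([[1.0, 0.0, -1.0]] * 3)   # hypothetical vertical-edge detector
features = conv2d(image, kernel, stride=1)  # 3x3 tiles overlapping by two pixels

# Weights needed: one shared 3x3 kernel versus a fully connected layer
# producing the same number of outputs from the same input.
print(features.shape, kernel.size, image.size * features.size)
```

The final line compares the 9 shared weights of the kernel with the 576 weights a fully connected layer would need to produce the same 16 outputs.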

These convolutional layers are responsible for much of the dimension reduction and provide a focus on high-level structures that has been put forward as a key reason for the recent successes of deep-learning techniques. But the early layers still need many neurons to handle image or audio data.
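In many convolutional networks much of this reduction comes from pooling or strided layers placed between the convolutions. This Python sketch applies the standard output-size formula, floor((n + 2p - k) / s) + 1 for input size n, kernel size k, stride s and padding p, to a hypothetical stack of five convolution-plus-pooling stages on a roughly 1 Mpixel input, and includes a simple 2 × 2 max-pooling function.

```python
def output_size(n, kernel, stride, padding=0):
    """Spatial size after a convolution or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def max_pool(x, size=2):
    """Non-overlapping max pooling over a list-of-lists feature map."""
    rows, cols = len(x) // size, len(x[0]) // size
    return [[max(x[i * size + di][j * size + dj]
                 for di in range(size) for dj in range(size))
             for j in range(cols)]
            for i in range(rows)]


# Hypothetical stack for a 1,024 x 1,024 input (about 1 Mpixel):
# alternating 3x3 convolutions (padding 1, stride 1) and 2x2 max pooling.
n = 1024
for _ in range(5):
    n = output_size(n, kernel=3, stride=1, padding=1)  # convolution keeps the size
    n = output_size(n, kernel=2, stride=2)             # pooling halves it
print(n, n * n)
```

Each stage halves the width and height, so the 1,048,576 input positions shrink to 1,024 per feature map after five stages.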

Improvements in training do nothing to solve the scale issue. A 1Mpixel image can take hundreds of billions of calculations to process; training can involve quadrillions of multiplications and additions. Hinton and Salakhutdinov's approach became practical courtesy of another mid-2000s development: the programmable graphics processing unit (GPU).
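The jump from billions to quadrillions follows from simple multiplication. Every figure in this Python sketch is hypothetical, including the common rule of thumb that a training step (forward plus backward pass) costs about three times the forward pass alone.

```python
# All figures are hypothetical, chosen only to show how the totals scale.
ops_per_image = 100e9   # forward pass on a ~1 Mpixel image ("hundreds of billions")
training_factor = 3     # forward + backward pass, roughly 3x the forward cost (rule of thumb)
images = 10_000         # size of a hypothetical training set
epochs = 5              # passes over the training set

training_ops = ops_per_image * training_factor * images * epochs
print(f"{training_ops / 1e15:.0f} quadrillion operations")  # 1 quadrillion = 1e15
```

Larger training sets or more passes over the data scale the total linearly, which is why the available arithmetic throughput quickly becomes the limiting factor.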

However, GPUs are far from being tuned for neural-network processing; they are used primarily for their raw floating-point throughput. While applications run reasonably well on a single GPU, it has taken some reworking of the algorithms to let them run across a network of GPU-accelerated workstations or servers. Although the weight calculations themselves are fast, it takes time to communicate the state of the neural network to all of the elements that need it.
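One common way to spread training across machines is data parallelism: each machine computes gradients on its own share of the training data, and the gradients are then averaged so that every copy of the network makes the same weight update. This Python sketch is not taken from any particular system; it uses a hypothetical linear model and four simulated workers to show that the averaged shard gradients match the full-batch gradient, and why that averaging step means moving a full set of gradients across the network on every step.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical linear model y = X @ w, trained with mean squared error.
X = rng.normal(size=(1200, 8))
w_true = rng.normal(size=8)
y = X @ w_true
w = np.zeros(8)


def gradient(Xs, ys, w):
    """Gradient of mean squared error over one shard of the data."""
    return 2 * Xs.T @ (Xs @ w - ys) / len(Xs)


n_workers = 4  # e.g. four GPU-equipped servers (an assumption)
shards = list(zip(np.array_split(X, n_workers), np.array_split(y, n_workers)))

# Each worker computes a gradient on its own (equal-sized) shard in parallel...
local = [gradient(Xs, ys, w) for Xs, ys in shards]
# ...then the results must be exchanged and averaged so that every worker
# applies the same update. This exchange is the communication cost.
averaged = np.mean(local, axis=0)
print(np.allclose(averaged, gradient(X, y, w)))

# For a hypothetical network with 10 million float32 weights, each worker
# sends a full gradient of this size on every training step.
grad_mb = 10_000_000 * 4 / 1e6
print(f"{grad_mb:.0f} MB per worker per step")
```

The arithmetic on each worker shrinks as workers are added, but the size of the gradient each one must exchange does not, which is why communication rather than computation can dominate.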

The power consumption of GPUs has caused issues for implementations in cloud servers, as GPUs that sit on PCI-Express daughtercards need a separate power cable.

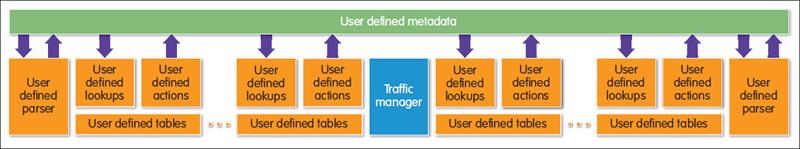

Some cloud server users, such as Baidu and Microsoft, have turned to FPGAs instead. Although their peak floating-point performance is lower than that of GPUs of comparable die size, their architecture makes it easier to feed results from one virtual neuron to another, and their energy consumption is low enough for them to be powered purely from the PCI-Express bus.

Even so, companies such as Qualcomm believe there is value in developing dedicated deep-learning architectures that are better suited to embedded devices. At the moment, the main option for such devices is to offload the heavy-duty processing, particularly the training, to cloud servers.

The designs for dedicated deep-learning processors may start with FPGA implementations. The Origami architecture developed at ETH Zurich started life on FPGAs, but is being ported to an ASIC that deploys multipliers and adders in parallel, split into banks that feed into a series of summing engines (see fig 2). However, the researchers concede that their design's performance is limited because it does not support high-speed external memory; nor does it support architectures in which multiple Origami chips work in parallel to handle larger neural networks.
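The banked arrangement can be modelled in a few lines of Python. This is a hypothetical, heavily simplified model for illustration, not the Origami datapath itself: the products for one output are split across banks of multipliers, each bank sums its own products, and a final summing stage combines the bank totals. The result matches a plain dot product, which is the property any such arrangement must preserve.

```python
def banked_dot(inputs, weights, n_banks=4):
    """Hypothetical model of a banked multiply-accumulate datapath.

    The multiplications are split across n_banks banks; within a bank the
    multipliers work in parallel and adders reduce the products to one
    partial sum. A final summing stage combines the per-bank partial sums.
    """
    assert len(inputs) == len(weights)
    n = len(inputs)
    bank_size = -(-n // n_banks)  # ceiling division
    partial_sums = []
    for b in range(n_banks):
        lo, hi = b * bank_size, min((b + 1) * bank_size, n)
        products = [inputs[i] * weights[i] for i in range(lo, hi)]  # parallel multipliers
        partial_sums.append(sum(products))                          # bank's adders
    return sum(partial_sums)                                        # summing stage


# One output neuron of a 7x7 convolution (49 multiply-accumulates); values are arbitrary.
pixels = list(range(49))
kernel = [1 if i % 2 == 0 else -1 for i in range(49)]
print(banked_dot(pixels, kernel), sum(p * k for p, k in zip(pixels, kernel)))
```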

Other approaches to embedded deep learning may reduce the need to divide the workload among multiple chips and bring overall power consumption down. Because the weights used by neurons do not need to be precise, approximate computing, in which the circuitry used to perform calculations does not provide bit-level precision, may prove a suitable substrate for deep learning. Although approximate computing does not necessarily reduce chip area much, it can deliver big savings in power consumption. However, work by NEC Labs and Purdue University on approximate computing for neural networks has shown that some parts of the algorithm call for high accuracy whereas others tolerate quite coarse resolution, so designers need to analyse closely where precision and accuracy can be traded off.
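Reduced-precision arithmetic gives a rough software analogue of this trade-off. The Python sketch below, which is not the NEC Labs or Purdue method, rounds a hypothetical layer's weights to fixed-point grids of decreasing width and measures how far the layer's output drifts from the full-precision result. Running the same measurement layer by layer is one way to find which parts of a network need high accuracy.

```python
import numpy as np

rng = np.random.default_rng(3)


def quantize(x, bits, x_max):
    """Round values to a signed fixed-point grid with the given number of bits."""
    levels = 2 ** (bits - 1) - 1
    step = x_max / levels
    return np.clip(np.round(x / step), -levels, levels) * step


weights = rng.normal(scale=0.5, size=(64, 256))  # a hypothetical layer
inputs = rng.normal(size=256)
exact = weights @ inputs
w_max = np.abs(weights).max()

errors = {}
for bits in (16, 8, 4, 2):
    approx = quantize(weights, bits, w_max) @ inputs
    errors[bits] = np.linalg.norm(approx - exact) / np.linalg.norm(exact)
    print(f"{bits:2d}-bit weights: relative output error {errors[bits]:.4f}")
```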

Deep learning itself is likely to have limitations that mean it will need to be deployed alongside other forms of artificial intelligence. One such limitation showed up in recent demonstrations of neural networks playing old Atari computer games. The work by London-based DeepMind, bought by Google in early 2014, showed the networks typically did well in games where they could learn from initially random strategies, such as knocking blocks off different parts of the wall in Breakout. Even if the reward was delayed, they could learn successfully. Games in which a number of stages had to be completed before any reward arrived, such as navigating a maze, met with far less success.
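DeepMind's Atari agent was built on Q-learning, a reinforcement-learning method in which the value learned for a rewarded action is propagated back to the actions that led to it. The Python sketch below swaps the deep network for a simple lookup table and the game for a hypothetical five-position corridor with a single reward at the far end; the learning rate, discount and exploration settings are assumptions. Starting from random moves, the agent still learns to head for the delayed reward.

```python
import random

random.seed(0)

N_STATES = 5  # corridor positions 0..4; reaching 4 ends the episode
LEFT, RIGHT = 0, 1
GAMMA, ALPHA, EPSILON = 0.9, 0.5, 0.1


def step(state, action):
    """Move along the corridor; the only reward arrives at the far end (delayed reward)."""
    nxt = max(0, state - 1) if action == LEFT else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def choose(q, state):
    """Epsilon-greedy action; ties are broken at random, so early play is a random strategy."""
    if random.random() < EPSILON or q[state][LEFT] == q[state][RIGHT]:
        return random.choice((LEFT, RIGHT))
    return LEFT if q[state][LEFT] > q[state][RIGHT] else RIGHT


q = [[0.0, 0.0] for _ in range(N_STATES)]
for episode in range(500):
    state, done, steps = 0, False, 0
    while not done and steps < 100:
        action = choose(q, state)
        nxt, reward, done = step(state, action)
        target = reward if done else reward + GAMMA * max(q[nxt])
        q[state][action] += ALPHA * (target - q[state][action])  # Q-learning update
        state, steps = nxt, steps + 1

policy = ["right" if q[s][RIGHT] > q[s][LEFT] else "left" for s in range(N_STATES - 1)]
print(policy)
```

Lengthening the corridor, or requiring several actions in the right order before any reward appears, makes it much less likely that random play ever stumbles on the reward, which mirrors the difficulty seen with the maze-like games.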

As a result, deep learning may prove to be a useful component of self-driving vehicles, where neural networks are used to read road signs or to perform an initial analysis of the road ahead, while other algorithms will probably be needed to perform deeper analyses of the results they provide. Still, unless another technology arrives that performs as well on image and sound recognition, deep learning is likely to be an important component of many future systems that interact with the real world.