The vast array of embedded systems on the IoT has one concern in common: security. The reasons may not be obvious, but many of these systems pervade our lives and put people and data at risk if they are infiltrated.

Implementing security for connected embedded systems requires hardware, connectivity, middleware, vendors and, most importantly, software to be addressed. However, correct coding is only one aspect of overall software security; functional security and safety must be built into the application from the beginning. Software must be ‘secure by construction’ and development from the first stages must be based on comprehensive requirements that guide the design, development and verification workflow.

Security from the ground up

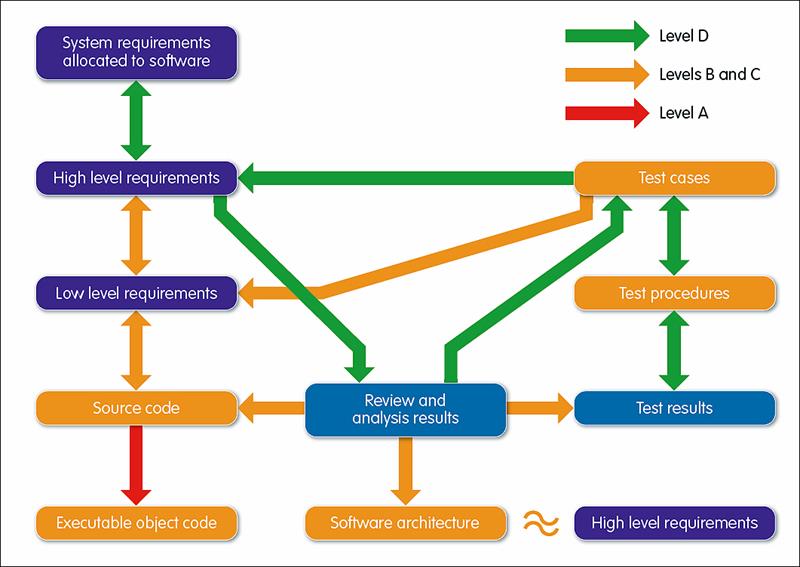

Certain measures can be taken at the lowest level by choosing a solid and reliable RTOS. However, this is only the beginning. In order to achieve ‘secure by construction,’ it is vital to start with a comprehensive set of requirements that are traceable forward to the code and from the code back to the requirements (see fig 1).
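Two-way traceability can be pictured as a table of links that is queried in both directions. The following is a minimal sketch in C, not a description of any particular tool: the requirement identifiers (`SEC-001` and so on), the test names and all function names are hypothetical and exist only for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define COUNT_OF(a) (sizeof (a) / sizeof (a)[0])

/* Hypothetical requirement identifiers; a real project would take
 * these from its requirements document or management tool. */
static const char *const requirements[] = { "SEC-001", "SEC-002", "SEC-003" };

/* Hypothetical trace links: each test names the requirement it verifies. */
struct trace_link {
    const char *req_id;
    const char *test_name;
};

static const struct trace_link links[] = {
    { "SEC-001", "test_login_rejects_bad_pin" },
    { "SEC-001", "test_login_lockout" },
    { "SEC-002", "test_frame_rejected_on_bad_checksum" },
};

/* Forward trace: how many tests verify the given requirement? */
size_t tests_for_requirement(const char *req_id)
{
    size_t count = 0;
    for (size_t i = 0; i < COUNT_OF(links); i++) {
        if (strcmp(links[i].req_id, req_id) == 0) {
            count++;
        }
    }
    return count;
}

/* Backward trace: does every test point at a requirement that exists? */
bool all_links_have_known_requirement(void)
{
    for (size_t i = 0; i < COUNT_OF(links); i++) {
        bool known = false;
        for (size_t r = 0; r < COUNT_OF(requirements); r++) {
            if (strcmp(links[i].req_id, requirements[r]) == 0) {
                known = true;
            }
        }
        if (!known) {
            return false;
        }
    }
    return true;
}

/* Returns the first requirement with no linked test, or NULL if none. */
const char *first_untested_requirement(void)
{
    for (size_t r = 0; r < COUNT_OF(requirements); r++) {
        if (tests_for_requirement(requirements[r]) == 0) {
            return requirements[r];
        }
    }
    return NULL;
}

/* Example gate for a host build: stops if a test has no valid requirement. */
void assert_no_orphan_links(void)
{
    assert(all_links_have_known_requirement());
}
```

With the sample data above, `first_untested_requirement()` reports `SEC-003`, which is the kind of gap a traceability tool would flag before release.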

At the heart of developing secure code is devising a strategy to address confidentiality, integrity and availability – or CIA.

* Confidentiality means only authenticated people and systems will have access to secure data.

* Integrity means the data has not been manipulated during storage or transfer and that it is received in valid form.

* Availability means authenticated people and systems have access to information when they need it.

Each of these has requirements of its own. For example, authentication might require a retinal scan, which will in turn have its own requirements. All these constraints must be tested and traceable between the requirements document and the running code. In addition, the project should adopt a coding standard, such as MISRA C or CERT C, or check the code against the Common Weakness Enumeration (CWE) catalogue, to guard against questionable coding methods and careless mistakes that could jeopardise security or cause functional errors.
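As an illustration of the kind of mistake a coding standard targets, CERT C rule INT32-C asks that operations on signed integers do not overflow, since signed overflow is undefined behaviour in C. The sketch below shows one way to satisfy that rule for addition; the helper name `checked_add` and the example function are hypothetical, not part of any standard or library.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical helper: adds two ints and reports overflow to the caller
 * instead of performing an addition whose result is undefined. */
bool checked_add(int a, int b, int *result)
{
    if (result == NULL) {
        return false;
    }
    /* Test against the limits BEFORE adding, so the check itself
     * can never overflow. */
    if ((b > 0 && a > INT_MAX - b) ||
        (b < 0 && a < INT_MIN - b)) {
        return false;           /* would overflow: *result is untouched */
    }
    *result = a + b;
    return true;
}

/* Boundary cases a reviewer or a test tool might exercise. */
void checked_add_examples(void)
{
    int r = 0;
    assert(checked_add(2, 3, &r) && r == 5);
    assert(checked_add(INT_MAX, 0, &r) && r == INT_MAX);
    assert(!checked_add(INT_MAX, 1, &r));
    assert(!checked_add(INT_MIN, -1, &r));
    assert(r == INT_MAX);       /* failed calls left the result unchanged */
}
```

A static analysis tool configured for the chosen standard would be expected to flag the unchecked form, `a + b` on untrusted inputs, so that it can be replaced with a guarded version of this kind.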

Finally, there must be a way to determine if and when the testing is sufficiently complete and that the strategy and coding standard have actually been carried out effectively. Due to the size and complexity of today’s software, this can no longer be done manually, so engineers must make use of a comprehensive set of tools that can analyse the code thoroughly both before and after compilation, as well as trace functional, safety and security requirements throughout the development lifecycle.

Figure 1

Secure and verify

The two major concerns when it comes to addressing a CIA strategy are data and control. Who has access to what data? Who can read from it and who can write to it? What is the flow of data and how does access affect control? Static and dynamic analysis work together to address these issues under the umbrella of data and control coupling.

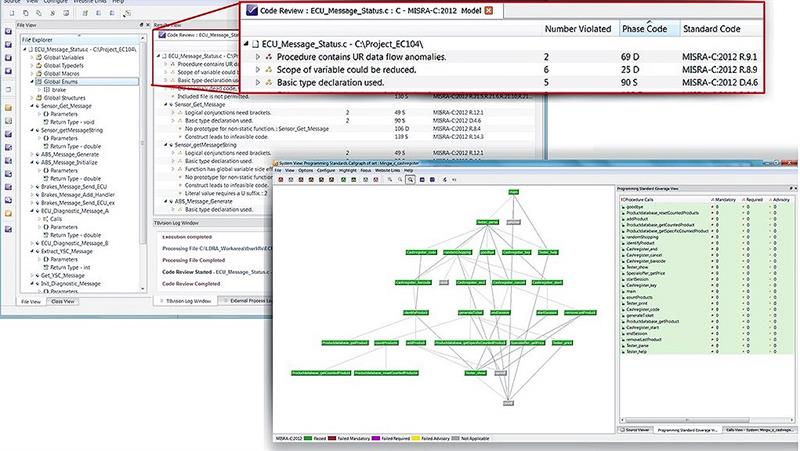

Static analysis works with uncompiled source code, checking the code against a coding specification. Static analysis can also report on overall code quality in terms of its complexity, clarity and consistency, helping to identify potentially vulnerable areas. It can also detect software constructs that can compromise security, as well as check memory protection and trace pointers that may traverse a memory location. In addition, by analysing and understanding the overall architecture and behavioural characteristics of the code, it can produce tests automatically that can be used alongside manually generated tests during dynamic analysis (see fig 2).

Dynamic analysis then tests the compiled, running code, which is linked back to the source code using the symbolic data generated by the compiler. The results of automatically and manually generated tests are then used to verify the correct operation of the code and to confirm that all implemented functions have been tested and either verified or found in need of correction. Functional security tests include attempts to gain control of a device or to feed it with data that would change its mission. Functional testing based on created tests includes robustness testing, such as checking the results of disallowed inputs and anomalous conditions. Dynamic analysis also provides code coverage and data flow/control analysis, which in turn can be checked for completeness using two-way requirements traceability. Such comprehensive analysis, especially code coverage analysis, provides in-depth insight into, and measurement of, the verification process.
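A robustness test of this kind can be sketched in a few lines of C. The unit under test here, `parse_setpoint`, and its 0 to 100 range are assumptions made up for the example; the point is that the tests deliberately feed the function inputs it must refuse.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

#define SETPOINT_MIN 0L     /* hypothetical valid range */
#define SETPOINT_MAX 100L

/* Hypothetical unit under test: parses a decimal setpoint that arrives
 * from an untrusted source. Returns false and leaves *out untouched
 * when the input is not acceptable. */
bool parse_setpoint(const char *text, long *out)
{
    char *end = NULL;
    long value;

    if (text == NULL || out == NULL || text[0] == '\0') {
        return false;
    }
    errno = 0;
    value = strtol(text, &end, 10);
    if (errno == ERANGE || end == text || *end != '\0') {
        return false;       /* out of long range, no digits, or trailing junk */
    }
    if (value < SETPOINT_MIN || value > SETPOINT_MAX) {
        return false;       /* numerically valid but outside the allowed range */
    }
    *out = value;
    return true;
}

/* Robustness tests: one nominal case, then disallowed and anomalous inputs. */
void test_parse_setpoint_robustness(void)
{
    long v = -1;
    assert(parse_setpoint("42", &v) && v == 42);
    assert(!parse_setpoint(NULL, &v));
    assert(!parse_setpoint("", &v));
    assert(!parse_setpoint("101", &v));
    assert(!parse_setpoint("-1", &v));
    assert(!parse_setpoint("12abc", &v));
    assert(!parse_setpoint("99999999999999999999999", &v));
    assert(v == 42);        /* rejected inputs never changed the output */
}
```

Running such tests under a coverage tool then shows whether every rejection path in the function was actually exercised.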

Figure 2: By analysing and understanding overall architecture characteristics, tests can be generated automatically for use during dynamic analysis

Start from the beginning

It is important to understand that ‘comprehensive testing’ cannot begin with a nearly completed program; it must start from the beginning – even before the target hardware is available. Development should start on the host OS environment or on a target simulated on the host and move to the target hardware.

Developers can generate tests (test harnesses, test vectors and code stubs) and capture the results across a range of host and target platforms.
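The three pieces named above can be shown in miniature. In this sketch, which assumes a hypothetical `overheat_alarm` unit that normally reads a hardware temperature sensor, a stub stands in for the sensor so that the unit can be tested on the host before target hardware exists. All names and the 85 degree limit are illustrative.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* The unit reaches the sensor through a function pointer, so a host
 * build can substitute a stub for the real driver. */
typedef int (*temp_reader_fn)(void);

/* Hypothetical unit under test: true when the reading exceeds the limit. */
bool overheat_alarm(temp_reader_fn read_temp_c, int limit_c)
{
    return read_temp_c() > limit_c;
}

/* Code stub: replaces the hardware driver and returns a value
 * chosen by the test harness. */
static int stub_value_c;

static int stub_read_temp_c(void)
{
    return stub_value_c;
}

/* Test harness: applies each test vector and checks the captured result. */
void run_overheat_alarm_vectors(void)
{
    static const struct {
        int input_c;
        int limit_c;
        bool expected;
    } vectors[] = {
        { 84, 85, false },  /* just below the limit */
        { 85, 85, false },  /* exactly on the limit */
        { 86, 85, true  },  /* just above the limit */
    };

    for (size_t i = 0; i < sizeof vectors / sizeof vectors[0]; i++) {
        stub_value_c = vectors[i].input_c;
        assert(overheat_alarm(stub_read_temp_c, vectors[i].limit_c)
               == vectors[i].expected);
    }
}
```

The same vectors can later be rerun on the target with the real driver in place of the stub, which is what makes early host-based testing worthwhile.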

Testing and verifying units created by different teams in a large project smooths the overall workflow as the project moves forward. Code reuse – either code originally created for other projects or acquired from third parties or as open source – can also reduce time and effort. It may be tempting to just build such units into the project, but they must be subjected to the same rigorous static and dynamic analysis and verification as all other components of an application.

Dead code is another potential vulnerability resulting from code reuse: there may be functions that are not needed or used by the application under development. Not only does such code take up valuable space and resources, but a hacker looking for a way into the system may also be able to use it as a concealed base from which to create mischief. If analysis detects code that is never executed, that code should be removed.

One further aspect is needed for security assurance: coverage analysis. On the surface, coverage analysis answers the question ‘have I touched all the bases?’ Merely checking whether every line in the code has been executed does not reveal the structure of execution and does not clearly relate back to the requirements. To do this, we must turn to branch/decision testing and its cousin, modified condition/decision coverage (MC/DC). MC/DC requires that each point of entry and exit in the program is invoked at least once, that every condition in a decision takes all possible outcomes at least once, and that every decision takes all possible outcomes at least once. It also requires showing that each condition in a decision independently affects the outcome of that decision.
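A small worked example makes the independence requirement concrete. The decision below is hypothetical, with three conditions chosen only for illustration. Four test cases are enough for MC/DC here, where exhaustive testing would need eight, because each case differs from a partner case in exactly one condition and that single change flips the outcome.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical decision with three conditions. */
bool actuator_enabled(bool authenticated, bool in_safe_range, bool override_key)
{
    return authenticated && (in_safe_range || override_key);
}

/* An MC/DC test set for the decision above. */
void test_actuator_enabled_mcdc(void)
{
    /* Case 1: baseline, decision is true. */
    assert(actuator_enabled(true,  true,  false) == true);

    /* Case 2: only 'authenticated' differs from case 1; outcome flips. */
    assert(actuator_enabled(false, true,  false) == false);

    /* Case 3: only 'in_safe_range' differs from case 1; outcome flips. */
    assert(actuator_enabled(true,  false, false) == false);

    /* Case 4: only 'override_key' differs from case 3; outcome flips. */
    assert(actuator_enabled(true,  false, true)  == true);
}
```

Across the four cases every condition takes both values and the decision takes both outcomes, which statement coverage alone would not guarantee: case 1 by itself executes every line of the function.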

Software developers must take responsibility for protecting the ‘soft underbelly’ of the embedded systems they design. But security is not something that happens all at once. It must be addressed throughout the software design life cycle so designers can be confident that their systems are safe, secure and reliable. Requirements traceability, together with static and dynamic analysis in the context of unit and integration testing, allows complex applications to be defined and developed, and lets engineers make sure they work as expected. These processes provide a cohesive approach to developing software that is both safe and secure.

Author profile

Jay Thomas is technical development manager with LDRA Technology.