The C language presents challenges for security-critical applications because its standards (ISO/IEC 9899:2011 and ISO/IEC 9899:2018) do not fully specify how implementations must behave: many behaviours are left undefined, unspecified or implementation-defined.

While this omission gives developers and third-party software packages more flexibility to manage system resources and memory access, that flexibility can lead to unpredictable behaviours.

The result can be code that is written “correctly” to the standard but that can still lead to a security breach.
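One such implementation-defined detail is whether plain char is signed. The sketch below uses hypothetical function names and assumes a message whose first byte holds its length. It shows a length check that is valid C yet can be bypassed on targets where plain char is signed: a length byte of 0xC8 reads as -56, passes the "<=" test, and then converts to an enormous size_t. Declaring the length byte as unsigned char removes the dependency on the compiler and target.

```c
#include <limits.h>   /* CHAR_MIN < 0 indicates that plain char is signed */
#include <stddef.h>

#define BUFFER_SIZE 16u

/* Hypothetical helper: returns how many payload bytes a copy routine would
 * accept for a length-prefixed message, or 0 if the length is rejected.
 * The length byte is read as plain char, whose signedness is
 * implementation-defined. */
size_t acceptedLengthPlainChar ( const char *pMessage )
{
    char length = pMessage[ 0 ];          /* 0xC8 reads as -56 if char is signed */
    size_t accepted = 0u;

    if ( length <= ( char ) BUFFER_SIZE ) /* -56 <= 16, so the check passes */
    {
        accepted = ( size_t ) length;     /* -56 converts to SIZE_MAX - 55 */
    }
    return accepted;
}

/* Hypothetical helper: the same check with an explicitly unsigned length
 * byte, which behaves identically on every conforming implementation. */
size_t acceptedLengthUnsigned ( const unsigned char *pMessage )
{
    unsigned char length = pMessage[ 0 ]; /* 0xC8 is always 200 */
    size_t accepted = 0u;

    if ( length <= BUFFER_SIZE )
    {
        accepted = ( size_t ) length;
    }
    return accepted;
}
```

Both functions compile cleanly, which is the point: only the choice of type decides whether a hostile length byte is rejected.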

As developers add features to products within already constrained budgets and schedules, software becomes the weak link that can allow malicious entities to gain access to sensitive data and take over systems. For safety-critical systems or those that have access to sensitive data, security becomes a mission-critical element. But even for many non-safety-critical devices, the importance of secure code has become more evident.

Devices that were not originally designed to be connected are likely to have that functionality added as the product evolves, which can provide unexpected backdoor access into more critical systems. And security vulnerabilities can be hard to find because they may cause malfunctions only under specific circumstances. C developers need additional support to help them recognize and eliminate such vulnerabilities.

That’s where the new MISRA C guidelines come into play.

Why MISRA C Matters

MISRA C continues to be the leading set of guidelines that drive safe, secure, and reliable code for embedded systems from automotive to avionics to medical devices.

Despite a common misconception to the contrary, MISRA C has never been safety-specific and has always been appropriate for use with security-critical code. MISRA C defines a subset of the C language in which the opportunity to make mistakes is either removed or reduced. Code that is compliant with MISRA C will therefore likely be of high quality – and high-quality code will likely be both safe and secure.

To underscore that position, recent MISRA publications include MISRA C:2012 Addendum 2, which maps MISRA C:2012 to ISO/IEC TS 17961 “C Secure,” and MISRA C:2012 Addendum 3, which maps it to the CERT C coding standard. Amendments 2 and 3 to MISRA C:2012 extend its coverage to the newer editions of the C standard (C11 and C18).

The latest release, MISRA C:2012 Amendment 4 (AMD4), published in April 2023, makes MISRA C more relevant than ever. Alongside the new amendment, MISRA C:2023 consolidates MISRA C:2012 and all of its amendments and technical corrigenda into a single document. This consolidation addresses what has been a huge configuration-management challenge for development teams.

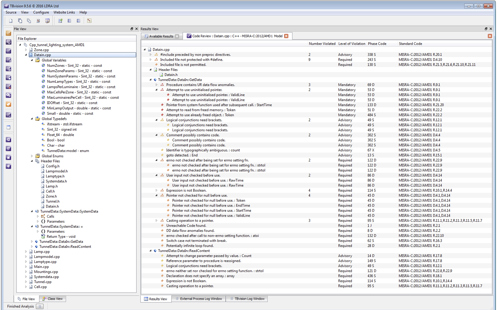

Above: LDRA’s TBvision tool provides a deeper look into MISRA compliance to help developers quickly find and fix defects. Double-clicking a violation in the tool navigates to the offending code and gives contextual information on why it violates the standard.

For C developers, using automated verification tools that incorporate the most up-to-date MISRA guidelines provides a proven approach to safer and more secure code. While some C compilers can identify risky coding constructs, it is more efficient and cost-effective to prevent issues before they are introduced into the code base. Using a static analysis tool to automate MISRA C compliance checking helps to identify and remediate issues as early as possible in the development lifecycle.

This approach helps developers to create more secure code in several ways:

Reduce the risk of common coding errors: MISRA C guidelines include rules that address common coding errors that can lead to security vulnerabilities. For example, the guidelines include rules that require the use of explicit typing, limit the use of pointer arithmetic, and specify requirements for variable initialisation.

Improve code readability and maintainability: The guidelines are designed to improve code readability and maintainability, which can help developers identify and fix security vulnerabilities more easily. By following the guidelines, developers can also create code that is easier to review and maintain over time, reducing the risk of security vulnerabilities introduced during code changes.

Promote good coding practices: The guidelines promote coding practices that help to minimize the risk of security vulnerabilities. For example, the guidelines require developers to use consistent coding styles, limit the use of global variables, and avoid the use of non-standard language features.
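The sketch below, using hypothetical function names, illustrates several of these practices in one small unit: fixed-width integer types with explicit conversions, array indexing instead of pointer arithmetic, explicit initialisation of every local, and file-scope state with internal linkage in place of a global variable. It is written in the spirit of the guidelines rather than checked against any particular rule set.

```c
#include <stddef.h>
#include <stdint.h>

/* Running total kept at file scope with internal linkage ('static'),
 * so it is not visible to other translation units as a global. */
static uint32_t sampleCount = 0u;

/* Sums 'count' 16-bit samples using array indexing rather than pointer
 * arithmetic. Every local is initialised before it is read, and every
 * integer type is a fixed-width type. For simplicity the sketch assumes
 * the total fits in 32 bits. */
uint32_t sumSamples ( const uint16_t samples[], size_t count )
{
    uint32_t total = 0u;

    for ( size_t i = 0u; i < count; ++i )
    {
        total += ( uint32_t ) samples[ i ];  /* explicit widening conversion */
    }

    sampleCount += ( uint32_t ) count;
    return total;
}

/* Read-only accessor instead of exposing sampleCount directly. */
uint32_t samplesSeen ( void )
{
    return sampleCount;
}
```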

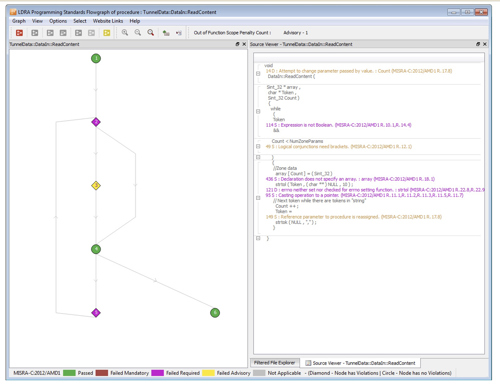

Above: LDRA’s flow graphs provide a view of program flow interspersed with violations to put them into context.

Insecure Coding Examples

While many requirements for code safety apply equally to code security, explicit guidelines that address known vulnerabilities give developers and static analysis tools a framework for improving the security posture of applications. MISRA C:2012 Amendment 1 introduced 14 guidelines for secure C coding that improve coverage of the security concerns highlighted by the ISO C Secure Guidelines. Several of them address the use of untrustworthy data, a well-known source of security vulnerabilities. The following examples from the guidelines demonstrate some subtle issues that can cause serious problems if they are not properly understood.

Example 1: Don’t open the door to bad actors

“The validity of values received from external sources shall be checked” (Directive 4.14)

This directive is concerned with data received from external sources, which has become critical as more devices are connected to one another or to the Internet. Such data must be validated if the attack surface is to be minimized.

The following sample code is potentially vulnerable because the length of a message received from an external source is not validated. Copying that data in full to the buffer can write beyond the allocated memory, which represents a vulnerability and an opportunity for an attacker to corrupt the system, or worse.

#include <stdint.h>

extern uint8_t buffer[ 16 ];

/* pMessage points to an external message that is to be copied to 'buffer'.
 * The first byte holds the length of the message.
 */
void processMessage ( uint8_t const *pMessage )
{
    uint8_t length = *pMessage; /* Length not validated */

    for ( uint8_t i = 0u; i < length; ++i )
    {
        ++pMessage;
        buffer[ i ] = *pMessage;
    }
}
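A validated version might look like the following minimal sketch. The function name processMessageChecked and the extra 'received' parameter (the number of bytes that actually arrived, including the length byte) are assumptions for illustration; the original example does not say how the message size is known. The copy only happens if the declared length fits both the data received and the destination buffer.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define BUFFER_SIZE 16u

uint8_t buffer[ BUFFER_SIZE ];

/* Hypothetical validated variant of processMessage(). Returns true if the
 * message was copied; returns false, writing nothing, if the pointer is
 * null, nothing was received, or the declared length exceeds either the
 * bytes actually received or the size of 'buffer'. */
bool processMessageChecked ( uint8_t const *pMessage, size_t received )
{
    bool accepted = false;

    if ( ( pMessage != NULL ) && ( received > 0u ) )
    {
        uint8_t const length = pMessage[ 0 ];

        if ( ( length <= BUFFER_SIZE ) && ( ( size_t ) length <= ( received - 1u ) ) )
        {
            for ( uint8_t i = 0u; i < length; ++i )
            {
                buffer[ i ] = pMessage[ i + 1u ];  /* payload starts after the length byte */
            }
            accepted = true;
        }
    }
    return accepted;
}
```

Checking against the received size as well as the buffer size also stops a short message from causing a read past the end of the incoming data.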

The directive implies a need for “defensive” code to constantly check not just that the data received is within the expected bounds (as per the example) but also to make sure that the data makes sense. For example, a history of commands received over time can be used to check for abnormal patterns of use, which may (for example) be symptomatic of a denial-of-service attack.
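As one possible form of such a plausibility check, the sketch below counts commands in fixed time windows and flags a window in which the count exceeds a threshold. The structure, function name, window length and threshold are all hypothetical; a real system would choose its own metrics and tune them to its expected traffic.

```c
#include <stdbool.h>
#include <stdint.h>

#define WINDOW_TICKS            100u  /* hypothetical window length in ticks */
#define MAX_COMMANDS_PER_WINDOW  10u  /* hypothetical threshold */

typedef struct
{
    uint32_t windowStart;  /* tick at which the current window began */
    uint32_t count;        /* commands seen in the current window */
} CommandMonitor;

/* Records one command received at tick 'now' and returns true when the
 * number of commands in the current fixed window exceeds the threshold. */
bool commandRateAbnormal ( CommandMonitor *pMonitor, uint32_t now )
{
    /* Unsigned subtraction keeps the elapsed time correct across tick wrap-around. */
    if ( ( now - pMonitor->windowStart ) >= WINDOW_TICKS )
    {
        pMonitor->windowStart = now;
        pMonitor->count = 0u;
    }

    if ( pMonitor->count < UINT32_MAX )
    {
        ++pMonitor->count;  /* saturate rather than wrap under a flood */
    }

    return pMonitor->count > MAX_COMMANDS_PER_WINDOW;
}
```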

Example 2: Use the right function for the right task

“The Standard Library function memcmp shall not be used to compare null terminated strings” (Rule 21.14)

The memcmp() function is designed for comparing blocks of memory, not strings such as passwords. Because it returns more than just true or false, it can leak information that helps to reveal passwords in database systems. It returns zero when the two buffers are the same, but if they differ it returns a positive or negative value. If that value is computed from the differing bytes and an attacker controls the buffer on one side, they can keep calling the function until they work out the contents of the other side, which might be a password. In fact, the man page for memcmp() specifically warns against using it to compare security-critical data.
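Where secrets such as password hashes must be compared, a common alternative is a comparison whose running time depends only on the length and which reports only equal or not equal. The sketch below (hypothetical function name) accumulates differences without an early exit. It is illustrative only: an optimizing compiler can still alter timing behaviour, so production code should prefer a vetted primitive from a cryptographic library or platform.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: compares two buffers of 'len' bytes, visiting every
 * byte regardless of where the first difference occurs, and returns only
 * whether they are equal (no ordering information, unlike memcmp()). */
bool constantTimeEqual ( const uint8_t *a, const uint8_t *b, size_t len )
{
    uint8_t diff = 0u;

    for ( size_t i = 0u; i < len; ++i )
    {
        diff |= ( uint8_t ) ( a[ i ] ^ b[ i ] );  /* accumulate, never break early */
    }
    return diff == 0u;
}
```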

The following sample code uses memcmp() to compare strings when strcmp() should have been used.

#include <string.h>

extern char buffer1[ 12 ];
extern char buffer2[ 12 ];

void f1 ( void )
{
    strcpy ( buffer1, "abc" );
    strcpy ( buffer2, "abc" );

    if ( memcmp ( buffer1, buffer2, sizeof ( buffer1 ) ) != 0 )
    {
        /* The strings stored in buffer1 and buffer2 are reported to be
         * different, but this may actually be due to differences in the
         * uninitialised characters stored after the null terminators.
         */
    }
}
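A corrected version compares the strings with strcmp(), which stops at the first null terminator. In the minimal sketch below, the function name stringsEqualCompliant is hypothetical, and the buffers are deliberately pre-filled with different bytes to mimic the unknown contents after the terminators in the original example.

```c
#include <stdbool.h>
#include <string.h>

char buffer1[ 12 ];
char buffer2[ 12 ];

/* Hypothetical compliant variant of f1(): returns true when the strings
 * held in buffer1 and buffer2 are equal. */
bool stringsEqualCompliant ( void )
{
    /* Fill each buffer with different bytes first, so that the bytes after
     * the null terminators differ, as they might in the original example. */
    ( void ) memset ( buffer1, 'x', sizeof ( buffer1 ) );
    ( void ) memset ( buffer2, 'y', sizeof ( buffer2 ) );

    ( void ) strcpy ( buffer1, "abc" );
    ( void ) strcpy ( buffer2, "abc" );

    /* strcmp() stops at the first null terminator, so the differing
     * bytes after it do not affect the result. */
    return strcmp ( buffer1, buffer2 ) == 0;
}
```

After this function runs, memcmp() over the full buffers would still report a difference, while strcmp() correctly reports the strings as equal.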

Example 3: If someone can control how you talk, they can make you say anything they want

“The pointer returned by the Standard Library functions asctime, ctime, gmtime, localtime, localeconv, getenv, setlocale or strerror shall not be used following a subsequent call to the same function” (Rule 21.20)

Locale settings control the formatting of strings, effectively defining how the software “talks.” If hackers can take control of how strings are formatted, they can make them say whatever they want, including controlling any programs that the first program interacts with. That means these strings can be used to execute arbitrary commands and take over a system.

The following sample code may not work as expected because the second call to setlocale() may cause the string referenced by “res1” to become the same as the one referenced by “res2.” Beyond correctness, control of the locale has been used in exploits where strings have been reformatted to facilitate the execution of commands on a remote computer.

#include <locale.h>
#include <stdio.h>

void f1 ( void )
{
    const char *res1;
    const char *res2;

    res1 = setlocale ( LC_ALL, NULL );
    res2 = setlocale ( LC_MONETARY, "French" );

    printf ( "%s\n", res1 ); /* res1 was set to the current locale, but may now reference the same string as res2 */
}
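One way to avoid the problem is to copy the string returned by the first call into storage the caller owns before calling setlocale() again. In the sketch below, the function name printLocaleCompliant and its buffer parameters are hypothetical; "French" is kept from the original example, although that locale name may not exist on every platform, in which case setlocale() simply returns a null pointer.

```c
#include <locale.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical compliant variant of f1(). The locale name returned by the
 * first setlocale() call is copied into caller-owned storage before
 * setlocale() is called again, so a later call cannot alter it.
 * Returns 0 on success, or -1 if the name is unavailable or too long. */
int printLocaleCompliant ( char saved[], size_t savedSize )
{
    int status = -1;
    const char *res1 = setlocale ( LC_ALL, NULL );

    if ( ( res1 != NULL ) && ( strlen ( res1 ) < savedSize ) )
    {
        ( void ) strcpy ( saved, res1 );  /* length checked above */

        /* May fail if the "French" locale is not installed; result ignored. */
        ( void ) setlocale ( LC_MONETARY, "French" );

        ( void ) printf ( "%s\n", saved ); /* prints the saved copy, not res1 */
        status = 0;
    }

    return status;
}
```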

Simplify Compliance

The complexity and size of contemporary software make manual checking for compliance with MISRA C guidelines impractical. The primary means of checking code against standards and guidelines is automated static analysis.

Finding and correcting violations of these guidelines requires static analysis tools that incorporate the guidelines and detect potential security flaws early in the development process, so that developers can eliminate them before the code is compiled.

MISRA C is an integral component of many embedded software certification processes, so the adoption of static analysis tools is a valuable investment toward meeting compliance goals and ensuring the security and reliability of the code.

Author details: Mark Pitchford is a Technical Specialist with LDRA Software Technology