One way to reduce the energy cost would be to move to optical interconnect. In his keynote at OFC in March 2016, Professor Yasuhiko Arakawa of the University of Tokyo said high performance computing (HPC) will need optical chip-to-chip communication to provide the data bandwidth for future supercomputers. But digital processing itself presents a problem as designers try to deal with issues such as dark silicon – the need to disable large portions of a multibillion-transistor processor at any one time to prevent it from overheating. Photonics may have an answer there as well.

Optalysys founder Nick New says: “With the limits of Moore’s Law being approached, there needs to be a change in how things are done. Some technologies are out there, like quantum computing, but these are still a long way off.”

New’s company sees photonics as providing a way of making big power savings for the kinds of data manipulation needed in common HPC applications. The aim is not to build optical supercomputers, but rather to give conventional machines a big speed boost through coprocessors handling specific roles.

Although a number of research groups are working on ways to apply photonics to computing, Optalysys looks to be the closest to commercial reality. The company, which spun out of a research group based at the University of Cambridge, demonstrated a prototype in late 2014 and aims to start selling production models by the end of 2017.

The origin of the Optalysys system lies in work dating back almost to World War II, when researchers started to exploit the way that diffraction alters the paths of photons passing through a lens according to their wavelength or frequency, effectively performing an instantaneous Fourier transform.

A flurry of research in the mid 1960s found ways to combine the Fourier transforms of lenses with holographic interference to perform more advanced processing.

“The same principles are in use today,” New says, “though the technology itself has changed a lot. The enabling factor is the development of LCD technology.”

One useful attribute of the optical processing investigated in the 1960s was that it could perform instant correlations of two images. But the correlations were highly sensitive to rotation and changes in light, making them less useful in practice compared to digital image processing algorithms that can compensate.

Micro LCDs make it possible to assemble arbitrary images that represent a much wider variety of data than the photographs used in the early research. This lets the machine perform tasks such as searching for DNA sequence matches within a database of genomes.

“Genomics provides very much a direct fit with the correlation functions,” New claims.
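The link between Fourier transforms and correlation can be illustrated digitally. The sketch below is not Optalysys's method; it is a minimal software analogy, assuming a one-hot encoding of the four DNA bases and a naive discrete Fourier transform standing in for the lens. The function names `dft`, `circular_correlation` and `find_best_match` are hypothetical, chosen for this example only.

```python
import cmath


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform using only the standard library."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = 0j
        for t, v in enumerate(values):
            total += v * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(total / n if inverse else total)
    return out


def circular_correlation(signal, template):
    """Correlate in the Fourier domain: IDFT(DFT(signal) * conj(DFT(template))).

    The result at index m is the overlap of the template placed at offset m,
    wrapping around the end of the signal (circular correlation).
    """
    padded = list(template) + [0.0] * (len(signal) - len(template))
    spec_s = dft(signal)
    spec_t = dft(padded)
    product = [a * b.conjugate() for a, b in zip(spec_s, spec_t)]
    return [c.real for c in dft(product, inverse=True)]


def find_best_match(genome, query):
    """Return (offset, matching_bases) for the best alignment of query in genome."""
    scores = [0.0] * len(genome)
    # Assumption: one binary channel per base, so the summed correlation
    # counts how many bases agree at each offset.
    for base in "ACGT":
        g = [1.0 if ch == base else 0.0 for ch in genome]
        q = [1.0 if ch == base else 0.0 for ch in query]
        for i, c in enumerate(circular_correlation(g, q)):
            scores[i] += c
    best = max(range(len(genome)), key=lambda i: scores[i])
    return best, round(scores[best])


if __name__ == "__main__":
    print(find_best_match("GATTACAGATCCGA", "CAGAT"))
```

For the toy sequence shown, the query aligns at offset 5 with all five bases matching. A digital machine has to compute each transform step by step; the appeal of the optical approach described above is that the lens performs the transform in a single pass of light.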

Alternatively, the images and optics can be tuned to use Fourier transforms to calculate many derivatives in parallel. This adaptation helps handle the partial differential equations used in Navier-Stokes models for fluid dynamics and weather forecasting. A similar calculation on a digital computer demands orders of magnitude more compute cycles and energy. The more pixels in the microdisplay, the better the performance and energy differential.
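The reason a Fourier transform helps with derivatives is that differentiation becomes a simple multiplication in the frequency domain: each frequency component is scaled by i times its angular frequency. The following is a minimal digital sketch of that idea for a periodic signal, not a description of the Optalysys hardware; `spectral_derivative` and its parameters are hypothetical names for this illustration.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform using only the standard library."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = 0j
        for t, v in enumerate(values):
            total += v * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(total / n if inverse else total)
    return out


def spectral_derivative(samples, period):
    """Differentiate evenly spaced samples of a periodic function.

    Transform, multiply each component by i * (angular frequency),
    then transform back.
    """
    n = len(samples)
    spectrum = dft(samples)
    scaled = []
    for k, coeff in enumerate(spectrum):
        # Map the bin index to a signed frequency (upper half is negative).
        freq = k if k < n / 2 else k - n
        if n % 2 == 0 and k == n // 2:
            freq = 0  # the Nyquist bin carries no usable sign for a first derivative
        scaled.append(coeff * 1j * 2 * math.pi * freq / period)
    return [c.real for c in dft(scaled, inverse=True)]


if __name__ == "__main__":
    n = 16
    xs = [2 * math.pi * i / n for i in range(n)]
    slope = spectral_derivative([math.sin(x) for x in xs], 2 * math.pi)
    # Each value should be close to cos(x) at the same point.
    print(max(abs(s - math.cos(x)) for s, x in zip(slope, xs)))
```

Every sample's derivative comes out of the same pair of transforms, which is why a system that performs the transform optically can, in principle, evaluate many derivatives at once.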

“There has been a massive revolution in the past four or five years to produce 4K resolution TV microdisplays,” says New. “These allow us to enter very large amounts of numerical data. We also use the liquid crystals themselves as lenses, which provides a dynamically reconfigurable way of doing things.”

As well as the issue of converting data into a form usable by photonic processing, the problem that has faced the optical analogue computer is one of maintaining the precise alignment needed to ensure consistent processing: temperature changes, mechanical vibration and contamination can be problems.

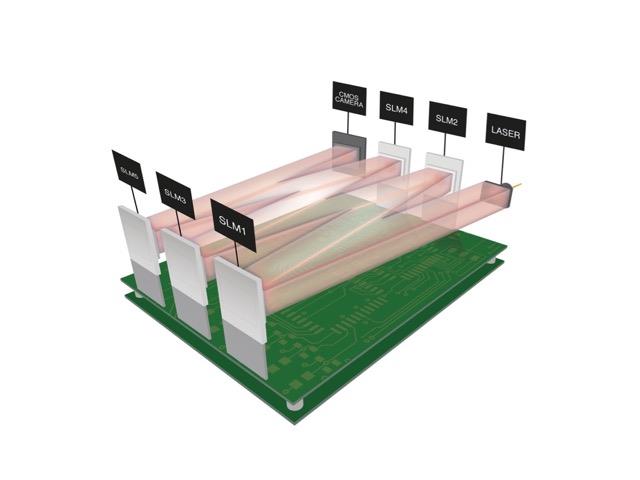

“Dust is of a similar size to the pixels on the microdisplay,” New says. “The other limiting factor was that optical processing had very precise alignment criteria. In the past, these systems needed to be mounted on an optical table. When mounted onto a board, our components are connected by solid glass: once everything is fixed, it simply can’t move.

“We have worked on putting together systems in a compact and scalable way. So we can tile these displays together to create even larger grids, we have made the design modular. That will let us put together coprocessor boards that can go into a rack. For fluid dynamics and derivatives applications, we can start pairing functions and build towards a solver-type system.

“Or they can slot into a PC motherboard in a similar way to past coprocessors,” New adds. “With applications such as DNA matching, it opens up the possibility to put one in each doctor's surgery or even in the field to help deal with disease outbreaks.”

Coprocessing options

More options for coprocessing are appearing, according to Professor Bahram Jalali of UCLA, thanks to the development of nano-waveguides etched into silicon. Through effects such as Raman scattering, where some photons shift in wavelength as they pass through a transparent medium, it is possible to model non-linear systems that are computationally intensive in the digital domain. The use of waveguides for creating optical coprocessors follows work with fibre optics as a way of preparing data for conventional computers.

Jalali argues optical processing based on techniques developed for telecommunications can help winnow the data that otherwise threatens to swamp conventional HPC. His group has been working on ways to use optics to compress data more intelligently, focusing the detail on important features in images. He compares the approach to the fovea at the centre of the retina; while it covers 1% of the area of the retina, it contains half of the light-sensitive cells.

A big problem for data acquisition and processing systems today is Nyquist sampling. “At one frequency, you are optimum. Below that, you are taking too many samples; above it, you miss them,” Jalali says.
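The trade-off Jalali describes can be made concrete with a little arithmetic. With uniform sampling, a tone is captured faithfully only if the sample rate exceeds twice its frequency; otherwise it folds back and appears at a lower, false frequency. The helper names below are hypothetical and the sketch assumes a single pure tone.

```python
def is_adequately_sampled(signal_hz, sample_rate_hz):
    """True when the sample rate exceeds twice the signal frequency."""
    return sample_rate_hz > 2 * signal_hz


def apparent_frequency(signal_hz, sample_rate_hz):
    """Frequency a pure tone appears to have after uniform sampling."""
    folded = signal_hz % sample_rate_hz
    # Anything above half the sample rate folds back towards zero.
    return min(folded, sample_rate_hz - folded)


if __name__ == "__main__":
    for tone in (3, 7, 12):
        print(tone, is_adequately_sampled(tone, 10), apparent_frequency(tone, 10))
```

At a sample rate of 10Hz, a 3Hz tone is captured as 3Hz, but a 7Hz tone is indistinguishable from a 3Hz one and a 12Hz tone shows up at 2Hz. Raising the sample rate to catch the fastest feature means oversampling everything slower, which is the waste that feature-dependent sampling tries to avoid.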

The aim of UCLA’s ‘anamorphic’ compression is to focus the highest resolution on fast-changing elements within the image, such as edges, and use more relaxed sampling on background and other less interesting data. “We want to do it blindly, without advanced knowledge of the signal,” he says.

The group released a digital implementation in 2013 and, since then, has turned to building a version that works in the optical domain to reduce the bandwidth of electronic instruments. To do that, the researchers coupled two techniques. One is a ‘time stretch’ camera, unveiled in 2009, that uses pumped optical-fibre amplifiers to perform dispersive Fourier transforms. This reduces the sampling rate needed to capture high-bandwidth images by spreading the wavelength components in time. The other is a Bragg grating in the fibre, etched with a chirp profile, that performs frequency-based optical warping. This results in more resolution being given to fast-changing signals, effectively compressing the parts of the image with few features in them.
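The general idea of spending samples where the signal changes quickly can be shown with a simple digital analogy. The sketch below is not the UCLA group's anamorphic transform and does not model the optics; it assumes a one-dimensional signal and uses the local step size as a crude measure of activity. The function name and the `floor` parameter are hypothetical.

```python
def adaptive_sample_positions(signal, num_samples, floor=0.05):
    """Choose sample indices so that density follows the local rate of change.

    Each gap between neighbouring points gets an 'activity' equal to the size
    of the step plus a small floor, so flat regions still receive a few
    samples. Samples are then spaced evenly in cumulative activity rather
    than evenly in position. Requires num_samples >= 2.
    """
    activity = [abs(b - a) + floor for a, b in zip(signal, signal[1:])]
    cumulative = [0.0]
    for a in activity:
        cumulative.append(cumulative[-1] + a)
    total = cumulative[-1]

    positions = []
    idx = 0
    for s in range(num_samples):
        target = total * s / (num_samples - 1)
        # Advance to the last index whose cumulative activity is within target.
        while idx < len(signal) - 1 and cumulative[idx + 1] <= target:
            idx += 1
        positions.append(idx)
    # Duplicates can occur when several targets land in one gap.
    return sorted(set(positions))


if __name__ == "__main__":
    # Flat background, a sharp edge over five points, then flat again.
    edge_signal = [0.0] * 50 + [0.2, 0.4, 0.6, 0.8, 1.0] + [1.0] * 45
    print(adaptive_sample_positions(edge_signal, 20))
```

With the test signal shown, the chosen positions cluster around the edge and thin out across the flat background, so the same sample budget preserves more detail where it matters. Note that this version inspects the signal before sampling it; the optical approach described above aims for a similar effect without advance knowledge of the signal.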

One application that combines the Jalali group’s optical techniques is screening blood for abnormal cells. The problem with screening today is that a sample may contain just one cancerous cell among many healthy cells. Instruments need to cope not only with high-throughput image capture, but also with storing and processing the captured data. A system based on conventional data capture could need a throughput of 1Tbit/s. Front-end data compression can reduce that significantly.

A further application of telecom technology to optical computing comes from wavelength division multiplexing (WDM). Not only has Professor Paul Prucnal’s group at Princeton University employed the components needed for WDM to act as front ends for GHz-frequency cognitive radio transceivers, but it has also adapted WDM for use in neural networks.

A major problem for any kind of artificial neural network is its demand for high degrees of interconnectedness. Electronic computers try to limit connections and put as much processing power as possible into mostly independent units. Using the add-drop multiplexing techniques developed for WDM, photonic interconnects can help reduce the energy cost of transmitting signals to many neurons at once by placing them at intervals along a single loop of fibre that broadcasts updates across many wavelengths.

Optical techniques may even provide a stepping stone to quantum computers. In February, researchers from the University of Virginia and the Royal Melbourne Institute of Technology published work on an approach to quantum computing that swaps the qubits used in most experiments on entangled states for ‘qumodes’ – quantum optical fields defined by the resonant modes of a laser cavity.

According to Virginia researcher Olivier Pfister, qumodes could have major advantages in quantum computers because it is possible to make machines that exhibit a very large number of qumodes. The technique uses femtosecond lasers under carrier-envelope, phase-locked control to create combs that could exhibit hundreds of thousands of modes across multiple octaves of wavelength. So far, the team has created 60 qumodes that can be entangled in terms of frequency and polarisation.

Although digital computing quickly surpassed the capability of optical processing in the 1960s, the energy wall that faces HPC today could lead to more tasks being offloaded into the photonic domain. The key challenge is to make the optical techniques programmable enough to keep up with digital computing’s ability to mould itself to different requirements. If that is successful – and there is still work to be done on integration and guaranteeing physical stability – it could drive a new generation of coprocessor cards that swap digital floating-point arithmetic for photonic manipulation.

Data crystals for the future

Optical technology could provide big improvements in speed for non-volatile memory and, in the case of one technique, lifetimes that go way beyond even mask ROMs.

Photonics provides an alternative way to make phase change materials work as solid state memories. Although many research teams have tried to use chalcogenides as purely electronic memories over a period of close to 50 years, commercialisation remains a problem. A team from Karlsruhe Institute of Technology, working with researchers from the universities of Exeter, Münster and Oxford, has gone back to the properties exploited in the most successful IT application of phase change materials – the rewriteable CD – to create the basis for an all-optical memory. Two different intensities of optical pulse switch the material between amorphous and crystalline states. Wavelength division multiplexing allows weak and strong optical pulses to be carried down the same channel, enabling access circuitry to perform reads and writes simultaneously.

At the University of Southampton, Professor Peter Kazansky and colleagues have developed a memory technology that conceptually resembles the data crystals that science fiction film makers often like to portray, where the memories of long dead civilisations are packed neatly into a handheld disc. Using fused quartz as the substrate, this memory could easily last that long.

The ‘five dimensional’ memory uses a femtosecond laser to etch a nanostructure into the surface of a crystal. As well as using the 3D shape of the etched pits to carry data, much like those in the surface of a CD, the nanograting produces birefringence effects that can be exploited as two additional data storage dimensions. Polarisation and light intensity control the shape and the effect of the nanograting.

“We discovered the structures by accident 10 years ago and it is still something of a mystery as to how the structures are formed,” Kazansky says.