These aspirations are the goals of many researchers in both academia and industry. The ultimate dream of an engineer pressing a 'magic button' that automatically designs, lays out, and optimises a product to meet performance and manufacturability specifications is still science fiction, but great progress is now being made with the use of various design of experiments (DOE) methods, specifically artificial neural networks (ANNs).

Basics of ANNs

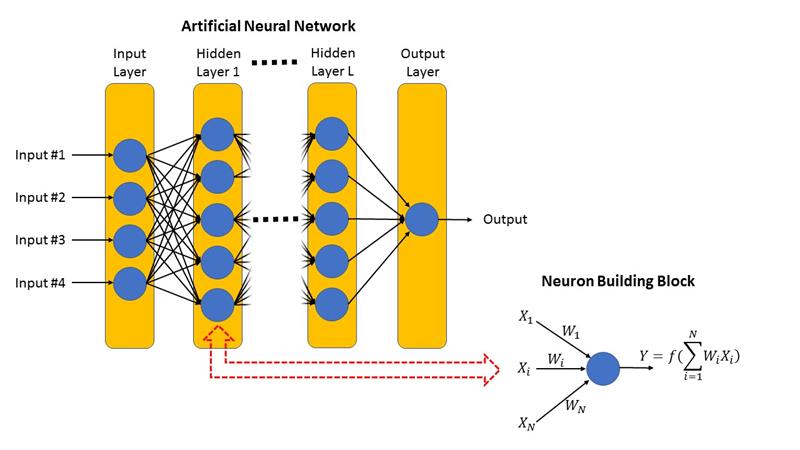

An ANN is a network of nodes that contain a basic building block known as a neuron (also called a perceptron) as shown in Figure 1.

| Figure 1: Artificial Neural Network |

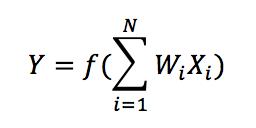

This basic building block takes a series of inputs, Xi, where X represents an input signal (which can be a constant if a bias or intercept point is needed) and i is an index over the inputs, ranging from 1 to N. Each input is scaled or weighted by Wi, and together they form an output Y with the following relation:

$$Y = f\left(\sum_{i=1}^{N} W_i X_i\right)$$

From an electrical engineer’s standpoint, the output Y is like a weighted sum of input values, as in a multi-input summing OpAmp circuit, except that it is transformed by a function f(x). This function f(x) is called an “activation function” and is used to generate non-linearity as well as bound the output; this is important to prevent the output from saturating when there are lots of layers in the network. Common activation functions include the hyperbolic tangent and sigmoid functions.
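The weighted sum followed by an activation function can be sketched in a few lines of Python. This is only an illustration: the function names `neuron` and `sigmoid` and the example input and weight values are assumptions made here, not something taken from the article.

```python
import math

def neuron(inputs, weights, activation=math.tanh):
    """Return f(sum of W_i * X_i) for a single neuron."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return activation(weighted_sum)

def sigmoid(z):
    """Logistic sigmoid, which bounds the output to the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative values only; the first input is a constant 1.0 acting as the bias.
x = [1.0, 0.5, -0.25]
w = [0.1, 0.8, 0.4]

y_tanh = neuron(x, w)                         # output bounded to (-1, 1)
y_sigmoid = neuron(x, w, activation=sigmoid)  # output bounded to (0, 1)
```

Swapping the `activation` argument changes the bound on the output without touching the summing stage, which mirrors the summing-amplifier analogy.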

As shown in Figure 1, an ANN consists of an input layer, L hidden layers, and an output layer that are connected using the basic neuron building block. The numbers of inputs and outputs of the system determine the number of neurons in the input and output layers, respectively. The number of hidden layers and the number of neurons used in each hidden layer are design parameters determined by system requirements such as accuracy, speed, and complexity. The term 'deep learning' refers to an ANN with many hidden layers. It is not exactly clear, however, how many hidden layers constitute deep learning, except that the number is greater than one.
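To make the layered structure concrete, the sketch below chains neurons into an input layer, two hidden layers, and an output layer, then pushes one input vector through. The layer sizes, the random initial weights, the use of tanh on every layer, and the names `make_network` and `forward` are all assumptions chosen for illustration.

```python
import math
import random

def make_network(layer_sizes, seed=0):
    """Build random weights for layer_sizes = [n_inputs, hidden..., n_outputs]."""
    rng = random.Random(seed)
    network = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # One row of weights per neuron; the extra column is the bias weight.
        layer = [[rng.uniform(-1, 1) for _ in range(n_in + 1)]
                 for _ in range(n_out)]
        network.append(layer)
    return network

def forward(network, inputs):
    """Propagate an input vector through every layer in turn."""
    signal = list(inputs)
    for layer in network:
        extended = signal + [1.0]  # constant input that feeds the bias weight
        signal = [math.tanh(sum(w * x for w, x in zip(row, extended)))
                  for row in layer]
    return signal

# Four inputs, two hidden layers of eight neurons each, one output.
net = make_network([4, 8, 8, 1])
output = forward(net, [0.2, -0.1, 0.7, 0.0])
```

Changing the list passed to `make_network` is all it takes to vary the number of hidden layers or the neurons per layer, which are the design parameters described above.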

Looking at these ANNs, there are a lot of close ties to adaptive equalisers. In fact, the learning aspects of an ANN for supervised training are very similar to the training of tap coefficients in an adaptive equaliser, where weights Wi are trained with a known data sequence and adapted by minimising an error or cost function. The backpropagation algorithm in an ANN is a gradient descent method used to calculate weights that minimise the cost function, much as stochastic gradient descent algorithms are used to optimise tap coefficients in adaptive equalisers. Overall, engineers familiar with adaptive equalisers will recognise many similarities between the two.
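The equaliser analogy can be illustrated with a least-mean-squares (LMS) style update, the kind of stochastic gradient descent commonly used for tap adaptation. The sketch trains a single linear neuron on a known training sequence. It is not full backpropagation, and the step size, epoch count, target weights, and the name `lms_train` are assumptions picked for the example.

```python
import random

def lms_train(samples, n_weights, step=0.05, epochs=50):
    """Adapt weights sample by sample to minimise the squared error."""
    weights = [0.0] * n_weights
    for _ in range(epochs):
        for x, desired in samples:
            y = sum(w * xi for w, xi in zip(weights, x))
            error = desired - y
            # The gradient of 0.5 * error**2 with respect to w_i is -error * x_i,
            # so stepping against the gradient adds step * error * x_i.
            weights = [w + step * error * xi for w, xi in zip(weights, x)]
    return weights

# Known training sequence generated from hypothetical "true" weights.
rng = random.Random(1)
true_weights = [0.5, -0.3, 0.2]
samples = []
for _ in range(200):
    x = [rng.uniform(-1, 1) for _ in true_weights]
    desired = sum(w * xi for w, xi in zip(true_weights, x))
    samples.append((x, desired))

learned = lms_train(samples, n_weights=len(true_weights))
```

Backpropagation extends the same idea to multiple layers by passing the error gradient backwards through each activation function.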

How Can ANNs Help Me?

Signal integrity (SI) engineers, Cadence notes, are responsible for the signal and power integrity of their high-speed systems. Typically, these systems involve multiple high-speed integrated circuits, some with complex multi-pin packages, on multi-layer PCBs with DIMM connectors and backplanes, and they need SI simulation tools to extract and verify that the system is meeting its performance and reliability requirements.

Cadence says it often finds itself modifying multiple parameters of a complex PCB layout (trace length, width, impedance, component placements, etc.), simulating, checking the results, and repeating the process until the design meets its signal quality or eye diagram requirements. This process is inefficient and certainly not optimal, especially if the number of parameters being changed is large and the time to run each simulation is not negligible. For example, changing only four parameters of a PCB layout with 35 possible values for each parameter would require over 1.5 million simulations to cover the entire design space, which is not realistic.
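The arithmetic behind that figure is easy to check. In the sketch below, the one-minute runtime per simulation is purely an assumed number used to give a feel for scale; the article does not state how long a run takes.

```python
values_per_parameter = 35
parameters = 4

# Exhaustive sweep: every combination of every parameter value.
total_runs = values_per_parameter ** parameters  # 1,500,625 combinations

# Hypothetical runtime per simulation (an assumption, not from the article).
minutes_per_run = 1.0
total_days = total_runs * minutes_per_run / (60 * 24)
```

Even at the assumed one minute per run, a full sweep would take more than 1,000 days of simulation time.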

Instead, what if it could pair an ANN program with its SI simulation tool to predict the output and optimise the eye diagram with far fewer simulations? Essentially, the idea is to use ANNs to improve efficiency.

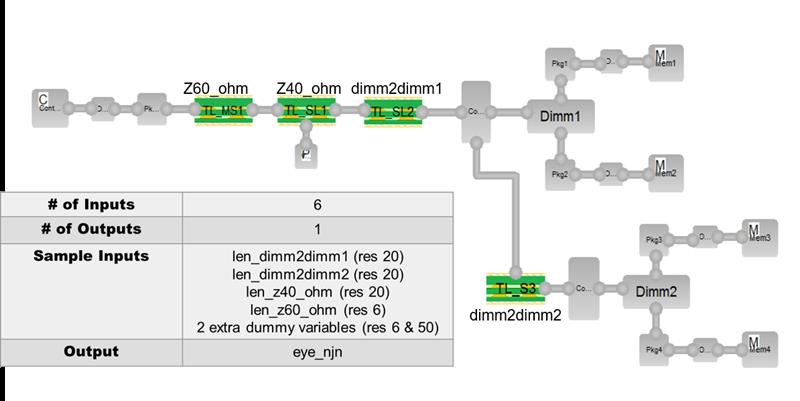

| Figure 2: DDR4 multi-drop topology |

The team, consisting of M. Kashyap, K. Keshavan, and A. Varma, developed a deep learning algorithm to do this and published their results at the Electrical Performance of Electronic Packaging and Systems (EPEPS) 2017 conference. Their example used the Cadence Sigrity SystemSI tool to generate the dataset by randomly sampling six PCB input variables for a DDR4 multi-drop topology, as shown in Figure 2. The six input variables can take 20, 20, 20, 6, 6, and 50 distinct discrete values, respectively, giving a total design space of 14.4 million different combinations.
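The size of that design space, and the idea of drawing random samples from it, can be sketched as follows. The sampling routine is a generic uniform draw of distinct index tuples written for illustration; the article does not describe how the authors sampled, and the name `sample_designs` and the seed are assumptions.

```python
import math
import random

# Number of discrete values each of the six PCB input variables can take.
levels = [20, 20, 20, 6, 6, 50]
design_space = math.prod(levels)  # 14,400,000 combinations

def sample_designs(levels, n_samples, seed=0):
    """Draw n_samples distinct design points as tuples of value indices."""
    rng = random.Random(seed)
    seen = set()
    while len(seen) < n_samples:
        seen.add(tuple(rng.randrange(n) for n in levels))
    return sorted(seen)

designs = sample_designs(levels, 1000)
coverage = len(designs) / design_space  # fraction of the space simulated
```

A thousand samples cover well under 0.01% of the combinations, which is why a model that generalises from sparse data is needed.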

By applying a hybrid cross-correlation and deep learning algorithm with the Sigrity SystemSI tool, only 1000 preliminary + 595 secondary SI simulations were needed. The first 1000 simulations reduced the design space from six inputs to four inputs with cross-correlation, and the other 595 simulation data sets were used to train, validate, and test the ANN. Of these, 395 points were used to train the ANN to obtain a model that defines the relationship between the remaining four PCB input parameters and the output (eye diagram performance). Fifty validation points were used to check the effectiveness of the model after training was completed by comparing the predicted output from the ANN model with the actual simulated output. The validation accuracy reached an average of 97.4% with a 1σ of 1.6%. The remaining 150 data points were used for testing and achieved an average accuracy of 97.3% with a 1σ of 1.8%. The results were also verified by matching the predicted output of a randomly selected set of inputs with the actual simulation result using the same inputs.
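The two-stage flow can be approximated in a short sketch: rank the six inputs by how strongly they correlate with the output, keep the top four, then split 595 data points into 395/50/150. Pearson correlation is used here as a stand-in, since the article does not detail the authors' cross-correlation step, and the data is synthetic rather than SystemSI output. All function names and coefficients are assumptions.

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def rank_inputs(rows, outputs):
    """Return input column indices ordered from most to least correlated."""
    n_inputs = len(rows[0])
    scores = [abs(pearson([r[i] for r in rows], outputs))
              for i in range(n_inputs)]
    return sorted(range(n_inputs), key=lambda i: scores[i], reverse=True)

def split(data, n_train=395, n_val=50, n_test=150, seed=0):
    """Shuffle the data and cut it into train, validation, and test sets."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:n_train + n_val + n_test]
    return train, val, test

# Synthetic stand-in for 1000 preliminary simulations: by construction the
# output depends only on inputs 0, 1, 2, and 5, plus a little noise.
rng = random.Random(42)
rows = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(1000)]
outputs = [2.0 * r[0] - 1.5 * r[1] + 1.0 * r[2] + 0.8 * r[5]
           + rng.gauss(0, 0.05) for r in rows]

kept = sorted(rank_inputs(rows, outputs)[:4])  # the four inputs to keep
train, val, test = split(range(595))           # indices of secondary runs
```

Correlation-based screening only detects roughly linear influence, so a real flow might need a different ranking measure for strongly non-linear inputs.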

The total training time, by conservative estimates, is 100 seconds and the testing time for 150 data points is 20 seconds, for a total of two minutes. The largest share of time went into generating the 1000 preliminary and 595 secondary data sets, but this is significantly less than the time needed to run 14.4 million simulations. This preliminary study represents a tremendous improvement in time and efficiency, while sacrificing less than 3% in accuracy. More importantly, the ANN offers the chance to explore and optimise large solution spaces, which is practically impossible using brute-force methods.

Overall, applications using ANNs are still in their infancy, but they are starting to become a reality. From an SI standpoint, we still have a long way to go before having the 'magic button' capability, but we can develop ANN programs that use information from Sigrity tools to help us explore large design spaces.

This is a profound improvement.

Author details: Lawrence Der, Senior Product Marketing Manager, Cadence.