CEVA has also introduced the CDNN-Invite API, the industry’s first deep neural network compiler technology that is able to support heterogeneous co-processing of NeuPro-S cores together with custom neural network engines, in a unified neural network optimising run-time firmware.

The NeuPro-S, along with the CDNN-Invite API, is intended for any vision-based device that needs edge AI processing, including autonomous cars, smartphones, surveillance cameras, consumer cameras and emerging use cases in AR/VR headsets, robots and industrial applications.

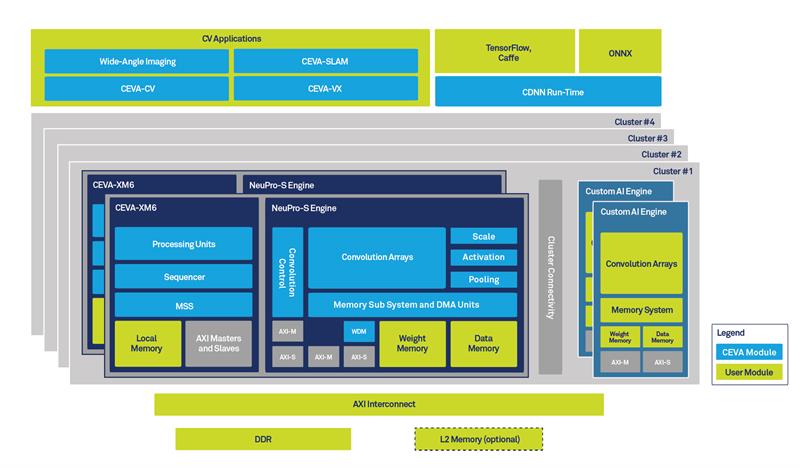

Designed to optimally process neural networks for segmentation, detection and classification of objects within videos and images in edge devices, NeuPro-S includes system-aware enhancements that deliver significant performance improvements. These include support for multi-level memory systems to reduce costly transfers with external SDRAM, multiple weight compression options, and heterogeneous scalability that enables various combinations of CEVA-XM6 vision DSPs, NeuPro-S cores and custom AI engines in a single, unified architecture. This enables NeuPro-S to achieve, on average, 50% higher performance, 40% lower memory bandwidth and 30% lower power consumption than CEVA’s first-generation AI processor.
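
Taken together, the performance and power figures imply a gain in performance per watt. The snippet below is a back-of-the-envelope calculation that assumes the 50% and 30% averages apply to the same workloads, which the announcement does not confirm; the variable names are illustrative.

```python
# Relative to CEVA's first-generation AI processor (baseline = 1.0).
relative_performance = 1.0 + 0.50   # 50% higher performance
relative_power = 1.0 - 0.30         # 30% lower power consumption

# Assumption: both averages hold simultaneously on the same workload.
perf_per_watt_gain = relative_performance / relative_power

print(f"performance per watt: ~{perf_per_watt_gain:.2f}x the baseline")
```

Under that assumption, the result is roughly 2.1 times the first-generation figure.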

The NeuPro-S family includes the NPS1000, NPS2000 and NPS4000, pre-configured processors with 1000, 2000 and 4000 8-bit MACs per cycle, respectively. The NPS4000 offers the highest CNN performance per core, with up to 12.5 Tera Operations Per Second (TOPS) at 1.5GHz, and is fully scalable to reach up to 100 TOPS.
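
As a rough sanity check on these figures, peak throughput can be estimated from the MAC count and the clock rate. The sketch below assumes the common convention that one MAC counts as two operations (a multiply and an add), which the announcement does not state; the function names are illustrative. Under that assumption, the nominal 4000-MAC figure gives about 12 TOPS at 1.5GHz, slightly below the quoted 12.5 TOPS; the announcement does not explain the difference.

```python
import math

OPS_PER_MAC = 2  # assumption: one multiply-accumulate counts as 2 operations


def peak_tops(macs_per_cycle: int, clock_ghz: float) -> float:
    """Estimate peak tera-operations per second for one core."""
    # MACs/cycle * ops/MAC * clock in GHz gives giga-ops/s; divide by 1000 for TOPS.
    return macs_per_cycle * OPS_PER_MAC * clock_ghz / 1000.0


def cores_for_target(target_tops: float, tops_per_core: float) -> int:
    """Smallest number of cores whose combined peak meets the target."""
    return math.ceil(target_tops / tops_per_core)


if __name__ == "__main__":
    print(f"NPS4000 estimate: ~{peak_tops(4000, 1.5):.1f} TOPS at 1.5GHz")
    # Using the quoted 12.5 TOPS per NPS4000 core and the 100 TOPS ceiling:
    print("cores for 100 TOPS:", cores_for_target(100, 12.5))
```

At the quoted per-core figure, 100 TOPS corresponds to eight NPS4000 cores, assuming throughput scales linearly with core count.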

Addressing the growing diversity of application-specific neural networks and processors, the CDNN-Invite API allows customers to incorporate their own neural network engines into CEVA’s Deep Neural Network (CDNN) framework.

CDNN will then holistically optimise and enhance networks and layers to take advantage of each of the CEVA-XM6 vision DSP, NeuPro-S and custom neural network processors. The CDNN-Invite API is already being adopted by lead customers who are working closely with CEVA engineers to deploy it in commercial products.

Ilan Yona, Vice President and General Manager of the Vision Business Unit at CEVA, commented: “The NeuPro-S architecture addresses the root causes of the growing challenges in data bandwidth and power consumption in these devices. With our CDNN-Invite API, we are reducing the entry barriers for the growing community of neural network innovators, allowing them to benefit from the breadth of support and ease of use our CDNN compiler technology offers.”

The fully programmable CEVA-XM6 vision DSP incorporated in the NeuPro-S architecture facilitates simultaneous processing of imaging, computer vision and general DSP workloads in addition to AI runtime processing. This allows customers and algorithm developers to take advantage of CEVA’s extensive imaging and vision software and libraries, including the CEVA-SLAM software development kit for 3D mapping, CEVA-CV and CEVA-VX software libraries for computer vision development, and its recently acquired wide-angle imaging software suite including dewarp, video stitching and Data-in-Picture sensor fusion technology.

The NeuPro-S is available now and has already been licensed to lead customers for automotive and consumer camera applications. The CDNN-Invite API is also available to lead customers and will be available for general licensing by the end of 2019.