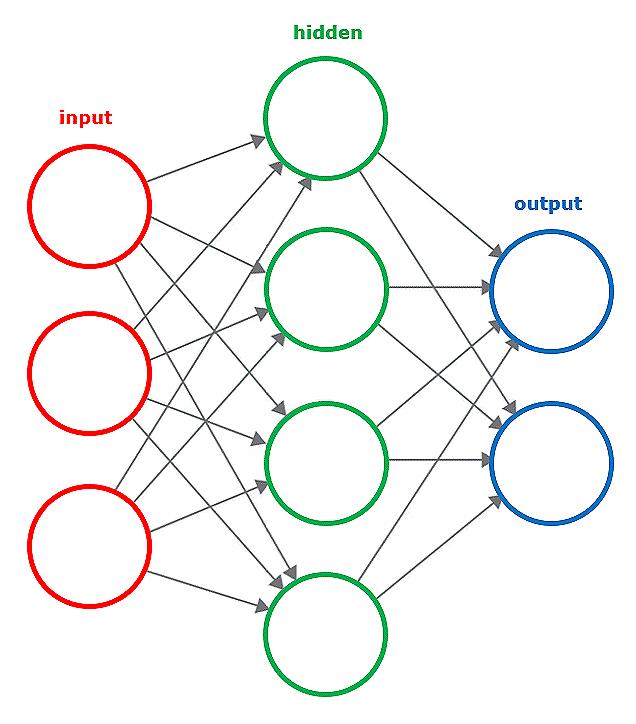

The big breakthrough came in 2006, when University of Toronto computer scientists Geoffrey Hinton and Ruslan Salakhutdinov found a way to train networks that were significantly more complex than those used previously. The classical neural network used just two or three layers to process data (see Figure 1). The Toronto team worked on ‘deep autoencoders’, which interposed many more hidden layers between the input and output layers.

The additional hidden layers made it possible to work on much more complex inputs, such as high-resolution images and chunks of human speech. For a simple neural network, the amount of data in such inputs is overwhelming: a network with just a few layers cannot easily home in on the distinct features that identify objects or phrases among millions of pixels or audio samples.

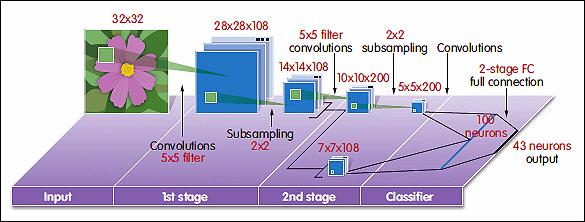

The deep autoencoder, on the other hand, brought the ability to crunch data with many dimensions, such as a 1,000 × 1,000 pixel image, into more convenient forms. The hidden layers pick out elements such as shapes and edges that downstream layers can recognise more easily. In this way, an image that starts out as a mass of pixels can lead the network to conclude that one of the things in the picture is a cat.
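
A minimal sketch of the shape of a deep autoencoder, in plain Python. The layer widths (64 inputs squeezed to a 4-number code), the tanh activation and the random, untrained weights are illustrative assumptions, not details of the Toronto work; the point is only to show how stacked hidden layers narrow a high-dimensional input into a compact code and then widen it back out for reconstruction.

```python
import math
import random


def dense(x, weights, biases):
    """One fully connected layer: each output sums every input times a weight, then applies tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def random_layer(n_in, n_out, rng):
    """Hypothetical untrained layer: small random weights stand in for learned ones."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def build_autoencoder(encoder_sizes, seed=0):
    """Encoder widths narrow to a bottleneck; the decoder mirrors them back out."""
    rng = random.Random(seed)
    widths = encoder_sizes + encoder_sizes[-2::-1]  # e.g. 64, 32, 16, 4, 16, 32, 64
    return [random_layer(a, b, rng) for a, b in zip(widths, widths[1:])]


def run(layers, x):
    """Forward pass that keeps every layer's activations so their sizes can be inspected."""
    activations = [x]
    for weights, biases in layers:
        x = dense(x, weights, biases)
        activations.append(x)
    return activations


layers = build_autoencoder([64, 32, 16, 4])
patch = [random.random() for _ in range(64)]  # stands in for an 8 x 8 pixel patch
print([len(a) for a in run(layers, patch)])  # [64, 32, 16, 4, 16, 32, 64]
```

In a trained autoencoder, the four numbers in the middle would be the compact form that downstream layers work with; training itself is omitted here.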

The potential of deep networks such as convolutional neural networks (CNNs) for real-time control became apparent in a test by a team from the Swiss research institute IDSIA. Its 2011 experiment found that a committee of trained CNNs was better at recognising road signs than people tested on the same images. In some cases, the machine could also extract information from images that were too badly degraded for humans to read.
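
A collection of trained CNNs has to combine its members' outputs somehow. A minimal sketch, assuming the simplest combination rule (average each network's class probabilities and pick the highest); the sign classes and probability values are invented for illustration.

```python
def committee_vote(prob_lists):
    """Average per-class probabilities across networks; return (winning class index, averages)."""
    n_models = len(prob_lists)
    n_classes = len(prob_lists[0])
    averaged = [sum(p[c] for p in prob_lists) / n_models for c in range(n_classes)]
    winner = max(range(n_classes), key=lambda c: averaged[c])
    return winner, averaged


labels = ["no vehicles", "no overtaking", "30mph limit"]  # hypothetical classes
outputs = [
    [0.7, 0.2, 0.1],  # network A
    [0.3, 0.6, 0.1],  # network B gets this image wrong on its own
    [0.6, 0.3, 0.1],  # network C
]
winner, scores = committee_vote(outputs)
print(labels[winner])  # no vehicles
```

Network B's mistake is outvoted because the other two are confident in the right answer.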

The same group employed GPUs to speed the training process and to run the trained networks.

Figure 1: A simple representation of a neural network

Although GPUs were never designed for neural-network processing, their high floating-point throughput suits the billions of multiply-add operations that neurons perform when weighting their inputs. The use of GPUs has led Nvidia to open a sideline in processors for advanced driver assistance systems (ADAS), and the company’s management believes that the autonomous driving systems that evolve out of ADAS will depend heavily on CNN processing.

The GPU is not alone in chasing embedded-CNN applications. Cadence and Ceva have reworked their DSP architectures to be more efficient in handling neural-network processing. Google and Qualcomm, meanwhile, have opted for silicon tuned specifically to CNN handling.

One of the biggest issues with CNN processing is power consumption. DSPs and GPUs can easily perform many floating-point calculations in parallel, but the accesses needed to pull inputs and weights into local memory are less predictable than those needed for 2D and 3D graphics, which makes caching difficult. Although pipelining hides access latency, the frequent trips to main memory still demand a lot of energy. On top of that is the sheer quantity of calculations needed.

Some layers in a typical CNN, usually the final ones, are fully connected: each neuron has to take inputs from every neuron in the preceding layer. Even with the dimension reduction that deep architectures provide, this entails billions of calculations. The workload is orders of magnitude higher during training, which involves moving forwards and backwards through the network iteratively to compute the gradients used to adjust each weight. Training an image processor may call for 10²² multiply-add calculations.
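
The cost of a fully connected layer follows directly from its definition: one multiply-add per connection. A minimal sketch; the 4,096-by-4,096 layer size and 32-bit weights are illustrative assumptions. It also hints at why caching struggles: with one input at a time, each weight fetched from memory is used for just one multiply-add.

```python
def fully_connected(inputs, weights, biases):
    """Each output neuron takes a weighted sum over every input neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def fc_cost(n_in, n_out, bytes_per_weight=4):
    """Multiply-adds and weight bytes needed to push one input vector through the layer."""
    macs = n_in * n_out                     # one multiply-add per connection
    weight_bytes = macs * bytes_per_weight  # every weight is read once and used once
    return macs, weight_bytes


print(fully_connected([1.0, 2.0], [[0.5, 0.25], [1.0, -1.0]], [0.0, 1.0]))  # [1.0, 0.0]
print(fc_cost(4096, 4096))  # (16777216, 67108864)
```

That is roughly 16.8 million multiply-adds and 67MB of 32-bit weights for a single layer processing a single input.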

One answer is to split the workload. Companies working on these systems do not expect CNNs deployed in ADAS to do training locally. Instead, dashboard computers will log incidents for which the onboard models appeared to fare badly and upload preprocessed sensor inputs to the cloud. Servers that collect the data from the many cars on the road will, at regular intervals, crunch the new material and deliver updated models to vehicles.


Offloading training to servers brings a further benefit to CNNs deployed in embedded systems. Training calls for high-precision arithmetic to avoid ‘baking in’ errors during the process; typically, that means 32-bit floating point. The launch of Google’s custom CNN processor, the Tensor Processing Unit (TPU), underlined the kinds of corners that can be cut to make the technology more readily deployable. The device is designed to handle inferencing using quantised data and, in many cases, 8-bit resolution is enough. Some have suggested that, for carefully selected paths, 4-bit precision is all that is needed. For others, no bits are needed at all, because the path can be dropped entirely.
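
Quantisation replaces each 32-bit weight with a small integer plus a shared scale factor. A minimal sketch of one common scheme, symmetric linear quantisation with a single scale per tensor; this is an assumption for illustration, not a description of how Google’s device quantises data. The `bits` parameter shows how the same invented weights fare at 8-bit and 4-bit resolution.

```python
def quantise(values, bits=8):
    """Map floats to signed integers in [-qmax, qmax] using one scale factor."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantise(q, scale):
    """Recover approximate floats; rounding error is at most scale / 2 per value."""
    return [qi * scale for qi in q]


weights = [0.82, -0.41, 0.05, -1.27, 0.003]
for bits in (8, 4):
    q, scale = quantise(weights, bits)
    worst = max(abs(w - r) for w, r in zip(weights, dequantise(q, scale)))
    print(bits, q, round(worst, 4))
```

Going from 32 to 8 bits cuts weight storage and memory traffic by a factor of four; whether the larger error at 4 bits is tolerable depends on the path.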

Work by Cadence and others has suggested that it is possible to prune a network after training. Many of the calculations in fully connected layers, among others, can be safely skipped because they have little effect on the overall result: their contribution to each neuron’s output is dominated by other paths. Pruning is subject to a law of diminishing returns, however, and at some point the connection surgery goes too far and the network loses performance.
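
A minimal sketch of post-training pruning, assuming the simplest criterion (remove the connections with the smallest weight magnitudes); published work may use other criteria, and the weights here are invented. Pruning half the connections barely moves the neuron’s output, while pruning three-quarters removes a path that mattered.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute values."""
    n_prune = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def neuron(inputs, weights):
    """Weighted sum feeding one neuron (activation omitted for clarity)."""
    return sum(w * x for w, x in zip(weights, inputs))


weights = [0.9, -0.02, 0.01, -0.7, 0.03, 0.5, -0.01, 0.04]
inputs = [1.0] * len(weights)
for fraction in (0.0, 0.5, 0.75):
    out = neuron(inputs, prune_by_magnitude(weights, fraction))
    print(fraction, round(out, 2))  # outputs 0.75, then 0.74, then 0.2
```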

Another potential source of energy savings is to tolerate errors. Approximate-computing hardware operates very close to the transistors’ threshold voltage, so close that some computations do not complete on time. But if the number of failed calculations is low, overall network performance may still be acceptable, particularly where the network processes data continuously, as with video and audio streams.
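
The effect of occasional failed calculations can be explored in simulation. A minimal sketch, assuming a crude fault model in which each multiply-add independently fails with a fixed probability and contributes nothing; real near-threshold failures are more varied. Comparing the faulty result with the exact one, and checking whether the sign of the output (and hence a decision) changes, gives a feel for how many failures a network might tolerate.

```python
import random


def unreliable_dot(inputs, weights, failure_rate, rng):
    """Dot product in which each multiply-add is dropped with probability failure_rate.
    Crude stand-in (an assumption) for timing failures in near-threshold hardware."""
    total = 0.0
    for w, x in zip(weights, inputs):
        if rng.random() >= failure_rate:  # this operation completed in time
            total += w * x
    return total


rng = random.Random(1)
weights = [rng.gauss(0.0, 1.0) for _ in range(1000)]
inputs = [rng.random() for _ in range(1000)]
exact = unreliable_dot(inputs, weights, 0.0, rng)
for rate in (0.001, 0.01, 0.1):
    faulty = unreliable_dot(inputs, weights, rate, rng)
    print(rate, round(abs(faulty - exact), 3), (faulty > 0) == (exact > 0))
```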

Although silicon suppliers are keen to optimise CNN performance, there is still no guarantee that CNNs will reach mass deployment outside server farms. There are questions over their reliability and their ability to deal with problems more complex than recognition. One shortcoming is the difficulty of training networks to make complex plans. Google has trained a network to play Go, having already built a system that plays simpler computer games. But the forward planning that works in a structured environment such as a game may not translate well to the less predictable real world.

The strength of the CNN approach is also the source of one of its main weaknesses. Much network training can be performed in an unsupervised way; the network picks out features in images by itself, then homes in on them. Supervised training on labelled data then lets the network declare that an image contains a black cat or a 30mph road sign. But the features the network chooses may be very different to those used by people.

By homing in on features the human eye does not readily see, training can go down unexpected paths with equally unexpected results. An assessment of CNN performance by Google researchers found curious anomalies in highly trained systems, where two practically identical photographs led to wildly different outputs. Often, one was classified correctly but the network failed to identify the other. In the road-sign project, the network had trouble telling the difference between a sign that prohibits all vehicles and one that bans overtaking: it did not pick up on the second car shown in the ‘no overtaking’ sign.
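
Why near-identical inputs can diverge is easiest to see in a toy linear scorer rather than a CNN. A minimal sketch, assuming a linear model with invented weights and inputs: nudging every input by a tiny amount in the direction that lowers the score changes the total by `epsilon` times the sum of the weight magnitudes, which grows with the number of inputs. This illustrates one explanation offered for such anomalies; it does not reproduce the Google study.

```python
def score(weights, x):
    """Positive score means 'sign present'; negative means 'absent' (toy classifier)."""
    return sum(w * xi for w, xi in zip(weights, x))


def nudge(weights, x, epsilon):
    """Move each input by at most epsilon against the sign of its weight."""
    def sign(w):
        return (w > 0) - (w < 0)
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


n = 1000
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]
image = [0.52 if i % 2 == 0 else 0.50 for i in range(n)]  # pixel values on a 0-1 scale
tweaked = nudge(weights, image, 0.02)

print(round(score(weights, image), 3))    # 1.0
print(round(score(weights, tweaked), 3))  # -1.0
print(max(abs(a - b) for a, b in zip(image, tweaked)) <= 0.02 + 1e-12)  # True
```

No pixel moves by more than 0.02, yet the thousand small shifts add up to a change of 2.0 in the score, enough to flip the decision.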

The difficulty of verifying the performance of CNNs may prove to be the technology’s Achilles’ heel when it comes to developing safety-critical systems. But if that issue and the problem of energy consumption can be overcome, possibly by using complementary processing techniques that act as watchdogs, CNNs could become commonplace in real-time control.