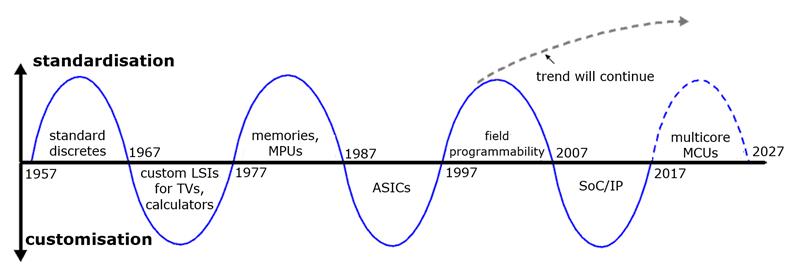

A recent GSA report – Charting a New Course for Semiconductors – also highlighted the possibility of a swing towards programmability based on open source software and next generation reprogrammable chips like xCORE. This swing to programmability coincides with the development of the IoT and with major initiatives by technology companies to add voice interfaces to their products.

Examples such as Apple’s Siri, Microsoft’s Cortana and Facebook’s M are showing the potential for voice interfaces in consumer applications. Amazon is developing voice driven home ecosystems with Alexa, while teleconferencing systems are increasingly using smart technology in the enterprise. These products are coupled with ‘always on’ broadband links to natural language processing engines in the cloud, which are driving rapid innovation in the processing algorithms both in the client product and the cloud services.

Figure 1: Makimoto’s Wave extended to 2027

xCORE multicore microcontrollers, developed by XMOS, are well suited to these diverse voice enabled IoT applications. The combination of high performance control, DSP and I/O flexibility makes xCORE a suitable candidate for single chip solutions in a range of control and interfacing applications.

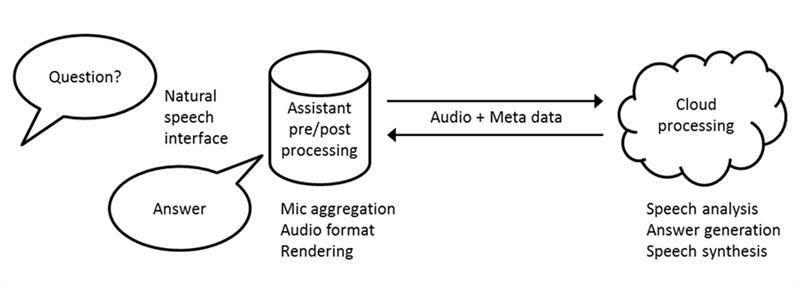

A typical example of such a system – a network connected voice controlled assistant – is shown in Figure 2. The user’s voice is detected, captured and preprocessed locally before being sent to a cloud server for speech and semantic analysis. The generated answer is then sent back to the assistant for playback. The assistant may also apply post-processing algorithms to enhance audio rendering.
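The local detection step can be illustrated with a minimal energy-based voice activity detector. This is only a sketch under stated assumptions: the function names, the 160 sample frame length and the -30 dB threshold are hypothetical choices for illustration, not details of the system in Figure 2, and a production detector would normally use more robust features than frame energy.

```python
import math

def frame_energy_db(frame):
    """Mean power of one frame in dB relative to full scale (samples in [-1, 1])."""
    power = sum(s * s for s in frame) / len(frame)
    return 10.0 * math.log10(power + 1e-12)  # small offset avoids log(0)

def detect_voice(samples, frame_len=160, threshold_db=-30.0):
    """Return one boolean per complete frame: True where energy exceeds the threshold."""
    flags = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        flags.append(frame_energy_db(frame) > threshold_db)
    return flags

# Usage: 10 ms of near silence followed by 10 ms of a 440 Hz tone at 16 kHz.
quiet = [0.001] * 160
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(160)]
flags = detect_voice(quiet + tone)
```

Only frames flagged as active would need to be captured and forwarded, which is one way the interface node can avoid streaming silence to the cloud.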

Figure 2: Example of distributed information processing system

A distinctive feature of such systems is that computation is distributed among the resources in the system. The interface node preprocesses the raw data provided by its sensors, transforming it into a format suitable for subsequent stages. Preprocessing the raw data allows for real time adaptation, reduces the bandwidth required between nodes, and enhances system reliability and security.
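The bandwidth reduction can be made concrete with a short calculation. All figures here are hypothetical and chosen only for illustration: seven microphones each producing a 1-bit stream at 3.072 MHz on the raw side, and a single 16 kHz, 16-bit channel after local preprocessing.

```python
def bit_rate(channels, sample_rate_hz, bits_per_sample):
    """Uncompressed data rate in bits per second."""
    return channels * sample_rate_hz * bits_per_sample

# Hypothetical raw sensor data: 7 one-bit microphone streams at 3.072 MHz.
raw = bit_rate(channels=7, sample_rate_hz=3_072_000, bits_per_sample=1)

# Hypothetical preprocessed output: one 16-bit channel at 16 kHz.
processed = bit_rate(channels=1, sample_rate_hz=16_000, bits_per_sample=16)

reduction = raw / processed
```

Under these assumptions the link carries 256 kbit/s instead of about 21.5 Mbit/s, a reduction by a factor of 84, before any further compression or voice activity gating is applied.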

This changes the role of interfacing applications fundamentally: in addition to control and communication, these applications must now also be able to handle DSP functions. For typical voice interfaces – in particular, those requiring bidirectional communication – low latency DSP is necessary to maintain a pleasant user experience.

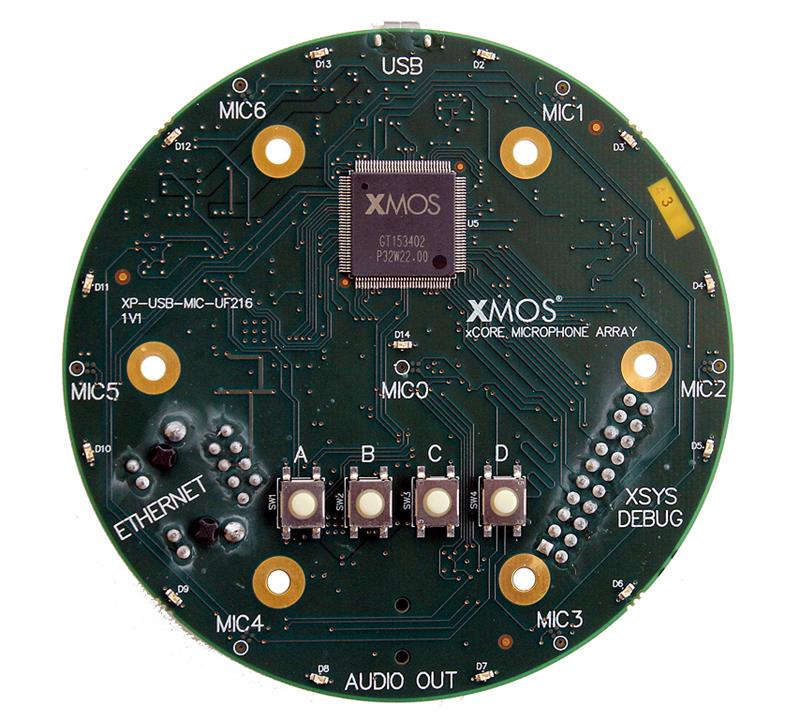

Putting more programmability into the platform opens new ways to improve system performance. An array with more microphones can be used to track a speaker’s position, providing new ways of reducing noise and interference. A programmable platform also allows the control and signal processing algorithms to evolve: different beamforming and direction of arrival estimation algorithms can be implemented, each based on analysing the temporal alignment of the input signals. Coupling these with a voice activity detector and a key word detector implemented on the same programmable processor builds a complete front end for a network connected voice controlled assistant.
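As an illustration of temporal alignment analysis, the sketch below estimates the delay between two microphone signals by cross-correlation, converts it to an arrival angle, and then applies a basic delay-and-sum beamformer. It is a minimal sketch, not the algorithm used on the xCORE board: the function names, the far-field geometry, the 43 mm microphone spacing and the 48 kHz sample rate are all assumptions made for the example, and all channels are assumed to have equal length.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at room temperature

def estimate_delay(ref, other, max_lag):
    """Return the lag (in samples) at which `other` best aligns with `ref`.

    A positive result means `other` receives the sound later than `ref`.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, r in enumerate(ref):
            m = n + lag
            if 0 <= m < len(other):
                score += r * other[m]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def arrival_angle(lag, sample_rate, spacing_m):
    """Convert a lag between two microphones into an angle from broadside (degrees)."""
    x = lag / sample_rate * SPEED_OF_SOUND / spacing_m
    x = max(-1.0, min(1.0, x))  # clamp so rounding cannot push asin out of range
    return math.degrees(math.asin(x))

def delay_and_sum(channels, lags):
    """Undo each channel's lag, then average the aligned channels."""
    length = len(channels[0])
    out = []
    for n in range(length):
        acc = 0.0
        for ch, lag in zip(channels, lags):
            m = n + lag
            if 0 <= m < length:
                acc += ch[m]
        out.append(acc / len(channels))
    return out

# Usage: the same pulse reaches the second microphone three samples later.
ref = [0.0] * 64
other = [0.0] * 64
ref[20] = 1.0
other[23] = 1.0
lag = estimate_delay(ref, other, max_lag=8)
angle = arrival_angle(lag, sample_rate=48000, spacing_m=0.043)
beam = delay_and_sum([ref, other], [0, lag])
```

Signals arriving from the estimated direction add coherently after alignment, while noise from other directions adds incoherently, which is the basis of the noise and interference reduction described above.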

Figure 3: The programmable xCORE array microphone evaluation board

This can bring other advantages for the designer. Processing signals locally can lead to overall power savings: rather than sending raw data over the broadband link, the node analyses the data itself, which also gives a faster, more responsive implementation. The cloud, which can store and aggregate large amounts of data, can then apply further semantic processing to improve the quality of the requests and supply parameters that are downloaded to modify the local software.

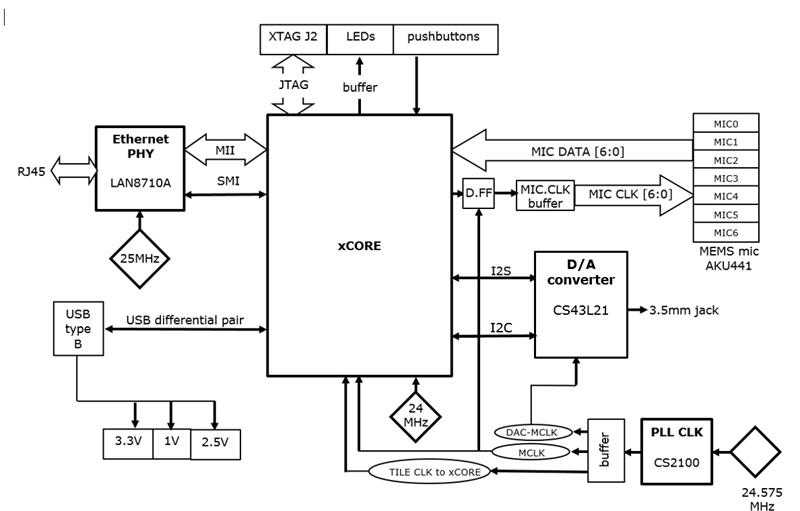

Figure 4: xCORE array microphone block diagram

xCORE devices contain a set of logical cores that provide high performance control and DSP, with fast deterministic I/O response for implementing flexible I/O structures. This combines the advantages of a fixed function implementation, with its dedicated signal chains, and the flexibility of a programmable engine.

Large, tightly coupled memories and optimised DSP libraries give developers excellent performance on algorithms that require intensive random memory accesses, such as the fast FIR algorithm used to process data from pulse density modulation (PDM) microphones. On such algorithms, the xCORE-200 architecture delivers performance similar to, or better than, that of dedicated standalone DSPs.
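To show what FIR processing of PDM data involves, the sketch below low-pass filters a 1-bit stream and decimates it to multi-bit samples. It is a plain direct-form filter written for clarity, not the optimised fast FIR from the XMOS libraries, and it uses a single stage where practical designs typically use several. The function names, the 64 taps, the cutoff and the decimation factor of 16 are hypothetical, and the first-order sigma-delta modulator exists only to generate test input.

```python
import math

def lowpass_taps(num_taps, cutoff):
    """Hamming-windowed sinc low-pass taps; `cutoff` is a fraction of the input rate."""
    mid = (num_taps - 1) / 2.0
    taps = []
    for n in range(num_taps):
        x = n - mid
        if x == 0:
            sinc = 2.0 * cutoff
        else:
            sinc = math.sin(2.0 * math.pi * cutoff * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        taps.append(sinc * window)
    gain = sum(taps)
    return [t / gain for t in taps]  # normalise to unity gain at DC

def pdm_to_pcm(bits, taps, factor):
    """Filter a 1-bit PDM stream and keep one output per `factor` input bits."""
    symbols = [1.0 if b else -1.0 for b in bits]
    out = []
    for start in range(0, len(symbols) - len(taps) + 1, factor):
        window = symbols[start:start + len(taps)]
        out.append(sum(t * s for t, s in zip(taps, window)))
    return out

def sigma_delta(samples):
    """First-order sigma-delta modulator, used here only to create test input."""
    bits, integrator, feedback = [], 0.0, 0.0
    for s in samples:
        integrator += s - feedback
        bit = integrator >= 0.0
        feedback = 1.0 if bit else -1.0
        bits.append(bit)
    return bits

# Usage: encode a constant level of 0.25 as PDM, then recover it.
bits = sigma_delta([0.25] * 1024)
taps = lowpass_taps(num_taps=64, cutoff=0.01)
pcm = pdm_to_pcm(bits, taps, factor=16)
```

Each output sample reads a different window of the input and the full tap table, which is why this kind of workload benefits from fast, tightly coupled memory.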

Conclusion

Combining very low latency, programmability and system level integration with cost effective real-time DSP capabilities provides an innovative platform for voice enabled smart interfaces. For applications based on natural speech interaction, such as connected assistants, the xCORE architecture pairs sub millisecond latency with the advantages of programmability, ready for the next generation of user interfaces.