The high bandwidths and low latencies demanded by operators are also having a huge impact on the way data centres are networked over both short and long distances.

This fast shifting landscape is challenging optical transceiver and module suppliers to change their pace of innovation. Companies that focus on these devices – such as Oclaro, Finisar, JDSU, NeoPhotonics, Sumitomo and Source Photonics – have to use a mix of vertical integration and merchant supply to keep up with rapidly changing data centre demands while remaining competitive.

The brightest prospects are in the coherent dense wavelength division multiplexing (DWDM) modules sector. “This is the fastest growing portion of the market,” Matthew Schmitt, founder and CEO of consultancy Cignal AI, told New Electronics. “Non coherent solutions will be used by some large web scale providers, but coherent has a much longer runway in terms of cost reduction and, ultimately, is much easier to deploy.” Until earlier this year, Schmitt was a research director at IHS Infonetics.

Perhaps the biggest challenge – and certainly the biggest opportunity – is the shift from 40G to 100G Ethernet networks. “Shipping of 100GbitE and coherent WDM modules has started in earnest,” said Adam Carter, chief commercial officer at Oclaro, “and it is the demand from data centre operators that is fuelling the shift from 10G and 40G.”

Oclaro’s roots go back to the late 1980s, when it was founded in the UK as Bookham Technology. The company grew incrementally, absorbing the optical components activities of GEC Marconi and Nortel Networks, amongst others. It later merged with Avanex to create Oclaro, which still operates the old Caswell fab in the UK as a pilot manufacturing line for InP based devices.

The move to 100G should not be such a great surprise, suggests Carter. After all, when you focus on applications inside the data centre, most already run a significant percentage of their high bandwidth data over optical fibre, using serialiser/deserialiser circuits such as those in Broadcom’s Tomahawk and Jericho devices to pack data from the parallel buses used inside processors into a single stream for each link.

“These are now driving the density on the line card face blades to, for instance, 32 QSFP ports on the front panel. If you double stack this and use the QSFP28 format, that bandwidth is equivalent to 3.2Tbit/s for a 1RU line card.” That trend will only continue, Carter suggests, and he anticipates that, by the second half of this year, we might see some data centres rolling out 100Gbit/s in those switches.
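The 3.2Tbit/s figure follows directly from the port count. As a quick check, here is a minimal Python sketch, assuming each QSFP28 port carries a single 100Gbit/s link; the function name is purely illustrative.

```python
def faceplate_bandwidth_gbps(ports: int, gbps_per_port: int) -> int:
    """Aggregate front panel bandwidth in Gbit/s for a line card."""
    return ports * gbps_per_port


QSFP28_GBPS = 100  # assumption: one 100GbE link per QSFP28 port
PORTS_PER_1RU = 32  # port count quoted by Carter

total_gbps = faceplate_bandwidth_gbps(PORTS_PER_1RU, QSFP28_GBPS)
print(f"{total_gbps / 1000} Tbit/s")  # prints "3.2 Tbit/s"
```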

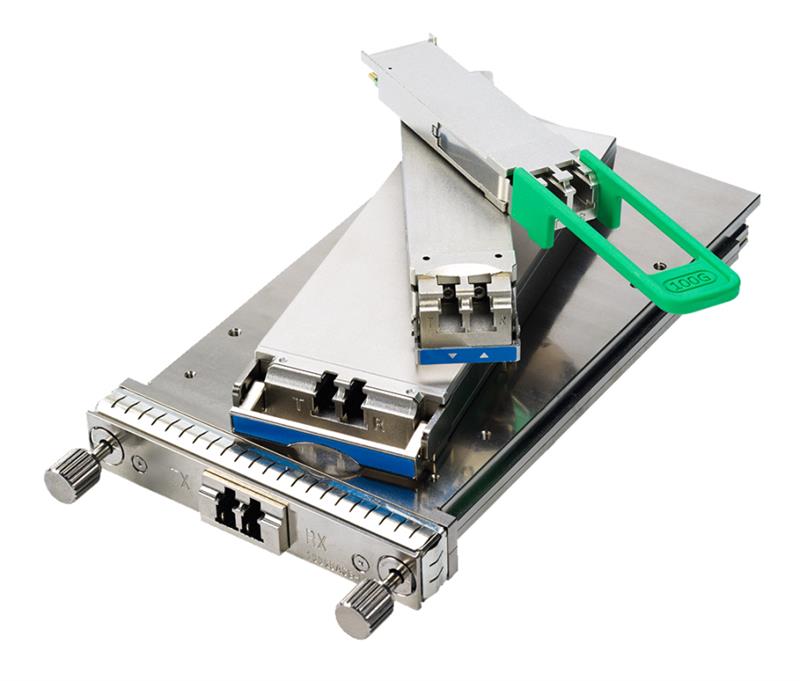

Oclaro's optical interface portfolio now features a 100GbE QSFP28 transceiver module, designed for high port densities

Carter suggests this is good news for the optical components companies and network equipment manufacturers because the anticipated volumes should result in a very healthy industry for many years to come, with demand likely to outstrip supply at some point. However, he questions whether the industry can continue its rapid pace of innovation.

“We’re all set to deploy 100G in the second half of 2016 and the industry is already talking about 400G. The amount of money that will need to be spent in R&D to enable 400G is going to be substantial and it’s not clear the industry is set up in a way that will allow that to happen naturally.”

Component and module manufacturers are already investing heavily to support data centre volumes and, when that investment is not amortised, it has a negative financial impact on these companies, Carter suggests.

This is further compounded by the fact that the industry wants these innovations to appear faster than ever, placing enormous pressure on the entire distribution chain.

“Since hyperscale data centre operators and cloud providers own the boxes at both ends of the network,” he added, “we are seeing a rapid proliferation in the number of optical interfaces and connection choices.”

At 10G, there were two choices; at 100G, this has increased to eight. From a component supplier’s point of view, this makes leveraging economies of scale across operations much more difficult, warns the Oclaro executive.

With 100G in sight, the industry still wants modules to be better, faster, cheaper and to consume less power – and it wants these products to be brought to market more quickly. This is likely to place enormous pressure on the core optical components industry, which has been undergoing significant change.

Carter suggests the optical components and modules sector faces other big challenges and issues. For instance, higher data rates and new innovations, such as on-board optics, will require a level of investment that will only be possible with a stable and healthy optical component industry with funds available for significant R&D investment. This will require an industry with competitive gross margins that allow it to continue funding the move to higher bandwidth, smaller form factors, higher density and lower power. This, Carter maintains, is not a given at the moment.

The question of the sector’s profitability has very much become a ‘hot topic’, according to Vladimir Kozlov, CEO of consultancy LightCounting. He notes that pricing of 100Gbit Ethernet modules has become very aggressive and that, at the recent Optical Fibre Communications Conference (OFC), ‘the target of $1/Gbit/s set out in 2015 by Yuval Bachar – formerly of Facebook and now with LinkedIn – was revised to $4/Gbit/s’. He suggests that, between them, Amazon, Google and Microsoft spent $250 million on Ethernet transceivers last year.

Oclaro’s Carter notes that the number of form factors and packaging formats being adopted is growing rapidly, so the industry urgently needs more standardisation effort to make it easier to develop products that work through the entire supply chain. And he adds that, as bandwidth demands increase beyond 100G, it is becoming increasingly important for optical companies to be able to manufacture the high speed lasers and receivers capable of supporting those higher data rates.

Carter warns that, currently, there are fewer than five laser fabs in the world with this capability (at more than 25G) and this represents an opportunity for the industry to undergo much needed consolidation.

Seeding the cloud

Internet service providers and social networking companies such as Google, Microsoft, Facebook and LinkedIn are beginning to call most of the shots when it comes to how data centre interconnection (DCI) is designed and implemented.

The companies are shaking up the business by designing custom hardware and software and by sourcing much of the hardware, even components such as optical modules, directly from suppliers, bypassing the way in which DCI has been implemented previously.

An important recent example of this strategy was the announcement in March at the Optical Fibre Communications Conference (OFC), when Microsoft and component supplier Inphi said they were collaborating on modules that will plug directly into switches and routers. The move could spell trouble for those developing dedicated DCI systems and boxes.

The idea is that large data centre operators can ‘stitch together’ regionally distributed data centres so – when appropriate – they can work like a hyper data centre. The approach allows data centres 80km or less apart to be connected almost switch to switch, essentially by running Ethernet directly on a dense wavelength division multiplexing (DWDM) link, with only amplifiers and multiplexers/demultiplexers in between.

Inphi has released what it calls the ColorZ reference design for a DWDM QSFP28 form factor pluggable transceiver that, importantly, uses the PAM-4 (four level pulse amplitude modulation) signalling specification for single wavelength 100Gbit/s transmission, delivering up to 4Tbit/s of bandwidth over a single fibre.
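For readers unfamiliar with PAM-4, the scheme sends two bits per symbol by using four amplitude levels, so the symbol rate is half the bit rate (ignoring any coding overhead). The Python sketch below illustrates the idea only: the Gray coded mapping and the normalised levels of -3, -1, +1 and +3 are common textbook conventions assumed here, not details of Inphi's design.

```python
# Assumed Gray coded mapping: neighbouring amplitude levels differ by one bit.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def pam4_encode(bits):
    """Map a flat list of bits onto PAM-4 symbols, two bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM-4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


symbols = pam4_encode([0, 0, 0, 1, 1, 1, 1, 0])
print(symbols)  # [-3, -1, 1, 3]: eight bits become four symbols
```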

“We are very excited to be working with Microsoft on this project and believe it was a combination of a deep knowledge of PAM-4 and on-going projects in silicon photonics that clinched it for us as these enable a module that is low cost, low power – just 4.5W consumption – and the right form factor,” Loi Nguyen, co-founder and head of Inphi’s optical interconnect group told New Electronics.
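The quoted figures can be combined into a rough budget. The Python sketch below assumes fibre capacity scales linearly with the number of 100Gbit/s modules multiplexed onto it and that every module draws the full 4.5W; both are simplifications made for illustration and the function names are hypothetical.

```python
MODULE_GBPS = 100    # per module line rate quoted for the design
FIBRE_GBPS = 4_000   # "up to 4Tbit/s" per fibre
MODULE_WATTS = 4.5   # per module consumption quoted by Nguyen


def modules_per_fibre(fibre_gbps: int, module_gbps: int) -> int:
    """Modules needed at one end of the link to fill the fibre."""
    return fibre_gbps // module_gbps


def milliwatts_per_gbps(watts: float, gbps: int) -> float:
    """Power cost of each Gbit/s of capacity, in mW."""
    return watts * 1000 / gbps


count = modules_per_fibre(FIBRE_GBPS, MODULE_GBPS)
print(count)                                           # 40 modules
print(milliwatts_per_gbps(MODULE_WATTS, MODULE_GBPS))  # 45.0 mW per Gbit/s
print(count * MODULE_WATTS)                            # 180.0 W per link end
```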

As well as making use of silicon photonics based functional integration of modulators, photodetectors and multichannel muxes and demuxes, the design incorporates the in-house developed InphiNity Core DSP engine.

Microsoft outlined three years ago how it saw its data centre needs evolving in the medium to long term, and this included a 100G capable solution for distances of up to 80km. But it realised this was not available for deployment in the late 2016 time frame and decided to put out a tender to component suppliers to spur development.

There were standard 100G optical modules and work on technologies such as IEEE 802.3ba, 100GBASE-CR4 and 100GBASE-LR4 for point-to-point links of less than 10km in the required QSFP packaging, as well as long-haul solutions (traditional coherent QPSK, 16QAM) suitable for links of more than 100km.

Microsoft’s director of network architecture, Jeff Cox, said at OFC that the company could not use established 100G coherent solutions as they would not scale economically for the shorter links, so it went for the so-called ‘direct detect’ approach, specifically for those intermediate distances. Cox said Microsoft chose Inphi because its proposal seemed the most straightforward.

The companies demonstrated the module at OFC plugged into switches from Microsoft’s regular suppliers, including Cisco and Arista Networks, working with amplification and multiplexing units from ADVA’s CloudConnect line.

Nguyen said the modules would be packaged by a ‘non traditional supplier’ and that volume production is scheduled for Q4 2016. Both he and Cox stressed the deal is non exclusive and that both companies hope to work with others on the modules. And other web-scale companies could adopt the same approach, if it fitted their needs.

Following the OFC announcement, some suggested this made existing optical DCI/coherent DWDM solutions obsolete, but this seems most unlikely. Nguyen himself believes coherent will not go away and told New Electronics: “We believe we will have a significant power and cost advantage for a few, maybe three, years, by which time something newer, better and maybe more cost efficient will come along. That is the nature of this business.”

Neither does the development mean the end of DCI boxes, as Jim Theodoras, VP of global business development at ADVA, stressed. “The optical line shelf is not going away any time soon. While an exciting development, it is a niche application for a specific case defined by one player in data centres, admittedly huge.”

One major drawback is that Inphi’s solution requires dispersion compensation on the fibre because it relies on PAM – an analogue transmission scheme – whereas coherent DWDM techniques do not. Dispersion compensation would add both capital equipment costs and operational expense.