In an ideal world, we would design embedded software to work correctly all the time. But the processes the industry has evolved over decades have failed to achieve this. Instead, they have created situations that not only seem bizarre to those outside software development, but are also causing severe financial problems for all involved.

Take bug hunting, a process often used well into development. “We tell people: go find some bugs. When they say they can’t find any more, we treat that as a negative,” says Vector Software CTO John Paliotta. “Having no bugs should be a good thing, but we are worried when we can’t find them because we suspect they are still there. Teams have, unwittingly, been formalising incorrect behaviour.”

Many bugs make it into production and sit in the system waiting to be uncovered by a rare event or by a later modification that alters the behaviour of code around the bug.

In effect, by skimping on testing for correct behaviour up front, Paliotta argues, organisations have allowed themselves to build a massive technical debt that surfaces during the maintenance phase. It is a debt that needs to be repaid when the time comes to make changes or fix a customer’s problem with the deployed system. And if the problem means 1 million products need to be recalled, that represents a major payment.

The debt is accumulating, Paliotta believes. “I feel that only a very small portion of the code in existence today is going to get thrown away. Because of the expense of building software, companies want to prolong its life. So you are maintaining the software for a very long time.

“Early in my career, I read about technical debt. The solution then was to ‘bulldoze the house and declare bankruptcy’ – throw it all away and build again from scratch. That seemed fine when you had an application that was just 100,000 lines of code in total, small enough to be relatively easy to replace,” Paliotta recalls.

“We are now at the point where we can’t afford to ‘declare bankruptcy’: the code base is too big and complicated. What does that mean for development? If bankruptcy is not an option, you need to find another way to deal with the debt. You need to spend less, save more and take some of the capital you build up to pay down the debt.”

One way to deal with the overhanging debt would be to take subsystems, analyse and refactor them to be more maintainable. But that is difficult for many code bases. “There are parts that are fragile. People tend not to refactor and fix them out of fear,” Paliotta says.

Organisations now seem to be stuck, lacking a clear way to identify correct behaviour because they have no test harness that demonstrates whether the software is working as it should.

“Vector is tackling that in a couple of ways,” Paliotta says. “First, we are building analytics tools to see how adequately users have tested the existing code. Second, we are creating tools that can build test cases from the deployed code.

“We look at the logic to get the highest levels of code coverage we can. We see great value in that technology in terms of dealing with technical debt for the companies who don’t have the resources to go back and hand-build test cases.

“We capture what the code actually does as the test cases execute and all the output values for the minimum set of input values. That allows the tool to formalise what the code does. It doesn’t prove the code is correct, but customers often say ‘the software is deployed and running, so we’re happy with what it does’. But they know they can’t refactor because they don’t know deep down what it does,” Paliotta says.

“By building a set of test cases for the deployed code, they can be stored and used in the future as a baseline for tests. So, as I go forward and gradually refactor the code, I know when that code is changed, it hasn’t regressed.”
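The baseline idea Paliotta describes can be illustrated with a minimal sketch. It is not Vector's tooling: the function names (`legacy_scale`, `refactored_scale`, `capture_baseline`, `find_regressions`) and the input values are hypothetical, chosen only to show how observed outputs can be recorded once and then compared against a refactored version.

```python
def legacy_scale(raw: int) -> int:
    """Hypothetical deployed routine whose exact behaviour nobody documented."""
    if raw < 0:
        return 0
    if raw > 1023:
        raw = 1023
    return (raw * 100) // 1023


def capture_baseline(func, inputs):
    """Record what the code actually does: one observed output per input."""
    return {value: func(value) for value in inputs}


def find_regressions(func, baseline):
    """Return the inputs for which func no longer matches the recorded baseline."""
    return [value for value, expected in baseline.items() if func(value) != expected]


def refactored_scale(raw: int) -> int:
    """Refactored candidate that is meant to preserve the legacy behaviour."""
    clamped = min(max(raw, 0), 1023)
    return (clamped * 100) // 1023


# Illustrative inputs chosen to exercise each branch, including the boundaries.
INPUTS = [-5, 0, 1, 511, 1023, 1024, 5000]
BASELINE = capture_baseline(legacy_scale, INPUTS)

if __name__ == "__main__":
    # An empty list means the refactored code matches the baseline on every input.
    print(find_regressions(refactored_scale, BASELINE))
```

As the article notes, a baseline like this only formalises what the code does today. It says nothing about whether that behaviour is correct.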


A change in development processes will let organisations keep their future technical debt under control, Paliotta insists. An important starting point for the change is the adoption of code coverage. “It provides a guide to testing thoroughness. Without coverage, you don’t know how thoroughly you’ve tested. Even for those with very elaborate test environments, the first time they measure coverage, they are really surprised at how low the number is.

“The problem is that people often misunderstand code coverage. They say ‘if I have to check that every statement gets executed, that will make development as expensive as it is for the guys in aerospace’. But what I want you to do is look at requirements and analyse how well those have been covered. That means formalising what each component should do and testing against it,” he explains.

“For example, one requirement may be to implement a square root function. What am I supposed to do if I get a negative number or an invalid floating point number? What should the function return? How does that affect the overall application? The edge cases are important and may be missed by someone building tests based on the logic of the source code. You need test-driven development,” Paliotta asserts.
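A minimal sketch of what such requirement-driven tests might look like follows. The article leaves open what the function should do with bad input, so the rule used here (negative, NaN and infinite inputs raise `ValueError`) is purely an assumption for illustration, as are the names `checked_sqrt`, `rejects` and `run_requirement_tests`.

```python
import math


def checked_sqrt(x: float) -> float:
    """Square root with the edge cases decided up front.

    Assumed requirement (not from the article): negative, NaN and infinite
    inputs are rejected with ValueError rather than returning a value.
    """
    if math.isnan(x) or math.isinf(x):
        raise ValueError("input must be a finite number")
    if x < 0:
        raise ValueError("input must be non-negative")
    return math.sqrt(x)


def rejects(value: float) -> bool:
    """Return True if checked_sqrt refuses the value, as the requirement demands."""
    try:
        checked_sqrt(value)
    except ValueError:
        return True
    return False


def run_requirement_tests() -> None:
    # Nominal behaviour.
    assert checked_sqrt(0.0) == 0.0
    assert checked_sqrt(9.0) == 3.0
    assert math.isclose(checked_sqrt(2.0) ** 2, 2.0)
    # Edge cases taken from the requirement, not from reading the code.
    assert rejects(-1.0)
    assert rejects(float("nan"))
    assert rejects(float("inf"))


if __name__ == "__main__":
    run_requirement_tests()
    print("all requirement tests passed")
```

The point of the sketch is the order of work: the edge cases are written down as a requirement first, and the tests are derived from that requirement rather than from the branches that happen to exist in the source.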

With a large battery of tests put in place as early as possible – because many of the requirements were developed before coding started – regression testing can become the norm. This ensures that new functionality is tested as soon as the code is committed. And errors will be flagged sooner.

“As a developer, if I can get a bug report immediately and change it there and then, that’s OK. If the tests are not run until three months later, I have probably context switched away to something else. You don’t want to accumulate latent defects that are only found weeks or months later,” Paliotta notes.

With large applications, full regression testing for every commit can become expensive to run, he adds: “Some prospects say it takes two months to run the full test suite – that’s too long. They could use parallelism and run the tests on a server farm, but the problem with parallelisation is that it doesn’t work when many people need to run the test suites.

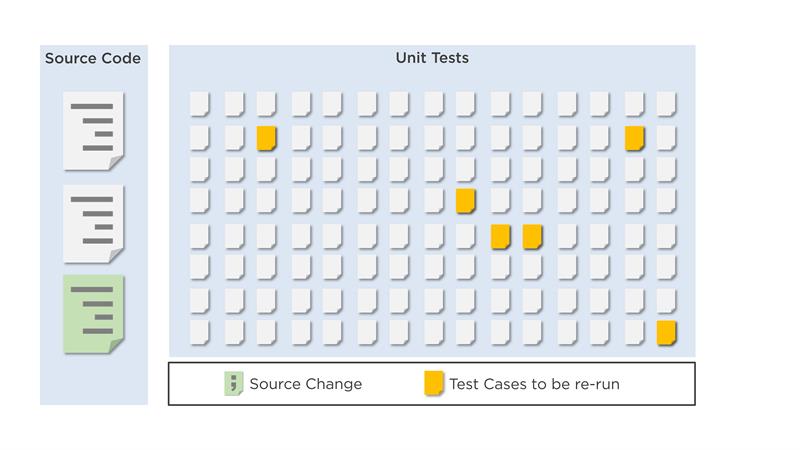

“Vector’s answer is change-based testing. You select a much smaller subset of tests based on the code changes. Because we record what is touched by the test cases, we can tell which direct and indirect tests are affected. By using that subset, you will reduce test times dramatically.
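The selection step behind change-based testing can be sketched in a few lines. This is an illustration under stated assumptions, not Vector's implementation: the coverage record, the file names and the `select_tests` function are all hypothetical, and the record is assumed to list every source unit a test executed, whether it reached that unit directly or through other code.

```python
# Hypothetical coverage record: test name -> source units it executed,
# whether it called them directly or reached them through other code.
COVERAGE = {
    "test_pid_step": {"pid.c", "math_utils.c"},
    "test_motor_ramp": {"motor.c", "pid.c", "math_utils.c"},
    "test_can_decode": {"can.c"},
    "test_logger": {"logger.c"},
}


def select_tests(coverage, changed_units):
    """Return, sorted, the tests whose recorded coverage overlaps the change set."""
    changed = set(changed_units)
    return sorted(name for name, touched in coverage.items() if touched & changed)


if __name__ == "__main__":
    # Only the tests that executed pid.c are selected for re-running.
    print(select_tests(COVERAGE, ["pid.c"]))
```

In this sketch, a change to `pid.c` selects two of the four tests. A change to a file that no recorded test touched selects nothing, so a practical tool would need a fallback for new or uncovered code, such as running the full suite.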

“For many companies, software has become a core competence as they build more intelligence into their products. Now, smart people are realising that almost every company is a software company and this means your core competency should be around building software efficiently. Some $300 billion is spent on bug fixing; would you not want 10% of that?” Paliotta asks.

By building test into the core infrastructure of development, organisations can not only recover the money wasted on pursuing bugs, but also deliver software that works correctly and is the result of a more efficient process.

Author profile:

John Paliotta is CTO of Vector Software

Using change-based testing enables developers to execute much smaller subsets of tests based on code changes, reducing testing time from days to minutes.
