Crucially, the team, which has been developing and evaluating demonstrators for a variety of end users such as the military and the healthcare and heritage sectors, emphasises the importance of understanding human factors when developing interactive technologies, whether wearable devices, headsets or games controllers, that must deliver realistic visuals, sounds and haptics.

Prof Stone, who is also a member of the Chartered Institute of Ergonomics and Human Factors (CIEHF), was one of the first Europeans to experience the NASA VIEW Virtual Reality system in the 1980s and established the UK’s industrial VR team at the National Advanced Robotics Centre. He was also credited by Russia’s Gagarin Cosmonaut Training Centre with introducing VR into the Russian space programme.

“The HITT was set up in Birmingham to build on those experiences, to help corporates better understand VR and to manage the hype around the concept that existed then, and which still does today,” Prof Stone explains.

Working with technical experts from the ESEE, the team has taken VR scenarios and developed bespoke interfaces for platforms ranging from drones to submersible devices.

VR is now more accessible and affordable and, from an industrial perspective, projects can be turned around much more quickly, according to Prof Stone. “Today, using a £700 laptop and free software, we can do work that would have cost hundreds of thousands of pounds just 10 years ago.”

However, he warns that hype and false promises are still prevalent in the VR sector.

“There are too many here-today, gone-tomorrow start-ups making the same mistakes we’ve seen in previous cycles, from naïve business models to overly optimistic sales projections. We are in the middle of another hype cycle,” he contends.

A variety of definitions are in circulation, but Prof Stone offers these: “VR is a totally computer-generated world, AR sees virtual models interacting with the real world and, while mixed reality is similar, it uses real-world objects to make the virtual more real.”

All three will have a role to play going forward, says Prof Stone, but although VR is actually a mature technology, the industry is “lacking believable real case studies. I think that is where the industry is being held back. We need to see more case studies and ignore industry speakers selling the same old messages. I’d like to see more industrial conferences where claims can be presented directly to end users and examined in detail.”

Collaborative initiatives

When HITT was set up, it worked closely with the UK’s Human Factors Integration Defence Technology Centre (HFI DTC) between 2003 and 2012. More recently it has been involved in collaborative initiatives in maritime defence and unmanned systems.

“This work has provided us with opportunities to work closely with stakeholders and end users in the development of methodologies focusing on human-centred design,” says Prof Stone. “Our research looks to avoid the technology-push failures that were so evident in the 1980s, ’90s and early 2000s by developing and evaluating demonstrators that emphasise the importance of the human context.”

HITT has a long-standing working relationship with the military in the UK.

“We helped to develop VR-based part-task trainers and a variety of innovative human interface concepts for simulation and tele-robotic systems,” explains Prof Stone.

Projects delivered include a desktop Minigun simulator; an Interactive Trauma Trainer for defence medics; EODSim, an urban planning tool for counter-IED activities; and SubSafe, a submarine safety and spatial awareness trainer, the results of which were incorporated into training facilities used by the Royal Navy’s Astute Class submarine fleet, as well as by the Canadian and Australian navies.

Human Factors Guidance for Designers of Interactive 3D and Games-Based Training Systems, an HITT publication, is still used for teaching and distils more than 25 years of human factors lessons learned from the team’s projects.

“When it comes to developing demonstrators, we are focused on using affordable technology that makes training more streamlined, portable and transferable, and which de-risks solutions,” Prof Stone notes.

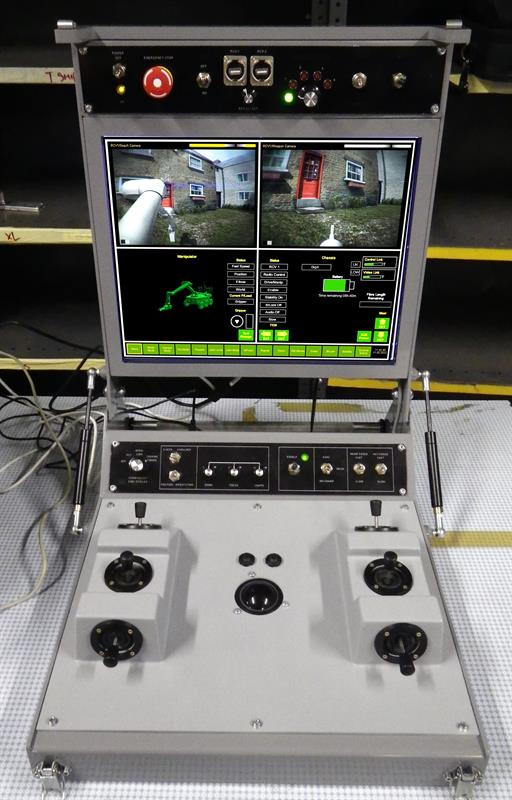

In 2012, the team developed a high-fidelity remote driving and manipulation simulator for CUTLASS, the British explosive ordnance disposal (EOD) telerobot system, and coordinated a small consortium that delivered 42 complete simulator units to the MoD. These were deployed in Northern Ireland, Cyprus, Gibraltar and mainland UK.

The team also worked closely with BAE Systems, investigating advanced mixed reality interfaces for future command and control systems and next-generation cockpit concepts.

“CUTLASS was an outstanding success for HITT,” says Prof Stone. “Using an Xbox and headset controller, we created a simulator that replaced outdated classroom training, which was failing to equip trainees with adequate skills and system awareness. The simulator helped them to appreciate the capabilities and limitations of the vehicles they were to operate.”

The simulator enabled trainees to adapt more readily to controlling the vehicles and their manipulators remotely, and to understand the vehicles’ limits.

“As well as a highly realistic virtual urban street scene and house interior, a physical replica of the CUTLASS console was constructed, containing accurate representations and locations of the key components, including the manipulator mode selection areas on a touch screen,” Prof Stone explains.

HITT developed a high-fidelity remote driving and manipulation simulator for CUTLASS, the British explosive ordnance disposal telerobot

Users' needs come first

When it comes to developing simulators, the technology should come second to the users’ needs, according to Prof Stone.

“You need to understand the end user, experience what they do and look out for gaps and mistakes, all of which will help to create a more realistic training experience and one that transfers those learned skills into the real environment. The human factor is critical here. Too many companies believe that placing sensors into a glove or a headset will solve their training problems; the user is simply an afterthought.”

When developed according to strong human-centred design principles, VR and AR interfaces can present a wide range of information sources to end users in appropriate forms: from gesture-selectable windows containing readable or audio-presented text (e.g. manuals), static images, and instructional videos or records of previous incidents, to complete 3D reconstructions with a range of interactive elements.

'Intelligent avatars' can also help to direct the end user’s attention to critical elements of the scene or to other relevant features.

One system developed by HITT was used to evaluate advanced human interaction techniques in a command and control context for BAE Systems. The end user’s motions and gestural input commands were tracked by a motion capture system, and the captured data was used to control, in real time, the display of a range of different types of tactical information.

These included a cityscape that appeared to exist in 3D on an otherwise empty “command table”; the locations of multiple unmanned air vehicles (UAVs); 'floating' menu screens that enabled the user to tailor the amount and quality of the data being presented; and simulated aerial 3D 'keyhole'/laser sensor scans of suspected terrorist assets. An avatar provided additional spoken information and interface configuration support.

“In 30 years of working in this field, I’ve never been more excited than I am today,” says Prof Stone. “Today, we can put technology in the back of a van, fly drones with a range of sensors in an afternoon and create imagery from that data in the evening. It is so much more exciting and immediate than just a few years ago.”