What’s different about developing code for internet of things (IoT) devices? At one level, not very much. But when you consider what they fit into, the situation looks a lot more complex.

An individual device may perform relatively simple operations but form part of a complex system of systems. Each device needs to be easily accessible, yet it must not only be protected against hacking but also avoid giving a hacker an easy way into the wider network. As part of that focus on security, it must also be able to receive patches in the form of over-the-air (OTA) updates.

The need to maintain some guaranteed level of security, deal with a variety of use-cases and design systems that can cooperate when thousands of devices are involved introduces problems. These are not necessarily down to bugs in the embedded code but to assumptions about behaviour that do not scale well, or that can even lead to deadlocks and devices turning into electronic bricks.

One way to approach such systems is to move to agile development, in which the system starts with a minimal set of functions but is architected so that more and more capabilities can be added over time. "The fundamental premise of agile is getting something out there you can ship," says medical-device software consultant Jeff Gable, who co-hosts the Agile Embedded podcast with embedded-development consultant Luca Ingianni.

To streamline their agile processes, the cloud-computing community came up with the concept of “devops”: the automation of almost the complete build pipeline. It's an approach that led to the often-cited example of Amazon's deployment system: platform-analysis director Jon Jenkins claimed a decade ago that developers there were deploying updates somewhere on the web-services and retail giant's systems every 11.6 seconds during weekdays.

In a typical devops pipeline, once code is checked in, it is queued for batches of unit tests and static analysis. Some of these can run immediately, while others wait for nightly downtime, all under the management of automation tools such as the open-source Jenkins automation server. If the tests pass, the result might be an automated nightly build that can be deployed for system-level testing or, if it is a release candidate, issued for deployment.
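The staging described above can be modelled in a few lines. This is a minimal, tool-agnostic sketch that assumes two hypothetical schedules ("commit" and "nightly") and placeholder stages standing in for real test and analysis jobs; it is not Jenkins configuration, and a real pipeline would express the same gating in its automation tool's own syntax.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    schedule: str            # hypothetical: "commit" runs on every check-in, "nightly" waits for the overnight run
    run: Callable[[], bool]  # returns True if the stage passed


def run_pipeline(stages: List[Stage], trigger: str, release_candidate: bool = False) -> str:
    """Run the stages due for this trigger and decide what happens to the build."""
    # A nightly run executes every stage; a commit run executes only the quick ones.
    due = [s for s in stages if trigger == "nightly" or s.schedule == "commit"]
    for stage in due:
        if not stage.run():
            # Stop at the first failure rather than letting problems pile up.
            return f"failed: {stage.name}"
    if trigger != "nightly":
        return "passed: awaiting nightly run"
    return "release" if release_candidate else "deploy to system test"


if __name__ == "__main__":
    stages = [
        Stage("unit-tests", "commit", lambda: True),
        Stage("static-analysis", "commit", lambda: True),
        Stage("long-regression", "nightly", lambda: True),
    ]
    print(run_pipeline(stages, "commit"))   # passed: awaiting nightly run
    print(run_pipeline(stages, "nightly"))  # deploy to system test
```

Stopping at the first failing stage mirrors the discipline the pipeline relies on: a broken build is surfaced immediately instead of being carried forward into the nightly run.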

A need for discipline

Devops for agile requires discipline, says Gable, with everything in the pipeline made available through scriptable command-line tools. If a step needs someone to open the graphical interface of an IDE, it is not going to work in a devops pipeline.

That discipline can be tough to achieve, especially if static-analysis tools throw out a stream of warnings because of a problem that is not easy to fix immediately. But ignoring warnings or tolerating mysteriously failing build processes tends to store up problems that will hurt schedules later, when deadlines become more pressing. Proponents claim that, as long as teams adhere to the discipline, they will see tangible benefits. Many bugs – assuming tests are hitting a decent number of coverage points – will be ironed out quickly, and automated regression tests ensure they do not creep back in without warning.

"We definitely see that customers in all embedded market segments are moving towards a more devops-oriented way of working," says Anders Holmberg, CTO of tools provider IAR Systems. "It is maybe not so much driven by the ability to deliver OTA updates in itself, but rather as a way of optimising the ways of working and utilising tool investments in the best possible way across an organisation. A side effect of this is of course the ability to respond faster to security issues and roll out updates to users in a controlled manner."

Since the introduction of devops, its scope has begun to expand to encompass security, under the even more ungainly contraction “devsecops”. This is not simply a bit of PowerPoint engineering in which everyone agrees to think a bit about security when checking in some code.

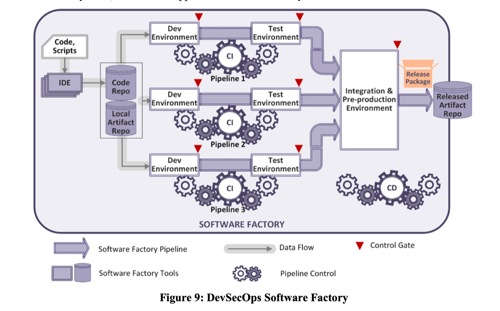

In 2019, the US Department of Defense published its own recommendations for devsecops in critical systems, which Wind River, among others, uses to inform its work on devops pipelines for embedded and IoT systems.

The core idea of devsecops is to use the same test-driven pipeline to make security awareness a core part of application development instead of performing analysis on the final code when it might be too late to make important changes. Static analysis can look for common problems such as vulnerability to buffer overflows.
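As a very crude illustration of the kind of check a pipeline can run on every commit, the sketch below flags calls to C library functions that cannot bound how much they write, a common route to buffer overflows. It is pattern matching, not true static analysis: the function list is a hypothetical, deliberately short example, the scan will also flag matches inside comments and string literals, and it cannot follow data flow the way dedicated analysers do.

```python
import re
from typing import List, Tuple

# Illustrative, deliberately incomplete list of C calls that cannot limit how much they write.
UNBOUNDED_CALLS = ("gets", "strcpy", "strcat", "sprintf")
# \b stops bounded variants such as fgets or vsprintf from matching; \s*\( requires a call.
PATTERN = re.compile(r"\b(" + "|".join(UNBOUNDED_CALLS) + r")\s*\(")


def scan_c_source(text: str) -> List[Tuple[int, str]]:
    """Return (line number, function name) for each flagged call in a C source string."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in PATTERN.finditer(line):
            findings.append((lineno, match.group(1)))
    return findings


if __name__ == "__main__":
    sample = (
        "char buf[8];\n"
        "strcpy(buf, input);\n"
        "snprintf(buf, sizeof buf, \"%s\", input);\n"
    )
    # A pipeline would fail the build if this list is non-empty.
    print(scan_c_source(sample))  # [(2, 'strcpy')]
```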

The chain can also prepare binaries to be checked at runtime by automatically signing code modules, and possibly even encrypting them, during every build, even when the project is at an early stage. Catering for this early on can help iron out problems with deploying to secure hardware. It also supports the ability to audit code for evidence of possible compromises in the source.
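The sign-on-every-build idea can be sketched with Python's standard-library HMAC as a stand-in. That substitution is an assumption made for brevity: production secure-boot chains normally use public-key signatures, so that a device holds only a public key, whereas with HMAC the device would need the same secret as the build server. The key below is a placeholder, and nothing here reflects how C-Trust or any other named product works.

```python
import hashlib
import hmac

# Placeholder only: a real pipeline would fetch keys from a secure store, never hard-code them.
BUILD_KEY = b"example-key-do-not-use"


def sign_image(image: bytes, key: bytes = BUILD_KEY) -> bytes:
    """Build side: compute an authentication tag over the firmware image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_image(image: bytes, tag: bytes, key: bytes = BUILD_KEY) -> bool:
    """Device side: recompute the tag and compare in constant time before running the image."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    firmware = b"\x00\x01\x02 application v1.2"
    tag = sign_image(firmware)
    print(verify_image(firmware, tag))            # True
    print(verify_image(firmware + b"\x90", tag))  # False: the image was modified after signing
```

Running this on every build, rather than only at release, means the verification path on the device is exercised from the start of the project.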

Holmberg says the security modules provided by IAR's Secure Thingz subsidiary, which include the C-Trust code-encryption system, can be driven from the command line and so integrated into a devops pipeline.

One issue with devops in the embedded and IoT world is that developers do not commonly have the benefits of software containers that make it easy to deploy binaries to a wide variety of servers and expect them to run cleanly. Hardware differences play a much larger role and can easily dominate testing.

Simulation testing

For many of the builds that make it through the pipeline, testing code on the final target hardware may be a poor choice because of the time it takes to update the flash memory and the difficulty of performing the kinds of regression tests that underpin continuous integration.

Instead, simulations on workstations or cloud servers can take on more of the load for tests performed after commits or during overnight runs. The hardware itself ends up reserved for situations where engineers want to work with real-time, real-world I/O in hardware-in-the-loop setups. In the middle sit so-called board farms, which are similar to the device farms that mobile-app teams use to test across a range of smartphones. Board farms house hardware resembling the target, organised into racks that can be accessed remotely.
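One way to picture this split is as a routing rule that sends each test to the cheapest target able to run it meaningfully. The sketch below is purely illustrative: the test attributes and target names are hypothetical and are not drawn from any particular board-farm tool.

```python
from dataclasses import dataclass


@dataclass
class TestSpec:
    name: str
    needs_real_time_io: bool = False  # hypothetical flag: real-world sensors or actuators in the loop
    needs_real_silicon: bool = False  # hypothetical flag: behaviour a processor model may not capture


def choose_target(test: TestSpec) -> str:
    """Route a test to the cheapest target that can still run it meaningfully."""
    if test.needs_real_time_io:
        return "hardware-in-the-loop"
    if test.needs_real_silicon:
        return "board farm"
    return "simulator"


if __name__ == "__main__":
    for t in (
        TestSpec("parser-unit-test"),
        TestSpec("flash-driver", needs_real_silicon=True),
        TestSpec("motor-control-loop", needs_real_time_io=True),
    ):
        print(t.name, "->", choose_target(t))
```

Defaulting to the simulator keeps most post-commit and overnight runs off scarce hardware, which is the point of the split described in the text.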

Though board-farm users have largely had to construct their own infrastructure for managing the hardware, some companies have started to push for some level of standardisation.

Timesys and Sony, for example, have proposed an application programming interface (API) for sending and retrieving data from board-farm devices.

For the virtual targets that run on servers, there has been a lot of cross-pollination from the electronic design automation (EDA) community, where simulation is the mainstay of development.

“When we started 12 or 13 years ago, everyone was doing simulation for hardware to get the SoC to work,” says Simon Davidmann, president of Imperas, a company that creates software models of processor cores for simulations. “We founded Imperas to bring these EDA technologies into the world of the software developers.”

Arm has similarly supported SoC designers with device models and has started moving into direct support for IoT development with its Arm Virtual Hardware programme, launched at its annual developer conference last autumn. The programme currently covers the Arm Cortex-M55, and Arm may extend it to other cores in the family in the future.

Devops for embedded and the IoT is still in its early days, and adopting it takes significant upfront effort, though consultancies such as Dojo Five and toolchain suppliers such as IAR and Wind River are building support into their offerings. But the automation of builds and analysis is another technique from the cloud that can prevent IoT projects from being overwhelmed by complexity.