Artificial Intelligence (AI) is pervading almost every aspect of our everyday lives, yet the adoption of AI and machine learning (ML) in the home is still at an early phase, and the potential is vast.

New devices and appliances with increasing AI capabilities are released into the market every year. The data generated by these devices enables device makers to learn user habits and predict future usage patterns using ML algorithms, thereby delivering an improved user experience.

In a future smart home, AI could automatically control lights, appliances, and consumer gadgets by predicting routines and being contextually aware. For example, a smart thermostat will be able to learn the preferences of different people in the household, recognize their presence from their voice signatures, and adjust the temperature locally based on each individual’s usage history. Similarly, a smart washer, in addition to offering voice control, will be able to automatically sense load imbalances or water leaks and adjust its settings or send alerts to prevent failures.

Processing at the Edge

While AI has the potential to positively impact almost every aspect of home life, some users may be wary of it because of privacy and other concerns. These concerns are amplified when a user’s personal data is sent to the cloud for processing; there have been several data breaches in which hackers intercepted and stole consumers’ private data. These concerns, along with bandwidth and latency constraints, are leading many device manufacturers to use edge processors that run ML tasks locally on the device. Several market research reports suggest that edge processor shipments will increase by more than 25%, driven by the adoption of edge-based ML.

Several classes of ML algorithms can be used to make devices in a smart home ‘smart’. In most applications, the algorithms recognize the user and the user’s actions, and learn behaviour in order to execute tasks automatically or provide recommendations and alerts. In ML parlance, recognizing the user or the user’s actions is a classification problem. In this article, we focus specifically on audio source classification.

Advanced Audio and Speech Recognition

Smart home devices and appliances with advanced audio and speech recognition can use acoustic scene classification, and the detection of sound events within a scene, to recognize users, receive commands, and invoke actions. User activity at home produces a rich set of acoustic signals, including speech. While speech is the most informative sound, other acoustic events often carry useful information too: laughing or coughing during speech, a baby crying, an alarm going off, or a door opening can all provide data that drives intelligent actions.

The process of event recognition is based on feature extraction and classification. Several approaches for audio event (AE) recognition have been published in recent literature. The fundamental principle behind these approaches is that a distinct acoustic event has features that are dissimilar from the features of the acoustic background.
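To make the idea of feature extraction concrete, here is a minimal sketch in Python with NumPy. It assumes two simple frame-level features chosen purely for illustration, short-time log energy and spectral centroid; production systems typically use richer features such as log-mel spectrograms. The function names, frame sizes, and the synthetic "beep" are hypothetical. The point is the principle stated above: frames containing a distinct event have features that differ clearly from those of the background.

```python
import numpy as np

def frame_signal(x, frame_len=400, hop=160):
    """Split a 1-D signal into overlapping frames (25 ms frames, 10 ms hop at 16 kHz)."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]                                   # shape (n_frames, frame_len)

def frame_features(x, sr=16000, frame_len=400, hop=160):
    """Per-frame features: [log10 energy, spectral centroid in Hz]."""
    frames = frame_signal(x, frame_len, hop) * np.hanning(frame_len)
    log_energy = np.log10(np.sum(frames ** 2, axis=1) + 1e-10)
    mag = np.abs(np.fft.rfft(frames, axis=1))
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sr)
    centroid = (mag @ freqs) / (mag.sum(axis=1) + 1e-10)
    return np.stack([log_energy, centroid], axis=1)

# Toy input: 1 s of quiet background noise followed by a 0.5 s, 2 kHz "beep".
rng = np.random.default_rng(0)
sr = 16000
background = 0.01 * rng.standard_normal(sr)
t = np.arange(sr // 2) / sr
event = 0.5 * np.sin(2 * np.pi * 2000 * t) + 0.01 * rng.standard_normal(t.size)
feats = frame_features(np.concatenate([background, event]), sr)

# Compare frames lying fully inside each region (frame i starts at sample i * 160).
print("background:", feats[:90].mean(axis=0))
print("event:     ", feats[105:].mean(axis=0))
```

The background frames show low energy and a centroid near the middle of the band (white noise), while the event frames show far higher energy and a centroid pulled towards 2 kHz, so even two features separate foreground from background in this toy case.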

Audio source classification algorithms detect and identify acoustic events. The process is split into two phases: detection of an acoustic event, followed by classification. Detection first discerns foreground events from the background audio; only then is the classifier, which categorizes the sound, turned on.
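The two-phase flow can be sketched as follows, assuming a simple energy-based detector with an adaptive background estimate (one common approach, not necessarily what any particular vendor uses). The "classifier" is a deliberately trivial, hypothetical stand-in that matches the dominant frequency against two made-up class prototypes (`PROTOTYPES_HZ`); in a real device this is where a trained model would run. The threshold (`threshold_db`) and smoothing factor (`alpha`) are illustrative values.

```python
import numpy as np

def frame_energy_db(x, frame_len=400, hop=160):
    """Mean power of each frame in dB."""
    n = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop:i * hop + frame_len] for i in range(n)])
    return 10 * np.log10(np.mean(frames ** 2, axis=1) + 1e-12)

def detect_events(energy_db, threshold_db=10.0, alpha=0.95):
    """Phase 1: flag frames that rise threshold_db above an adaptive background level."""
    background = energy_db[0]            # assumes the clip starts with background audio
    active = np.zeros(len(energy_db), dtype=bool)
    for i, e in enumerate(energy_db):
        if e > background + threshold_db:
            active[i] = True             # foreground: leave the background estimate alone
        else:
            background = alpha * background + (1 - alpha) * e   # track slow drift
    return active

# Hypothetical target classes, each described only by a dominant frequency.
PROTOTYPES_HZ = {"alarm_beep": 3000.0, "door_knock": 200.0}

def classify(segment, sr=16000):
    """Phase 2 stand-in: nearest prototype by dominant frequency."""
    spectrum = np.abs(np.fft.rfft(segment))
    peak_hz = np.fft.rfftfreq(len(segment), d=1.0 / sr)[np.argmax(spectrum)]
    return min(PROTOTYPES_HZ, key=lambda name: abs(PROTOTYPES_HZ[name] - peak_hz))

def process(x, sr=16000, frame_len=400, hop=160):
    """Run the classifier only on segments the detector marks as foreground."""
    idx = np.flatnonzero(detect_events(frame_energy_db(x, frame_len, hop)))
    if idx.size == 0:
        return []                        # nothing detected: the classifier never runs
    # Group consecutive active frames into segments.
    segments = np.split(idx, np.flatnonzero(np.diff(idx) > 1) + 1)
    results = []
    for seg in segments:
        start, end = seg[0] * hop, seg[-1] * hop + frame_len
        results.append((start / sr, end / sr, classify(x[start:end], sr)))
    return results

rng = np.random.default_rng(1)
sr = 16000
noise = 0.01 * rng.standard_normal(int(1.75 * sr))
beep = np.zeros_like(noise)
n = np.arange(sr // 4)
beep[sr:sr + n.size] = 0.5 * np.sin(2 * np.pi * 3000 * n / sr)   # 0.25 s beep at 1.0 s
events = process(noise + beep, sr)
print(events)   # one (start_s, end_s, label) tuple per detected segment
```

The design point is the gating: the cheap detector runs on every frame, while the more expensive classifier runs only on detected segments, which matters for power on an always-listening edge device.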

Future smart home devices are expected to combine audio event recognition with automatic speech recognition (ASR) to drive intelligent actions.

A variety of signal processing and machine learning techniques have been applied to the problem of audio classification, including matrix factorization, dictionary learning, wavelet filterbanks, and most recently neural networks.

Convolutional neural networks (CNNs) have gained popularity because they can learn and identify patterns that are representative of different sounds, even when part of a sound is masked by other sources such as noise. However, CNNs depend on the availability of large amounts of labelled data for training.

While large speech corpora exist thanks to the large-scale adoption of ASR in mobile devices and smart speakers, labelled datasets for non-speech environmental audio are relatively scarce. Several new datasets have been released in recent years, and the corpus of non-speech acoustic events is expected to keep growing as smart home devices are more widely adopted.

Acoustic Event Recognition Software and Tools

Audio event recognition software using source classification is available through multiple algorithm vendors including Sensory, Audio Analytic, and Edge Impulse to name a few. These vendors provide a library of sounds on which models can be pre-trained, while also providing a toolkit for building models and recognizing custom sounds. When implementing audio event recognition on an edge processor, the trade-off between power consumption and accuracy must be carefully considered.

Multiple open-source libraries and models are also available. Here, we present results for audio event classification based on YAMNet ("Yet another Audio Mobilenet Network"), an open-source, pre-trained model available on TensorFlow Hub. YAMNet has been trained on the AudioSet corpus, drawn from millions of YouTube videos, to predict 521 audio event classes.
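YAMNet does not return a single label per clip: it scores every audio event class for each analysis window (0.96 s windows with a 0.48 s hop on 16 kHz mono audio). Turning those frame-level scores into a clip-level decision is a small but necessary post-processing step. The sketch below averages scores over time and keeps the top-k classes above a cut-off; `clip_prediction`, `top_k`, and `min_score` are hypothetical names and values, and the score matrix is a toy example rather than real model output. The commented lines show roughly how the scores are obtained in the TensorFlow Hub tutorial; they are not executed here.

```python
import numpy as np

# In practice, frame_scores would come from YAMNet (requires tensorflow and
# tensorflow_hub; not executed here), roughly as in the TensorFlow Hub tutorial:
#   model = hub.load("https://tfhub.dev/google/yamnet/1")
#   scores, embeddings, log_mel = model(waveform)  # 16 kHz mono float32 in [-1, 1]
#   class names come from the CSV at model.class_map_path()

def clip_prediction(frame_scores, class_names, top_k=3, min_score=0.1):
    """Average per-frame class scores over time and return the top-k labels."""
    mean_scores = np.asarray(frame_scores).mean(axis=0)
    order = np.argsort(mean_scores)[::-1][:top_k]      # highest mean score first
    return [(class_names[i], float(mean_scores[i]))
            for i in order if mean_scores[i] >= min_score]

# Toy scores: 3 frames x 3 classes (a real YAMNet output has 521 columns).
names = ["Speech", "Baby cry", "Door"]
scores = np.array([[0.1, 0.8, 0.05],
                   [0.2, 0.7, 0.10],
                   [0.1, 0.9, 0.00]])
result = clip_prediction(scores, names)
print(result)
```

Averaging is the simplest aggregation; taking the maximum over frames instead favours short, transient events such as a door slam, at the cost of more false alarms.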

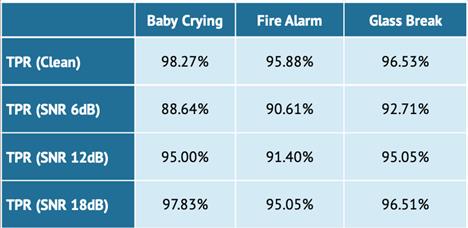

YAMNet is based on the MobileNet v1 architecture, whose depthwise-separable convolutions make it well suited to embedded applications, and it can serve as a good baseline for application developers. The figure above shows simulation results for a simple YAMNet classifier that is less than 200 KB in size. The results show that even such a small classifier can deliver sufficient accuracy for detecting a few common audio events, both in clean conditions and in the presence of noise.
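Why MobileNet-style networks suit embedded targets can be shown with a quick multiply-accumulate (MAC) count. A standard k x k convolution costs H_out x W_out x C_in x C_out x k^2 MACs; a depthwise-separable convolution splits this into a k x k depthwise step (H_out x W_out x C_in x k^2) and a 1 x 1 pointwise step (H_out x W_out x C_in x C_out), so the cost ratio is 1/C_out + 1/k^2. The layer dimensions below are assumed for illustration and are not taken from YAMNet.

```python
def standard_conv_macs(h_out, w_out, c_in, c_out, k):
    """MACs for a k x k convolution producing an h_out x w_out x c_out output."""
    return h_out * w_out * c_in * c_out * k * k

def depthwise_separable_macs(h_out, w_out, c_in, c_out, k):
    """MACs for a k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = h_out * w_out * c_in * k * k     # one k x k filter per input channel
    pointwise = h_out * w_out * c_in * c_out     # 1 x 1 convolution mixes channels
    return depthwise + pointwise

# Illustrative layer shape (assumed; not taken from YAMNet).
h_out, w_out, c_in, c_out, k = 12, 12, 256, 256, 3
std = standard_conv_macs(h_out, w_out, c_in, c_out, k)
sep = depthwise_separable_macs(h_out, w_out, c_in, c_out, k)
print(f"standard:  {std:,} MACs")        # standard:  84,934,656 MACs
print(f"separable: {sep:,} MACs")        # separable: 9,768,960 MACs
print(f"reduction: {std / sep:.1f}x")    # reduction: 8.7x
```

For 3 x 3 kernels and wide layers, the saving approaches a factor of about 9, which is a large part of why small MobileNet-derived classifiers fit within edge power budgets.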

As can be seen, the TPR (True Positive Rate, the fraction of actual events that are correctly detected) of the model increases with the SNR (signal-to-noise ratio) of the signal. The data presented above is meant only as a high-level illustration of the concept. In practice, application developers spend many hours training and optimizing these models to detect their target sounds accurately under their own test conditions.
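A TPR-versus-SNR evaluation of this kind rests on two small pieces: mixing a clean event with noise at a controlled SNR, and counting detections. The sketch below shows both under simple assumptions (power measured over the whole clip, toy labels); the function names are hypothetical, and the classifier that would produce the predictions is not shown. An evaluation sweep would mix each test clip at several SNR values, run the model, and compute the TPR at each SNR.

```python
import numpy as np

def mix_at_snr(clean, noise, snr_db):
    """Add noise scaled so that 10*log10(P_clean / P_noise) equals snr_db."""
    p_clean = np.mean(clean ** 2)
    p_noise = np.mean(noise ** 2)
    scale = np.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10)))
    return clean + scale * noise

def true_positive_rate(y_true, y_pred):
    """TPR = TP / (TP + FN): the fraction of actual events that were detected."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = np.sum(y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    return tp / (tp + fn)

rng = np.random.default_rng(0)
clean = 0.5 * np.sin(2 * np.pi * 440 * np.arange(16000) / 16000)
noise = rng.standard_normal(16000)
noisy = mix_at_snr(clean, noise, snr_db=5.0)
measured_snr = 10 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
print(round(measured_snr, 6))                                       # 5.0

# Toy labels: 4 clips contain the event, 2 do not; one event is missed.
tpr = true_positive_rate([1, 1, 1, 1, 0, 0], [1, 1, 0, 1, 1, 0])
print(tpr)                                                          # 0.75
```

Note that the false alarm on the fifth clip does not affect TPR; it would show up in the false positive rate, which is usually reported alongside TPR.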

The computational blocks illustrated in Figure 1 are key components of the audio processing chain in a smart home system. These tasks are often executed with ML algorithms, which in turn rely heavily on matrix operations. Depending on the application, hundreds of millions of multiply-add operations may need to be executed, so the ML processor must have a fast and efficient matrix multiplier as its main computational engine.
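One reason a matrix multiplier can serve as the main engine is that a convolution layer can be rewritten as a single matrix multiply by unfolding input patches into columns, a technique commonly called im2col. The sketch below assumes a single multi-channel input, stride 1, and no padding; the function names are ours, and the result is checked against a direct nested-loop convolution. It also counts the MACs the multiply performs.

```python
import numpy as np

def im2col(x, k):
    """Unfold k x k patches of x (C, H, W) into a (C*k*k, H_out*W_out) matrix."""
    c, h, w = x.shape
    h_out, w_out = h - k + 1, w - k + 1
    cols = np.empty((c * k * k, h_out * w_out), dtype=x.dtype)
    row = 0
    for ch in range(c):
        for i in range(k):
            for j in range(k):
                cols[row] = x[ch, i:i + h_out, j:j + w_out].reshape(-1)
                row += 1
    return cols, h_out, w_out

def conv2d_as_matmul(x, weights):
    """Valid, stride-1 convolution (cross-correlation, as in CNNs) via one matrix multiply.

    x: (C_in, H, W); weights: (C_out, C_in, k, k) -> output (C_out, H_out, W_out).
    """
    c_out, c_in, k, _ = weights.shape
    cols, h_out, w_out = im2col(x, k)
    w_mat = weights.reshape(c_out, c_in * k * k)   # each row is one flattened filter
    out = w_mat @ cols                              # all multiply-adds happen here
    macs = c_out * (c_in * k * k) * (h_out * w_out)
    return out.reshape(c_out, h_out, w_out), macs

def conv2d_direct(x, weights):
    """Reference nested-loop convolution for checking the matmul version."""
    c_out, _, k, _ = weights.shape
    _, h, w = x.shape
    out = np.zeros((c_out, h - k + 1, w - k + 1))
    for o in range(c_out):
        for p in range(h - k + 1):
            for q in range(w - k + 1):
                out[o, p, q] = np.sum(weights[o] * x[:, p:p + k, q:q + k])
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 10, 8))        # e.g. 4 channels of a small spectrogram patch
wts = rng.standard_normal((6, 4, 3, 3))    # 6 filters of size 3 x 3
y, macs = conv2d_as_matmul(x, wts)
print(y.shape, macs)                       # (6, 8, 6) 10368
print(np.allclose(y, conv2d_direct(x, wts)))
```

Scaling the same arithmetic to realistic layer sizes and dozens of layers is how per-inference workloads reach the hundreds of millions of multiply-adds mentioned above.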

Conclusion

Today’s smart home devices will become both smarter and more useful as the challenges of audio source classification are solved by audio edge processors designed specifically for advanced audio and machine learning applications.

These edge processors will make smart home devices and appliances more secure and more personal, which will help accelerate the adoption of smart home technology.

Author details: Raj Senguttuvan is Director, Strategic Marketing, and Vikram Shrivastava is Sr. Director, IoT Marketing, for Knowles