When he was a student in the mid-1990s, Paul Kocher tried to support his veterinary studies at Stanford University with consultancy work in what was then a side interest: cryptography. As the dot-com boom took hold, so too did the work of discovering flaws in clients’ security mechanisms.

A presentation at the university on using differences in inputs to try to detect the changes made by encryption algorithms led him to try out new types of attack. While his PC was not up to the task, he realised that bigger differences might be found in how long encryption algorithms took to do each job.

Kocher put into action an idea that first emerged more than 40 years earlier. In 1949, US Armed Forces Security Agency staffer Ryon Page noticed the changes in sound made by rotor-based cipher machines as they worked and thought it might be possible to recover plain text from the noises alone. Sound, however, did not emerge as an attack vector until 2013, when cryptographer Adi Shamir developed an attack based on the subtle warbling of a power supply transformer.

Kocher focused on differences in timing; by comparing the time it took to complete each of a thousand or so encryptions, an attacker could discover the supposedly private key they used.
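To see why running time can betray a key, consider a minimal Python sketch. It assumes a textbook square-and-multiply modular exponentiation, which the article does not name, and it counts multiplications as a stand-in for elapsed time. The function name `modexp_leaky` is hypothetical.

```python
def modexp_leaky(base, exponent, modulus):
    """Left-to-right square-and-multiply.

    Returns (result, ops), where ops counts modular multiplications and
    serves as a crude proxy for running time (an assumption of this sketch).
    """
    result = 1
    ops = 0
    for bit in bin(exponent)[2:]:
        # Every exponent bit costs one squaring.
        result = (result * result) % modulus
        ops += 1
        if bit == "1":
            # Only 1-bits cost an extra multiply: this is the leak.
            result = (result * base) % modulus
            ops += 1
    return result, ops


# Two 4-bit exponents give different operation counts.
_, ops_heavy = modexp_leaky(7, 0b1111, 1009)
_, ops_light = modexp_leaky(7, 0b1000, 1009)
print(ops_heavy, ops_light)
```

The count depends on how many 1-bits the secret exponent contains. A real attack has to extract that dependence statistically from many noisy timings rather than read it off directly.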

Today, the variety and scope of attacks have exploded and all manner of eavesdropping channels – known generically as side channels – are now in use. Power, electromagnetic emission and even sound can reveal enough about the internal operation of a system to break encryption keys, although these attacks often rely on more sophisticated analysis of tiny changes in performance over time to try to glean information among the inevitable surrounding noise.

Timing remains the most pervasive form of attack, largely because of the rise of the internet. Early attacks relied on physical access to devices that were, for the most part, never going to be connected to a two-way network. One example lay in pay-TV, where breaking the keys could lead to millions in sales of counterfeit cards. The rise of the smartcard led to other financial targets, which then needed to build in stronger defences.

“There is no market where people take security more seriously than payment,” says Don Loomis, vice president of micros, security and software at Maxim Integrated.

The internet, however, provided a link between side-channel attacks and remote-controlled spyware. And it supplies a much richer variety of potential victims in industries where the attention to attacks based on side channels is far more limited. In these attacks, time is not necessarily the leakiest side channel, but it is the easiest to access.

In a typical cloud-based attack, some form of spyware is uploaded to a cloud server, where it monitors how other applications running on the same blade use caches and memory. An attack such as Prime+Probe, developed a decade ago by Dag Arne Osvik and colleagues from the Weizmann Institute of Science in Israel, has the spyware fill a cache bank with its own data and then watch how the activity of the victim program displaces different cache lines.

Although spyware will usually be prevented from reading cache lines it does not own, it can probe which lines have been altered by a different task by timing how long it takes to access data from its own memory pool. If a read takes a long time because data needs to be pulled back from main memory, it demonstrates the other process evicted its data in order to change one of the targeted variables. Changes in access patterns outline to the spyware the internal decisions the victim program is making and so let it trace execution without having to see the data the victim is processing.
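The prime-then-probe sequence can be illustrated with a toy simulation. This is a sketch under heavy assumptions: a direct-mapped cache of eight lines, made-up latencies of 1 and 100 time units, and hypothetical names (`ToyCache`, `prime_probe`, `make_victim`). Real caches are set-associative and real measurements are noisy.

```python
class ToyCache:
    """Direct-mapped cache model; each line remembers (owner, address)."""

    def __init__(self, num_sets):
        self.lines = [None] * num_sets

    def access(self, owner, address):
        """Touch an address and return a simulated latency."""
        index = address % len(self.lines)
        hit = self.lines[index] == (owner, address)
        self.lines[index] = (owner, address)  # fill or refresh the line
        return 1 if hit else 100  # assumed hit and miss costs


def prime_probe(cache, victim_step):
    """Return the cache sets in which the spy's data was evicted."""
    num_sets = len(cache.lines)
    for addr in range(num_sets):  # prime: fill every set with spy data
        cache.access("spy", addr)
    victim_step(cache)  # the victim runs in between
    # Probe: a slow re-read means the victim displaced that line.
    return [addr for addr in range(num_sets) if cache.access("spy", addr) > 1]


def make_victim(secret_index, table_base=64):
    """Victim whose single memory access depends on a secret value."""
    def step(cache):
        cache.access("victim", table_base + secret_index)
    return step


print(prime_probe(ToyCache(8), make_victim(5)))
```

The spy never reads the victim's data. It learns only which of its own lines became slow, and in this toy model that is enough to pin down the secret table index.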

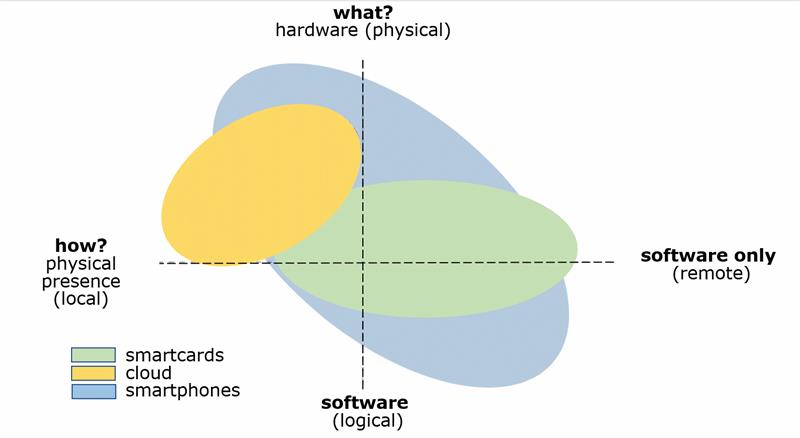

Now side-channel attacks have shifted to the smartphone. Veelasha Moonsamy, a researcher at Radboud University, says devices like smartphones have properties that make them vulnerable not just to the kinds of local attacks that plague smartcards and traditional embedded systems but also to those used to exploit cloud applications (see fig 1). And there is a much richer pool of leaky channels in the shape of the many motion and environmental sensors they now contain. Spyware on the device that can gain access to the same sensor readings as the foreground app can use them to work out how the user is interacting with the device and pick up supposedly secure information.

| Smartphones have properties that make them vulnerable to attacks used to exploit cloud applications. |

Even when the device is meant to be inactive, a public USB-based charging station provides one of a number of ways to gain physical access and probe it. Researchers at the College of William and Mary and the New York Institute of Technology published a proof-of-concept attack a year ago that used power fluctuations on the USB charging cable – even with hardware protection to prevent actual data transfer – to work out which web pages the user was visiting. Another attack developed by Moonsamy and colleagues for locker-type public chargers used a trojan app on the phone that could signal covertly to the charging station while it probed background tasks.

The key to fighting side-channel attacks lies in restricting the amount of information an attacker can pick up from measuring differences. Removing sources of difference is the most effective way to do this. This is often done by removing branches that indicate different decisions being taken or performing dummy activities to disguise the use of less power-hungry operations. However, this is not entirely secure.
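Branch removal is easiest to see in a byte-string comparison. The sketch below contrasts an early-exit comparison with one that does the same amount of work whatever the data. Both function names are hypothetical, and pure Python gives no hard timing guarantees, so production code would normally use the standard library's `hmac.compare_digest` instead.

```python
import hmac


def leaky_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: run time reveals the first mismatch position."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # data-dependent branch an attacker can time
    return True


def branchless_equal(a: bytes, b: bytes) -> bool:
    """Accumulate differences so every byte is always examined."""
    if len(a) != len(b):
        return False
    diff = 0
    for x, y in zip(a, b):
        diff |= x ^ y  # no branch on secret data inside the loop
    return diff == 0


tag = b"\x13\x37\xbe\xef"
print(branchless_equal(tag, b"\x13\x37\xbe\xef"))
print(hmac.compare_digest(tag, b"\x13\x37\xbe\xee"))
```

The length check still branches, which is usually acceptable because the length of a tag or digest is not secret.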

Circuitry that is prone to glitches can leak power information to a local attacker, as can changes in data from cycle to cycle. A large Hamming distance between successive values passed along a bus will consume more power than a transfer of words that share all but a few common bits. Hardened circuit designs focus on such low-level attributes with glitch-free logic and encrypted buses.
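The Hamming-distance effect can be made concrete by counting how many bus lines toggle between successive words. This is a simplified model that assumes power scales with the number of flipped bits. The helper names are illustrative only.

```python
def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two words differ."""
    return bin(a ^ b).count("1")


def bus_toggle_counts(words):
    """Bits flipped on the bus for each consecutive pair of transfers."""
    return [hamming_distance(prev, cur) for prev, cur in zip(words, words[1:])]


# 0x00 -> 0xFF flips all eight lines; 0xFF -> 0xFE flips only one.
print(bus_toggle_counts([0x00, 0xFF, 0xFE, 0x01]))  # [8, 1, 8]
```

An attacker who can measure those per-cycle differences learns something about the values themselves, which is what bus encryption is meant to conceal.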

Recognising the likelihood that the next jump in terms of attacks will be to devices intended for the IoT, Maxim has built countermeasures into its low-end DeepCover chip, designed to protect against counterfeiting. But most off-the-shelf microcontrollers are not designed with side-channel protection in mind, although firmware writers can take simple measures to hinder attackers.

Hugo Fiennes, CEO of secure-hardware platform provider Electric Imp, says: “Many customers don’t want to pay for the hardened hardware. But a lot of side-channel attacks rely on being able to run back-to-back crypto operations rapidly. We deal with that by making that hard to do on the platform. It helps a little.”
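One way firmware might make back-to-back operations hard is to enforce a minimum interval between calls to the crypto routine. The following is a hypothetical sketch, not a description of Electric Imp's platform. The class name, the interval and the injectable clock are all assumptions made for illustration.

```python
import time


class ThrottledCrypto:
    """Wrap a crypto routine and refuse calls that arrive too quickly."""

    def __init__(self, operation, min_interval_s, clock=time.monotonic):
        self._operation = operation
        self._min_interval_s = min_interval_s
        self._clock = clock  # injectable so the policy can be tested
        self._last_call = None

    def __call__(self, *args):
        now = self._clock()
        too_soon = (
            self._last_call is not None
            and now - self._last_call < self._min_interval_s
        )
        if too_soon:
            # Rejected calls do not reset the timer.
            raise RuntimeError("crypto operation requested too soon")
        self._last_call = now
        return self._operation(*args)


# Usage with a stand-in operation in place of a real cipher call.
sign = ThrottledCrypto(lambda msg: msg[::-1], min_interval_s=0.5)
print(sign(b"hello"))
```

Throttling slows the collection of traces rather than removing the leak, which fits Fiennes’ remark that it only helps a little.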

Another approach is to detect behaviour that indicates an attack is being deployed, such as unusual cache-access patterns or software continually running the encryption unit to try to create enough traces for analysis. A protected device may reset or wipe its keys to terminate the attack. However, these responses can undermine reliable behaviour by triggering on false positives.

A research project at the University of Bristol aims to make it easier to bring software-level countermeasures to IoT and embedded devices, even if hardware hardening does not justify the cost. David McCann and colleagues added power estimation into a version of the open-source Thumbulator, based on data collected from execution traces of the ARM Cortex M0 and M4. Explaining the motivation at last summer’s Usenix Security conference, team member Carolyn Whitnall said: “What we’ve been aiming for is to equip software designers with the ability to detect side-channel vulnerabilities in the development stage and provide an opportunity to make security enhancing adjustments while it is still relatively cheap and easy to do.”

The problem for IoT and embedded systems designers is that it’s relatively cheap and easy to mount attacks. Vigilance and more careful design will push the cost up for the hacker and limit their rewards.