Without efficient cooling to keep the ink flowing at the right temperature, the printer cannot operate at full speed. Simulations showed the traditional duct design was causing too much recirculation and turbulence to work effectively.

To get around the issues, HP worked with engineers at Siemens Digital Industries who coupled fluid-flow simulations with a technique mechanical CAD companies now term generative design to come up with a radically different structure.

“As the design was shaping up it kind of defied whatever everyone, including me, expected,” said Siemens simulation engineer Julian Gaenz after the launch of the printer that would use the duct, pointing to a tongue-like protrusion in the final design that its predecessor lacked.

There are two main tactics used in this kind of generative design. One is to randomly generate variants after each simulation run in the expectation that one or more will work better. Another is to home in on problem areas, such as the recirculation in the HP printer’s duct, and come up with shapes that seem likely to reduce the problem before simulating a complete part to see how well the changes worked. HP is far from alone in exploiting simulation-driven design.

Above: The result of generative design - the HP duct tongue protrusion
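The first of the two tactics, generating random variants after each simulation run and keeping whatever works better, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `simulate` is a hypothetical stand-in for a real CFD run, the two shape parameters (`bend`, `taper`) are invented, and the toy score bears no relation to HP's actual duct.

```python
import random

def simulate(shape):
    """Hypothetical stand-in for a CFD run: returns a recirculation score (lower is better)."""
    # Toy quadratic bowl with its minimum at (0.3, 0.7); a real solver would go here.
    bend, taper = shape
    return (bend - 0.3) ** 2 + (taper - 0.7) ** 2

def random_variant_search(start, rounds=200, variants_per_round=8, step=0.1, seed=0):
    """Keep the best design seen so far and perturb it at random each round."""
    rng = random.Random(seed)
    best, best_score = start, simulate(start)
    for _ in range(rounds):
        # Generate random variants around the best design from the previous round.
        candidates = [
            tuple(p + rng.uniform(-step, step) for p in best)
            for _ in range(variants_per_round)
        ]
        # Simulate every variant and keep any that beats the current best.
        for cand in candidates:
            score = simulate(cand)
            if score < best_score:
                best, best_score = cand, score
    return best, best_score

best, score = random_variant_search((0.9, 0.1))
print(best, score)
```

Every variant costs one simulation run, which is cheap here but would dominate the turnaround time with a real solver.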

Tom Gregory, product manager of thermal-analysis tools supplier Future Facilities, says his company’s 6SigmaET tool has been used to help determine the optimum shape for the pins used in a heatsink.

“The optimisation was performed by using genetic algorithms in combination with CFD. The aim of the optimisation was to reduce the pressure drop across the heat sink while maintaining thermal performance.”
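A minimal sketch of that kind of optimisation, assuming toy formulas in place of CFD, might look like the following. The pressure-drop and thermal-resistance models, the parameter ranges and the 0.5 K/W limit are all invented for illustration and are not taken from 6SigmaET; the constraint on thermal performance is handled here with a simple penalty term.

```python
import random

R_MAX = 0.5  # assumed thermal-resistance limit (K/W) that must be maintained
BOUNDS = ((1.0, 5.0), (1.0, 10.0))  # assumed ranges: pin diameter, pin spacing (mm)

def pressure_drop(design):
    diameter, spacing = design
    return diameter / spacing  # toy model: thicker, denser pins block more air

def thermal_resistance(design):
    diameter, spacing = design
    return 0.2 + 0.1 * spacing / diameter  # toy model: sparser pins shed less heat

def fitness(design):
    # Lower is better: pressure drop plus a heavy penalty for missing the thermal target.
    violation = max(0.0, thermal_resistance(design) - R_MAX)
    return pressure_drop(design) + 100.0 * violation

def clamp(value, low, high):
    return max(low, min(high, value))

def evolve(generations=60, pop_size=30, mutation=0.3, seed=1):
    rng = random.Random(seed)
    pop = [tuple(rng.uniform(lo, hi) for lo, hi in BOUNDS) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness)
        next_pop = pop[:2]  # elitism: carry the two best designs forward unchanged
        while len(next_pop) < pop_size:
            # Tournament selection: the fitter of three random designs becomes a parent.
            a = min(rng.sample(pop, 3), key=fitness)
            b = min(rng.sample(pop, 3), key=fitness)
            # Blend crossover followed by Gaussian mutation, kept within bounds.
            child = tuple(
                clamp((x + y) / 2 + rng.gauss(0, mutation), lo, hi)
                for x, y, (lo, hi) in zip(a, b, BOUNDS)
            )
            next_pop.append(child)
        pop = next_pop
    return min(pop, key=fitness)

best = evolve()
print(best, pressure_drop(best), thermal_resistance(best))
```

In a real flow each call to `fitness` would trigger a CFD run, so population size and generation count are set by the simulation budget rather than by convenience.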

Though it is entirely possible to create the variants or iterations by hand, machine learning looks to be a useful tool for generative design because, in principle, it can create variations quickly and come up with counterintuitive but effective results. Gregory points to several research papers that have come up with novel heatsink designs. “The designs that are generated are impressive and visually stunning. However, they cannot be manufactured by traditional methods.”

3D printing not essential

Heatsinks that emerge from research often need 3D printing, as did the HP duct. However, as that part replaced six injection-moulded parts, it worked out more cost-effective once the 3D printing process was optimised.

Lieven Vervecken, CEO and co-founder of Belgium-based generative-design startup Diabatix, says 3D printing is not essential.

“We have a few manufacturing techniques that we support by default, such as CNC machining, extrusion, die-casting and sheet metal forming,” Vervecken says. Each has its use in different markets. For example, sheet metal forming has proved useful for making the large battery cold plates needed by electric vehicles.

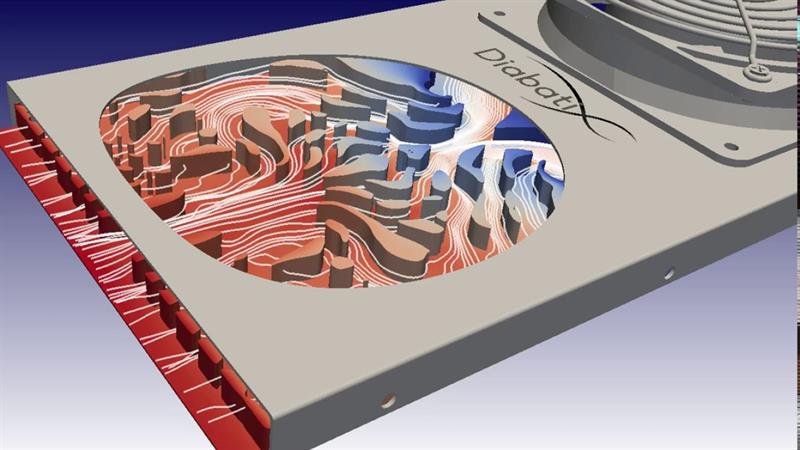

Diabatix has built a generative environment around a selection of open-source design and simulation tools that iteratively refines the structure of a heatsink or cooling component based on the toolsuite’s analysis of thermal flows and mechanical structure.

The company is preparing to launch a cloud-hosted version of the tools in April.

Although generative design can use randomisation to create novel designs, this was not the approach taken by Diabatix. The problem with randomisation is that the simulation overhead needed to assess each variant makes the technique too unwieldy. Instead, Vervecken says, the startup uses machine learning to build algorithms that can come up with effective but often unexpected shapes and structures rather than the parallel fins found in most conventional designs, with manufacturing constraints applied to keep the results practical to make.

“This is why we can generate up to 30 per cent additional cooling performance,” Vervecken claims.

Above: A 3D design from Diabatix

Thermal engineering

Another potential target for machine learning in thermal engineering is to reduce the bottleneck introduced by simulation if a large number of variants need to be analysed in parallel before further progress can be made.

Training a deep-learning model with the results of extensive simulations has been used in areas such as materials and particle physics research because the trained models can run faster than the original simulations, which may take days to complete even on a supercomputer. They do not offer full accuracy but can be used on a fast path to home in on parameters before running a final detailed simulation.
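The fast-path idea can be illustrated with a much simpler surrogate than a deep-learning model. The sketch below swaps the neural network for a quadratic least-squares fit, and `slow_simulation` is a hypothetical stand-in with an invented formula, so it shows only the workflow: a few detailed runs to train, a cheap sweep to home in, then a final detailed check of the shortlist.

```python
runs = []  # records every call to the expensive simulation

def slow_simulation(fin_height):
    """Hypothetical stand-in for a long CFD run: peak temperature in deg C."""
    runs.append(fin_height)
    # Toy trade-off: taller fins shed more heat but also restrict airflow.
    return 40.0 + 30.0 / fin_height + 2.0 * fin_height

def fit_quadratic(xs, ys):
    """Least-squares fit of y = a + b*x + c*x^2 via the normal equations."""
    # Build the 3x3 system (X^T X) coeffs = X^T y, augmented with the right-hand side.
    rows = [
        [sum(x ** (i + j) for x in xs) for j in range(3)]
        + [sum(y * x ** i for x, y in zip(xs, ys))]
        for i in range(3)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(rows[r][col]))
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for r in range(3):
            if r != col:
                factor = rows[r][col] / rows[col][col]
                rows[r] = [v - factor * p for v, p in zip(rows[r], rows[col])]
    return [rows[i][3] / rows[i][i] for i in range(3)]

# 1. Pay for a handful of detailed runs to build the training set.
train_x = [3.0, 3.5, 4.0, 4.5, 5.0]
train_y = [slow_simulation(x) for x in train_x]
a, b, c = fit_quadratic(train_x, train_y)

def surrogate(fin_height):
    """Cheap approximation of slow_simulation, valid only near the training range."""
    return a + b * fin_height + c * fin_height ** 2

# 2. Fast path: screen a dense sweep with the cheap surrogate only.
candidates = [3.0 + 0.01 * i for i in range(201)]
shortlist = sorted(candidates, key=surrogate)[:5]

# 3. Confirm the shortlist with the detailed simulation.
best_h = min(shortlist, key=slow_simulation)
print(best_h, len(runs))
```

The surrogate's optimum does not coincide exactly with that of the detailed model, which is why the last step goes back to the full simulation for the shortlisted candidates.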

GPU maker Nvidia, already a strong proponent of machine learning, has applied the same technique in a project called SimNet, which targets the kinds of computational fluid dynamics (CFD) models used for thermal engineering.

A key problem is that deep learning itself is computationally intensive and requires weeks of simulation to build a big enough training set to let an AI model learn how parameters affect air and heat flow in space.

Vervecken says the breadth of projects in which Diabatix is involved, which range in scale from mobile-phone processor heatsinks to truck-scale battery cold plates, increases the complexity of the problem.

“To train a single model to cover that whole range? That's difficult,” Vervecken says, adding that it makes more sense to prioritise machine learning for the design tools rather than the simulation engine.

Against a possible AI-based speedup, you need to weigh the contributions that can come from simply optimising the CFD algorithms directly.

Specialist vendors such as Future Facilities have employed multicore execution and other optimisations to cut overall turnaround time. In a study with Rohde & Schwarz, the time taken to run a simulation, from CAD import through mesh generation to analysis, was cut to below 15 hours from more than 40.

As well as heatsinks, simulation-driven machine learning can be applied to core device and system design.

“One area that Future Facilities is working with machine learning research groups is in using CFD simulation to train machine learning algorithms,” Gregory notes.

There are a number of areas where machine learning can prove useful. One is to help build simple models of heat generation and transfer that can guide algorithms used to decide when to power down processors on a multicore SoC.

“Thermal simulation can easily train the machine learning algorithm how the temperature of the device would change based on processor usage. This can be done quickly in parallel before the device has even been manufactured,” Gregory says.
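As a rough sketch of what such a simple model could look like, the example below fits a two-parameter lumped thermal model to a simulated temperature trace and then uses it to decide whether a core should be powered down. Everything in it is an assumption made for illustration: `simulate_trace` is a hypothetical stand-in for a thermal simulation, the first-order model and its coefficients are invented, and plain least squares takes the place of a machine-learning algorithm.

```python
import random

AMBIENT = 25.0  # assumed ambient temperature in deg C

def simulate_trace(loads, cooling=0.1, heating=8.0, start=AMBIENT):
    """Hypothetical stand-in for a thermal simulation of one core."""
    temps = [start]
    for load in loads:
        t = temps[-1]
        temps.append(t + cooling * (AMBIENT - t) + heating * load)
    return temps

def fit_model(loads, temps):
    """Least-squares fit of dT = cooling*(AMBIENT - T) + heating*load."""
    x1 = [AMBIENT - t for t in temps[:-1]]       # cooling driver at each step
    x2 = list(loads)                             # heating driver at each step
    y = [nxt - cur for cur, nxt in zip(temps, temps[1:])]
    s11 = sum(p * p for p in x1)
    s12 = sum(p * q for p, q in zip(x1, x2))
    s22 = sum(q * q for q in x2)
    s1y = sum(p * v for p, v in zip(x1, y))
    s2y = sum(q * v for q, v in zip(x2, y))
    det = s11 * s22 - s12 * s12                  # 2x2 normal equations, Cramer's rule
    cooling = (s1y * s22 - s12 * s2y) / det
    heating = (s11 * s2y - s12 * s1y) / det
    return cooling, heating

def should_power_down(temp, load, model, limit=85.0, horizon=10):
    """Predict ahead with the fitted model; power down if the limit would be crossed."""
    cooling, heating = model
    for _ in range(horizon):
        temp += cooling * (AMBIENT - temp) + heating * load
        if temp > limit:
            return True
    return False

# Train on a simulated trace with random processor usage between 0 and 1.
rng = random.Random(0)
loads = [rng.random() for _ in range(200)]
model = fit_model(loads, simulate_trace(loads))

print(model)
print(should_power_down(80.0, 1.0, model), should_power_down(40.0, 0.2, model))
```

Because the training trace comes from simulation, the model can be fitted before any silicon exists, which is the point Gregory makes about training ahead of manufacture.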

Another use lies in R&D for new types of device, such as those used for power electronics, where excessive heat can cause thermal runaway and other safety issues. “Comprehensive training data is essential for an effective machine-learning algorithm, but it’s often not practical or safe to obtain training data from physical devices. If you are optimising a new device or system, a physical device may not have been created,” Gregory says.

Performance is not the only motivation for using AI-based models. “Very often it's a cheaper solution they are looking for,” adds Vervecken. “There’s a misconception that exists with our technology. We are not always looking for the most efficient heatsink. We have a project on-going where the customer is instead looking for the most affordable solution. The customer said performance is not an issue: the existing design is already overprovisioned by 30 per cent but they can't get that type of design to be cheaper.”

It is still early days for machine learning in thermal engineering, but generative design techniques are demonstrating the value of simulation and rapid iteration in coming up with designs that work better, work out cheaper or both.