Over the next four years, the total value of the data centre industry is set to more than double in size, growing from a $1.2-billion industry to a $3.2-billion industry by 2024. At the same time, the total number of data centres is also growing, with Synergy Research estimating that the number of hyperscale data centres has already risen from 100 in 2017, to over 300 in 2018, and then to nearly 500 as of late 2019.

But while this mammoth industry is clearly growing in size, it’s also growing in complexity. As both consumer and business expectations evolve, ever more data must be stored, managed and processed faster than ever before.

Growing demands for cloud computing, homeworking and live-streaming have added to this pressure, along with innovations such as 5G, blockchain and machine learning. There is also growing pressure for sustainability and efficiency, with data centres now eating up more than 2 per cent of the world’s electricity and emitting roughly as much CO2 as the airline industry.

Every one of these considerations has a knock-on effect for data centre designs, impacting everything from the layout of the largest data centres right down to the tiniest electronic components sitting inside.

As data centres become more powerful, and their applications more varied, optimum power, temperature and performance have become more important but also even more difficult to attain. The challenge for design engineers is to place what are now extremely powerful components into compact chassis without compromising reliability, usability or longevity.

With more power, however, comes more heat. Given this fact, the key focus for engineers in future will be figuring out how best to keep their designs cool - regardless of whether they are designing at the component, server, rack or data centre level.

This has led to a major investment in new cooling techniques, along with computational fluid dynamics (CFD) technologies to understand where those new techniques will be best applied.

AI and the data centre

Perhaps the biggest challenge in data centre cooling has been the introduction of machine learning, and the use of data centres for massive AI data processing. These systems are very high-powered and typically run at high utilisation. This has led many to adopt thermal simulation to ensure new systems can be cooled effectively before the equipment is installed.

Last year, the 6SigmaET team at Future Facilities held a panel discussion with experts from across the IT electronics and data centre component space. This panel identified AI as a key factor driving change at the IT component level, with data centres being forced to adopt increasingly powerful servers and ever more complex cooling systems, such as liquid and immersion-cooled servers.

As Dr Bahgat Sammakia, from Binghamton University, put it during the event, “AI is going to change things all the way from chip level to software. It will cause a tremendous rise in the amount of data being processed and will drive tremendous storage demand.”

Already, the hardware being used to enable the rise of machine learning has evolved dramatically. Many electronics manufacturers have produced AI accelerator application-specific integrated circuits (ASICs), with Nvidia’s DGX and Google’s Tensor Processing Unit (TPU) being two key examples. But while positioned as game-changing developments for the AI processing space, this new hardware also brings serious thermal complications to data centre electronics design.

Unlike Google’s previous tensor processing units, the TPUv3 is the first to bring liquid cooling to the chip - with coolant being delivered to a cold plate sitting atop each TPUv3 ASIC chip and two pipes attached to circulate excess heat away from the components.

This inclusion of liquid cooling at an individual chip level changes the game when it comes to thermal design. Now, rather than relying on fans or server-level liquid cooling, engineers must consider how these cold-plate-cooled components fit within their wider design ecosystem.

Although liquid cooling was used to cool supercomputers in the 1970s and 1980s, it has not been commonly used in modern data centres until recently. However, due to changing technology requirements, it is now finding its way into the data centre - and thermal engineers need to adapt quickly to this new reality.

A sledgehammer to crack a nut?

While liquid cooling systems are proving extremely effective for the new age of AI hardware, they should not necessarily be used as the go-to solution for thermal management for all servers.

Effective cooling is vital within data centre electronics, but so too is optimising efficiency - both in terms of energy and capital investment. While liquid cooling may be extremely effective, it’s vital that designers minimise thermal issues across their entire designs, rather than relying on the sledgehammer approach of installing a liquid cooling system just because the option is available.

For some of the most powerful chips, such as the Google TPUv3, it may be that liquid cooling is the only viable solution. However, with careful design, air cooling can still be used for even high-powered servers.
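To give a rough sense of why the choice between air and liquid matters, the steady-state energy balance (heat load = density x volume flow x specific heat x coolant temperature rise) can be used to estimate how much coolant has to move through a server. The sketch below is illustrative only: the fluid properties are approximate room-temperature values, and the 1 kW heat load and 10 K temperature rise are assumed figures, not data from any particular server.

```python
# Approximate fluid properties near room temperature (illustrative values).
FLUIDS = {
    "air":   {"density": 1.2,   "cp": 1005.0},  # kg/m^3, J/(kg*K)
    "water": {"density": 998.0, "cp": 4180.0},  # kg/m^3, J/(kg*K)
}


def volumetric_flow(heat_load_w, delta_t_k, fluid):
    """Volume flow (m^3/s) needed to carry heat_load_w watts away
    while the coolant warms by delta_t_k kelvin."""
    props = FLUIDS[fluid]
    return heat_load_w / (props["density"] * props["cp"] * delta_t_k)


# Assumed example: a 1 kW server with a 10 K coolant temperature rise.
air_flow = volumetric_flow(1000.0, 10.0, "air")
water_flow = volumetric_flow(1000.0, 10.0, "water")
print(f"air:   {air_flow * 1000:.1f} litres/s")
print(f"water: {water_flow * 1000:.4f} litres/s")
```

For the same heat load and temperature rise, air needs a volume flow several thousand times greater than water. That is why careful attention to airflow paths, fan selection and heat sink design is what makes air cooling workable at higher powers.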

Liquid cooling may help to rapidly dissipate heat build-up, but the added complexity that comes with these systems adds far more potential points of failure. You still have all the traditional risks, but you now add in a dozen or so additional failure points in the form of hoses, barbs, radiators, water blocks and pumps. All of this adds complexity, cost and, most importantly, risk. In an industry where failure isn’t an option, this risk must be a serious consideration when planning thermal designs.
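The effect of extra failure points can be illustrated with a simple series reliability calculation, in which the system only works if every part works. This is a minimal sketch under strong assumptions: failures are treated as independent, and every reliability figure below is hypothetical rather than taken from real component data.

```python
from math import prod


def series_reliability(reliabilities):
    """Probability that every part in a series chain survives,
    assuming the parts fail independently of one another."""
    return prod(reliabilities)


# Hypothetical per-part survival probabilities over some service interval.
traditional_parts = [0.99] * 4    # e.g. the parts an air-cooled design already has
liquid_loop_parts = [0.995] * 12  # e.g. hoses, barbs, radiators, water blocks, pumps

baseline = series_reliability(traditional_parts)
with_liquid_loop = series_reliability(traditional_parts + liquid_loop_parts)

print(f"traditional parts only: {baseline:.3f}")
print(f"with liquid loop added: {with_liquid_loop:.3f}")
```

Even when each added part is individually very reliable, multiplying a dozen more terms into the chain lowers the overall figure, which is the risk the designer has to weigh against the cooling benefit.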

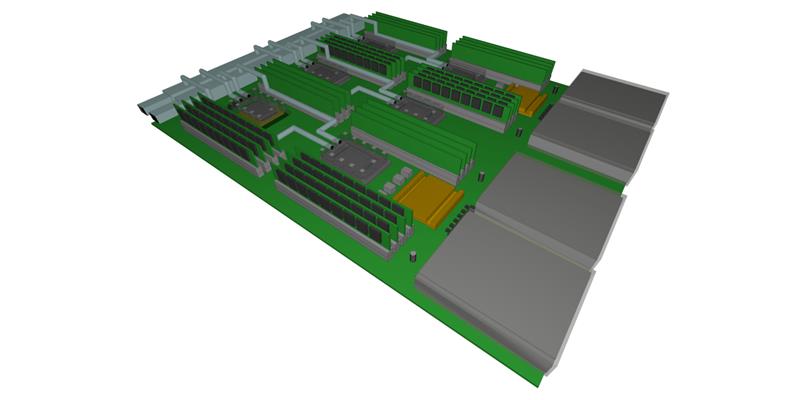

Above: Next generation data centres are driving demand for new cooling techniques, such as liquid cooling

Instead of over-relying on liquid cooling, it’s the businesses that maximise their use of space and build thermal considerations into the fabric of their component, server and rack designs that will be most effective at minimising energy waste and limiting unnecessary component costs.

Heat sink design, fan selection and component layouts are all still huge factors in thermal design, as is knowing when to use a liquid cooling system and when a fan, or even a heat sink, will do the job. So, given the complexity of modern data centre electronics, how do you know what the right approach is and when it is best to use each of these different solutions? That’s where thermal simulation is proving so vital, allowing engineers to switch out different hybrid cooling combinations in a risk-free environment in order to work out what’s best.

The case for CFD

The benefits of analysing thermal performance using computational fluid dynamics (CFD) models at the board and server level are well understood.

This practice has been established for decades, and running multiple thermal simulations has proven more cost-effective than creating multiple physical prototypes. Thermal simulation allows engineers to evaluate solutions that improve power density, bolster performance and reliability and allow server inlet temperatures to increase. These increased inlet temperatures further improve efficiency, as the hotter the inlet air a device can handle, the less money must be spent on cooling.
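The link between thermal design and allowable inlet temperature can be sketched with the standard lumped thermal resistance relation, junction temperature = inlet temperature + power x thermal resistance. This is a simplified, hedged illustration rather than a substitute for CFD: the function name and all the numbers below are assumptions chosen for the example.

```python
def max_inlet_temp(t_junction_max_c, power_w, r_th_k_per_w):
    """Highest inlet air temperature (deg C) that keeps the junction at or
    below t_junction_max_c, given a lumped junction-to-inlet thermal
    resistance r_th_k_per_w (K/W) and a dissipated power power_w (W)."""
    return t_junction_max_c - power_w * r_th_k_per_w


# Assumed example: a 200 W component with an 85 deg C junction limit.
original_design = max_inlet_temp(85.0, 200.0, 0.25)  # hypothetical baseline heat sink
improved_design = max_inlet_temp(85.0, 200.0, 0.20)  # hypothetical improved heat sink

print(f"baseline heat sink: inlet up to {original_design:.1f} deg C")
print(f"improved heat sink: inlet up to {improved_design:.1f} deg C")
```

In this simplified picture, every reduction in thermal resistance found through simulation translates directly into headroom on inlet temperature.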

At the same time, thermal simulation should not be done in isolation: it should consider how the server will be mounted in a rack and how it works with the data centre cooling system.

Thermal simulation software such as 6SigmaET is adding functionality to make it easier for engineers to verify their liquid cooling designs, including 1D flow network modelling, intelligent modelling objects and fast solving of liquid cooling loops.
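As a generic illustration of what 1D flow network modelling means, the sketch below splits a total coolant flow between cold plates plumbed in parallel. It is not how 6SigmaET or any other tool is implemented. It assumes each branch follows a simple quadratic loss law (pressure drop = k x flow squared), and the function name and loss coefficients are hypothetical.

```python
from math import sqrt


def split_parallel_flow(total_flow, k_values):
    """Split total_flow between parallel branches that share one pressure drop.

    Each branch is assumed to obey dp = k * q**2, so q = sqrt(dp / k).
    Returns (dp, flows) in units consistent with the inputs.
    """
    conductances = [1.0 / sqrt(k) for k in k_values]
    # Sum of branch flows must equal total_flow: sqrt(dp) * sum(conductances).
    dp = (total_flow / sum(conductances)) ** 2
    flows = [c * sqrt(dp) for c in conductances]
    return dp, flows


# Hypothetical loop: three cold plates, the third more restrictive than the others.
dp, flows = split_parallel_flow(total_flow=3.0, k_values=[1.0, 1.0, 4.0])
for index, flow in enumerate(flows, start=1):
    print(f"cold plate {index}: flow {flow:.2f}")
print(f"shared pressure drop: {dp:.2f}")
```

The more restrictive branch receives less coolant, which is the kind of imbalance a network model can flag before a detailed 3D simulation is run.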

When preparing for the increasingly complex future of data centre electronics design, thermal management has a major role to play. Whether that’s for detailed components, simplified servers, racks or data centre layouts, thermal simulation will lie at the heart of the next generation of data centre design.

Author details: Tom Gregory is Product Manager, 6SigmaET