However, because of sheer volume, it is road vehicles where the biggest changes will be felt. Vehicle efficiency and road safety will improve, congestion will fall, and the technology and legislation needed to make this a reality are already in development.

It is generally agreed that the transition to autonomous driving will be gradual. In the US, the National Highway Traffic Safety Administration (NHTSA) has defined five levels of automation, numbered 0 to 4, which it refers to as the automation continuum. Advanced Driver Assistance Systems (ADAS) have moved from being an optional extra to a standard feature in most cars, but despite their sophistication, many are still classed as Level 0 on the NHTSA continuum.

Level 0, defined as No Automation, means that the driver is in full control of all three of the vehicle’s primary controls (throttle, brakes and steering) at all times and has full responsibility for monitoring and reacting to the driving conditions.

Level 1, described as Function Specific Automation, applies when a specific and independent function is automated. A Level 1 car may have more than one automated system, but those systems operate independently of one another. Adaptive cruise control is one example; crucially, responsibility for driving still lies with the driver.

Level 2, defined as Combined Function Automation, applies when at least two primary control functions are automated and work together. Level 3 is Limited Self-Driving Automation, in which the driver can cede full control under certain conditions but must remain available to take back control. Level 4 is Full Self-Driving Automation: the vehicle is in complete control of all primary functions and is responsible for monitoring and reacting to driving conditions for the entire trip, a scenario that extends to unoccupied vehicles.
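To make the distinctions between the levels concrete, the continuum can be encoded as a Python `IntEnum` with a simplified classifier. The `classify` function and its parameters (`automated_functions`, `combined`, `vehicle_monitors`, `driver_fallback`) are hypothetical and only approximate NHTSA’s prose definitions; lane centring, used in the usage lines, is an illustrative second function not mentioned in the text. This is a sketch, not an official test for any level.

```python
from enum import IntEnum


class NhtsaLevel(IntEnum):
    """The five levels of NHTSA's automation continuum."""
    NO_AUTOMATION = 0
    FUNCTION_SPECIFIC = 1
    COMBINED_FUNCTION = 2
    LIMITED_SELF_DRIVING = 3
    FULL_SELF_DRIVING = 4


def classify(automated_functions: int, combined: bool = False,
             vehicle_monitors: bool = False, driver_fallback: bool = True) -> NhtsaLevel:
    """Hypothetical, simplified mapping from vehicle capabilities to a level.

    automated_functions: number of automated driving functions
                         (e.g. adaptive cruise control, lane centring)
    combined:            whether those functions work together rather than independently
    vehicle_monitors:    whether the vehicle, not the driver, monitors driving conditions
    driver_fallback:     whether a driver must remain available to take back control
    """
    if automated_functions < 0:
        raise ValueError("automated_functions cannot be negative")
    if vehicle_monitors:
        # Levels 3 and 4: the vehicle monitors and reacts to driving conditions.
        return (NhtsaLevel.LIMITED_SELF_DRIVING if driver_fallback
                else NhtsaLevel.FULL_SELF_DRIVING)
    if automated_functions >= 2 and combined:
        # Level 2: two or more automated functions working together.
        return NhtsaLevel.COMBINED_FUNCTION
    if automated_functions >= 1:
        # Level 1: one or more automated functions, each operating independently.
        return NhtsaLevel.FUNCTION_SPECIFIC
    return NhtsaLevel.NO_AUTOMATION


# Adaptive cruise control alone, then combined with a lane-centring function.
print(classify(1).name)                 # FUNCTION_SPECIFIC
print(classify(2, combined=True).name)  # COMBINED_FUNCTION
```

Note that two automated systems only reach Level 2 when they operate together; two independent systems remain at Level 1, matching the description above.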

Intelligent Vision Systems

Autonomy may give the driver time to sit back and relax, but the technology under the hood will be working hard to keep drivers and pedestrians safe, and intelligent vision systems are expected to be vital in this respect. Technologies such as short- and long-range radar and ultrasonic sensors are already used for adaptive cruise control and to help identify bends in the road. Arguably, however, only intelligent stereoscopic vision systems can detect and distinguish between road signs, pedestrians, cyclists, stationary and moving objects and other hazards with the level of certainty needed for fully autonomous driving.
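What a stereoscopic system adds over a single camera is depth. For a rectified pair of cameras, the textbook pinhole relation gives distance as Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras and d the disparity (the horizontal shift of a point between the left and right images). The sketch below applies that relation; the camera parameters are hypothetical and are not those of any particular product.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance (metres) to a point seen by a rectified stereo camera pair.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres, in metres
    disparity_px: horizontal shift of the point between left and right images
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera pair: 1000 px focal length, 0.3 m baseline.
for d in (60.0, 15.0, 3.0):
    print(f"disparity {d:5.1f} px -> depth {depth_from_disparity(1000.0, 0.3, d):6.1f} m")
```

Because Z = f·B/d, a fixed one-pixel disparity error produces a depth error that grows roughly with the square of the distance, so distant objects are measured far less precisely than near ones.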

In this scenario, vision systems will need to be far more sophisticated than simple cameras. Their intelligence will need to be comparable with that of the human visual cortex, or even better, given the range of viewing angles and driving conditions they must operate under. Efforts are therefore under way to give vision systems intelligence comparable to that of a human driver.

These intelligent vision systems will be closely coupled to, and empowered by, artificial intelligence. They will be intelligent at start-up, but will also be able to learn and adapt better to road conditions. Through cloud-based technologies and the burgeoning vehicle-to-vehicle (V2V) wireless infrastructure, the intelligence of these systems will also expand and be shared between road users.

Like much of the technology needed to support autonomous vehicles, intelligent vision systems already exist and are used in other industries, for example in industrial robots. Not long ago, vision systems relied on frame grabber cards added to PCs, which processed a single frame at a time and rarely in real time.

Intelligent vision systems will need to do much more, and all in real time. This will require processing power that is only now becoming available, through advances in System-on-Chip (SoC) platforms, advanced software, deep learning algorithms and open source projects.
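A rough calculation shows why real-time operation is demanding. The sketch below computes the time available to process each frame and the raw pixel rate a camera set-up produces; the resolution, frame rate and two-camera configuration are assumptions chosen for illustration, not figures from any specific vehicle.

```python
def realtime_budget(width: int, height: int, fps: float, cameras: int = 2) -> tuple[float, float]:
    """Return (milliseconds available per frame, pixels to process per second).

    Assumes every camera delivers full frames at the same rate and that each
    frame must be fully processed before the next one arrives.
    """
    if fps <= 0 or width <= 0 or height <= 0 or cameras <= 0:
        raise ValueError("all parameters must be positive")
    ms_per_frame = 1000.0 / fps
    pixels_per_second = width * height * fps * cameras
    return ms_per_frame, pixels_per_second


# Hypothetical stereo pair: two 1920x1080 cameras at 30 frames per second.
budget_ms, throughput = realtime_budget(1920, 1080, 30)
print(f"{budget_ms:.1f} ms per frame, {throughput / 1e6:.1f} Mpixel/s")
# 33.3 ms per frame, 124.4 Mpixel/s
```

Every processing stage has to fit inside that per-frame budget, and the pixel rate scales linearly with resolution, frame rate and the number of cameras.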

Artificial Visual Cortex

Vision systems will aim to match the capability of human vision by providing an artificial visual cortex. This may sound like science fiction, but such systems have already been developed and deployed.

This is made possible by Heterogeneous System Architecture (HSA) platforms, which combine powerful general-purpose microprocessor units (MPUs) with highly parallel graphics processing units (GPUs). These platforms provide the intelligence, while highly capable FPGAs process individual pixels directly from the image sensors.

While biological vision has evolved to see in three primary colours, machine vision systems often work in terms of hue, saturation and intensity (HSI). Because camera sensors still typically use RGB filters, the first stage in an intelligent vision system is to convert the RGB data into HSI. By combining FPGAs with advanced HSA platforms, OEMs are developing intelligent vision systems. For example, Unibap has developed the IVS-70, an intelligent vision system now used to add advanced stereoscopic vision to machines across a number of industrial applications (Figure 1 shows such a system).
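The RGB-to-HSI step can be illustrated with the standard geometric conversion formulas found in image processing textbooks: intensity is the mean of the three channels, saturation is 1 − min(R, G, B)/I, and hue is an angle derived from the channel differences. The sketch below converts a single pixel with components scaled to [0, 1] and returns hue in degrees. It is an assumption-based illustration, not Unibap’s implementation; a production system would apply an equivalent operation to every pixel in parallel, for instance on the FPGA stage.

```python
import math


def rgb_to_hsi(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert one RGB pixel (components in [0, 1]) to (hue in degrees, saturation, intensity)."""
    intensity = (r + g + b) / 3.0
    if intensity == 0.0:
        return 0.0, 0.0, 0.0  # black: hue and saturation are undefined, report 0
    saturation = 1.0 - min(r, g, b) / intensity
    num = 0.5 * ((r - g) + (r - b))
    # Mathematically non-negative; max() guards against tiny rounding errors.
    den = math.sqrt(max(0.0, (r - g) ** 2 + (r - b) * (g - b)))
    if den == 0.0:
        hue = 0.0  # grey pixel: hue is undefined, report 0
    else:
        # Clamp the cosine to [-1, 1] so rounding cannot push acos out of its domain.
        theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
        hue = theta if b <= g else 360.0 - theta
    return hue, saturation, intensity


for name, rgb in [("red", (1, 0, 0)), ("green", (0, 1, 0)),
                  ("blue", (0, 0, 1)), ("grey", (0.5, 0.5, 0.5))]:
    h, s, i = rgb_to_hsi(*rgb)
    print(f"{name:5s} hue={h:6.1f} sat={s:.2f} int={i:.2f}")
```

Separating hue from intensity in this way lets later stages reason about colour largely independently of lighting level, which is one common motivation for the conversion.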

The software infrastructure used to develop intelligent vision systems, such as OpenCV (Open Source Computer Vision Library) and OpenCL (Open Computing Language), requires high-performance processing platforms to execute its advanced algorithms. The AMD G-Series, for example, combines an x86 CPU core with a highly optimised GPU in a combination AMD calls the Accelerated Processing Unit (APU). Figure 2 shows a representation of how AMD’s APU brings these two processing functions together.

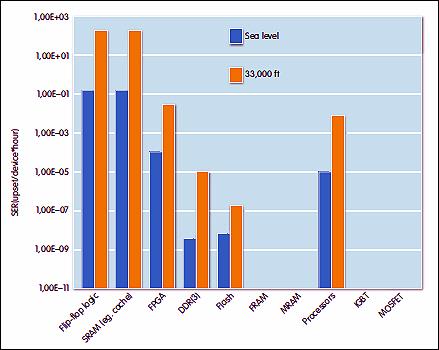

Figure 2: The susceptibility of common electronics to background neutron radiation: cross-section Single Event Ratio (upsets/device·hour).

This architecture is now also supported by AMD’s AMDGPU Hybrid Pro Driver package for Linux, which can be used to further accelerate OpenCL and OpenGL software, as well as the Khronos Group’s Vulkan API, a low-overhead, cross-platform 3D graphics and compute API (known as ‘Next Generation OpenGL’ until the name ‘Vulkan’ was announced). The VDPAU (Video Decode and Presentation API for Unix) interface, which simplifies access to the advanced features of the AMD G-Series APUs, is also supported.

A further consideration for the safety of vision systems in autonomous vehicles is their susceptibility to Single Event Upsets (SEUs), in which an energetic particle, such as a neutron produced by cosmic radiation, flips the state of a memory cell or register. Resilience to SEUs is likely to be a crucial requirement for any system used to achieve Level 4 on the automation continuum. Tests carried out by the NASA Goddard Space Flight Center have shown that the AMD G-Series SoC can withstand ionising radiation doses far exceeding those currently specified for standard space flight.
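A per-device-hour upset rate, the unit used in the Figure 2 caption, can be turned into fleet-level numbers with simple arithmetic. The sketch below assumes upsets occur independently at a constant average rate (a Poisson process), so the expected count is rate × devices × hours and the probability of at least one upset is 1 − e^(−expected). The rate and fleet figures are hypothetical and are not measured values for any device.

```python
import math


def seu_exposure(rate_per_device_hour: float, devices: int, hours: float) -> tuple[float, float]:
    """Return (expected upsets, probability of at least one upset).

    Assumes upsets occur independently at a constant average rate, so the
    number of upsets over the exposure period is Poisson distributed.
    """
    if rate_per_device_hour < 0 or devices < 0 or hours < 0:
        raise ValueError("inputs must be non-negative")
    expected = rate_per_device_hour * devices * hours
    p_at_least_one = 1.0 - math.exp(-expected)
    return expected, p_at_least_one


# Hypothetical figures: 1e-6 upsets per device-hour, one vision processor
# per car, a fleet of 100,000 cars each driven 500 hours a year.
fleet_expected, _ = seu_exposure(1e-6, 100_000, 500)
_, single_car_p = seu_exposure(1e-6, 1, 500)
print(f"Fleet: {fleet_expected:.0f} upsets/year; one car: {single_car_p:.2%} chance per year")
```

Even a tiny per-device rate becomes a regular event across a large fleet, which is why SEU resilience matters more for mass-market vehicles than for a single prototype.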

Autonomous vehicles will encompass the most advanced technology ever deployed in large volumes. In terms of operations per second, the underlying hardware will outstrip anything that has gone before it.

Intelligent vision systems will be a key driver, enabled by a combination of advanced processing platforms and supported by a software ecosystem that targets these powerful hardware solutions.

When coupled with deep learning artificial intelligence, these vision systems will be equipped to monitor and understand the world around them, delivering on the promise of safer, faster and more sustainable mass transport.

Author profile:

Dr. Lars Asplund is Chairman, and Dr. Fredrik Bruhn is Director and CEO, of Unibap.