One of the first products to seize on the use of unique IDs was the inkjet cartridge, which often carries a price similar to that of the printer itself. The relatively high price of cartridges gives competitors the opportunity to undercut the printer manufacturer's supplies and still make a profit. Manufacturers quickly stepped in with techniques that allow the printer to authenticate cartridges and reject those that fail the test.

Industries that rely on the ability to have spare parts checked and certified for safety and security purposes have taken a keen interest in the use of ID codes to weed out counterfeits that might compromise the system's ability to function or siphon secrets out to hackers.

Uniqueness is important in these environments because the cost of a counterfeiter finding the master code for a particular product line is so high. Giving an installed part a unique ID that still allows the machinery's software to authenticate it as coming from the manufacturer limits the cost of that ID being revealed. The manufacturer then has to weigh the risks of using a pattern for the IDs so that the machine can authenticate each part with a simple algorithm. Such an algorithm could be reverse-engineered, or fooled by a counterfeiter who finds one valid ID and uses it across all the parts it sells. A more secure alternative is to employ a database of known good IDs, generated during manufacture, that the machine checks online, but this risks inconveniencing the user.

FPGA manufacturers have provided one means to give parts a unique ID in a way that limits the risk of the ID itself being exposed to the world. By including a cryptographic engine and an area of non-volatile storage on-chip, the device can take part in challenge-response communications that interrogate the ID – implemented as a secret key – to come up with a valid response. The challenge-response protocol ensures the chip never passes the digits of the ID over an external bus, where they could be intercepted by someone trying to reverse-engineer the system.
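The exchange described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's protocol: it assumes a symmetric scheme in which the verifier also holds the key, and it uses HMAC-SHA256 as the response function. The `Device` class and `verify` function are hypothetical names chosen for the example.

```python
import hashlib
import hmac
import secrets


class Device:
    """Stands in for the chip: the secret key never leaves this object."""

    def __init__(self, secret_key: bytes):
        self._key = secret_key

    def respond(self, challenge: bytes) -> bytes:
        # Only a keyed digest of the challenge crosses the "bus".
        return hmac.new(self._key, challenge, hashlib.sha256).digest()


def verify(expected_key: bytes, device: Device) -> bool:
    """Host-side check (assumes the host shares the key with the part)."""
    challenge = secrets.token_bytes(16)  # fresh random challenge each time
    expected = hmac.new(expected_key, challenge, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(expected, device.respond(challenge))


key = secrets.token_bytes(32)
genuine = Device(key)
counterfeit = Device(secrets.token_bytes(32))  # does not know the real key

genuine_ok = verify(key, genuine)
counterfeit_ok = verify(key, counterfeit)
print("genuine part accepted:", genuine_ok)
print("counterfeit part accepted:", counterfeit_ok)
```

Because the challenge is freshly generated for every check, a response recorded from a genuine part is of no use for answering a later challenge.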

Using secret keys, it is possible to institute checks on the supply chain all the way through manufacture. Rather than producing fakes from scratch, counterfeiters can use a company's own products against itself by bribing manufacturers and assemblers in the supply chain to build too many parts. Overbuilding is a significant risk in a supply chain that relies extensively on third parties, all the way from wafer fabs through to contract electronics manufacturers.

Some security specialists recommend multistage provisioning, in which elements of the ID are programmed at different points during manufacture. At wafer sort, each chip may receive a unique ID. When a chip is packaged, it receives another part and, when it is assembled into a subsystem, the key is completed. Only if the earlier steps are complete and correct can the final part be used.
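One way to picture multistage provisioning is as a key assembled from shares, each added at a different stage. The sketch below is purely illustrative: the stage names, the `Part` class and the choice to hash the shares together with SHA-256 are assumptions for the example, not a description of any real provisioning flow.

```python
import hashlib
import secrets

# Hypothetical stage names, in the order they must happen.
STAGES = ("wafer_sort", "packaging", "assembly")


class Part:
    def __init__(self):
        self.shares = {}

    def provision(self, stage: str, share: bytes) -> None:
        idx = STAGES.index(stage)
        # Refuse to program a stage if any earlier stage was skipped.
        if any(s not in self.shares for s in STAGES[:idx]):
            raise RuntimeError(f"cannot provision {stage}: earlier stage missing")
        self.shares[stage] = share

    def final_key(self):
        # The key only exists once every stage has contributed its share.
        if any(s not in self.shares for s in STAGES):
            return None
        digest = hashlib.sha256()
        for stage in STAGES:
            digest.update(self.shares[stage])
        return digest.digest()


complete = Part()
for stage in STAGES:
    complete.provision(stage, secrets.token_bytes(16))

overbuilt = Part()  # e.g. a die that left the flow after wafer sort
overbuilt.provision("wafer_sort", secrets.token_bytes(16))

print("complete part has a key:", complete.final_key() is not None)
print("partly provisioned part has a key:", overbuilt.final_key() is not None)
```

In this model, a part that leaves the official flow early never acquires a usable key, which is the property that makes overbuilding less attractive.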

Another option, which removes the need to program secret keys during manufacture – although it offers less flexibility in terms of provisioning – is to use the chip's natural attributes as an ID. Variability has always been a challenge to chip designers, demanding close attention to analogue circuits in particular to ensure that tiny changes in transistor and interconnect behaviour do not cause problems. The physically unclonable function (PUF) harnesses this variability.

The idea is that cells in regular structures on an IC will have subtly different behaviours, even between neighbouring cells. For example, there will be small differences in threshold voltage caused by the changes in dopant concentrations within the channel of each transistor.

Normally, the read-write circuitry in an SRAM, for example, will be set at a tolerance to avoid these threshold voltage differences causing problems. But a PUF circuit can measure the differences. Although it is possible to measure the changes in threshold directly, one common technique is to time the delay of logic gates – which will be affected by those threshold shifts. Another technique used for SRAM PUFs is to read out the state of the memory array after power up: the different threshold voltages will determine whether each cell stores a 0 or 1 naturally. The values collected from an array of cells are then used to compute an ID that is statistically likely to be practically unique and extremely difficult for an outsider to predict or obtain directly, at least in principle.
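A toy simulation helps to show why the power-up state of an SRAM behaves like a fingerprint. The model below is an assumption made for illustration: each cell gets a fixed random imbalance (standing in for threshold-voltage mismatch) and every power-up adds a small amount of noise. The array size and the two standard deviations are arbitrary values, not measured figures.

```python
import random

N_CELLS = 256       # hypothetical array size
MISMATCH_SD = 1.0   # spread of the built-in cell imbalance (arbitrary units)
NOISE_SD = 0.1      # read-to-read noise (temperature, supply and so on)


def make_chip(rng):
    """Fix one imbalance value per cell; this is the chip's fingerprint."""
    return [rng.gauss(0, MISMATCH_SD) for _ in range(N_CELLS)]


def power_up(chip, rng):
    """Each cell settles to 1 or 0 according to its imbalance plus noise."""
    return [1 if m + rng.gauss(0, NOISE_SD) > 0 else 0 for m in chip]


def hamming(a, b):
    """Number of bit positions in which two readings differ."""
    return sum(x != y for x, y in zip(a, b))


rng = random.Random(42)
chip_a, chip_b = make_chip(rng), make_chip(rng)
read_a1, read_a2 = power_up(chip_a, rng), power_up(chip_a, rng)
read_b = power_up(chip_b, rng)

print("same chip, two power-ups:", hamming(read_a1, read_a2), "bits differ")
print("two different chips:     ", hamming(read_a1, read_b), "bits differ")
```

Under these assumptions, two readings of the same chip differ in only a small fraction of bits, while readings from different chips differ in roughly half of them.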

Because the circuitry will be subject to changes in temperature and supply voltage, the ID is never a fixed number, but will vary within a range. The PUF circuitry needs to generate a reliable result from that fuzzy data, often using techniques similar to those employed for error correction in memories. As with systems based on secret keys, the PUF ID should only be used in a challenge-response protocol. As a result, imitating a legitimate device should be extremely difficult. But security experts remain unconvinced as to the unclonability of PUFs and have come up with several ways to attack implementations.
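As an illustration of turning fuzzy readings into a stable value, the sketch below uses a simple repetition code with public "helper data". This is one possible approach chosen for brevity, and real designs generally use stronger error-correcting codes. The repetition factor `REP` and the function names are assumptions for the example.

```python
REP = 5  # each key bit is spread over 5 PUF bits (hypothetical choice)


def enroll(puf_bits, key_bits):
    """Done once at manufacture: returns helper data that can be stored openly."""
    if len(puf_bits) != len(key_bits) * REP:
        raise ValueError("need REP PUF bits per key bit")
    codeword = [b for b in key_bits for _ in range(REP)]
    return [c ^ p for c, p in zip(codeword, puf_bits)]


def reconstruct(noisy_puf_bits, helper):
    """Done in the field: majority vote removes a few flipped bits per group."""
    noisy_codeword = [h ^ p for h, p in zip(helper, noisy_puf_bits)]
    groups = [noisy_codeword[i:i + REP]
              for i in range(0, len(noisy_codeword), REP)]
    return [1 if sum(g) > REP // 2 else 0 for g in groups]


key = [1, 0, 1, 1, 0, 0, 1, 0]
reference = [(i * 7 + 3) % 2 ^ (i % 3 == 0) for i in range(len(key) * REP)]
helper = enroll(reference, key)

# A later reading of the same PUF with a few bits flipped by noise.
noisy = list(reference)
for position in (0, 7, 12, 13, 38):
    noisy[position] ^= 1

recovered = reconstruct(noisy, helper)
print("key recovered despite noise:", recovered == key)
```

With five-fold repetition, up to two flipped bits in any group of five are corrected; three or more flips in one group would produce a wrong key bit.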

One technique is side-channel analysis, in which an attacker monitors subtle power fluctuations or EM radiation from the target device to try to work out what it is doing when it computes a response. This is a common attack used against high-value chips that contain secret keys – such as those used to decrypt satellite and cable TV signals. Side-channel analysis can also be used against PUF circuitry, although so far there have been very few published attacks.

Most PUF attacks have focused on the lack of entropy that some implementations exhibit and the ability of attackers to build self-learning models that, after training on the real device across several hundred challenge-response transactions, can predict its future behaviour.
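The modelling attack can be demonstrated on a simulated device. The sketch below assumes a commonly used additive delay model of an arbiter PUF, in which the response is the sign of a weighted sum of challenge-derived features. It trains a plain perceptron as a simple stand-in for the machine-learning methods an attacker might use. The stage count, the number of training pairs and all function names are assumptions for the example, and the "device" is a simulation rather than real silicon.

```python
import random

N_STAGES = 16  # hypothetical; kept small so the example runs quickly


def features(challenge):
    """Parity transform used by the additive delay model."""
    phi, prod = [], 1
    for bit in reversed(challenge):
        prod *= 1 - 2 * bit       # maps bit 0/1 to +1/-1 and accumulates
        phi.append(prod)
    phi.reverse()
    return phi + [1]              # constant term for the arbiter's offset


def make_puf(rng):
    """Simulated device: hidden per-stage delay differences."""
    weights = [rng.gauss(0, 1) for _ in range(N_STAGES + 1)]
    return lambda c: 1 if sum(w * x for w, x in zip(weights, features(c))) > 0 else 0


def predict(model, challenge):
    return 1 if sum(w * x for w, x in zip(model, features(challenge))) > 0 else 0


def train_model(crps, epochs=30):
    """Perceptron: nudge the weights whenever a recorded response is mispredicted."""
    model = [0.0] * (N_STAGES + 1)
    for _ in range(epochs):
        for challenge, response in crps:
            if predict(model, challenge) != response:
                sign = 1 if response == 1 else -1
                model = [w + sign * x for w, x in zip(model, features(challenge))]
    return model


def random_challenge(rng):
    return [rng.randint(0, 1) for _ in range(N_STAGES)]


rng = random.Random(1)
puf = make_puf(rng)
observed = [(c, puf(c)) for c in (random_challenge(rng) for _ in range(500))]
model = train_model(observed)

unseen = [random_challenge(rng) for _ in range(1000)]
accuracy = sum(predict(model, c) == puf(c) for c in unseen) / len(unseen)
print(f"model accuracy on unseen challenges: {accuracy:.1%}")
```

The attacker in this sketch never sees the hidden delays, only challenge-response pairs, yet ends up with a model that answers most new challenges correctly.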

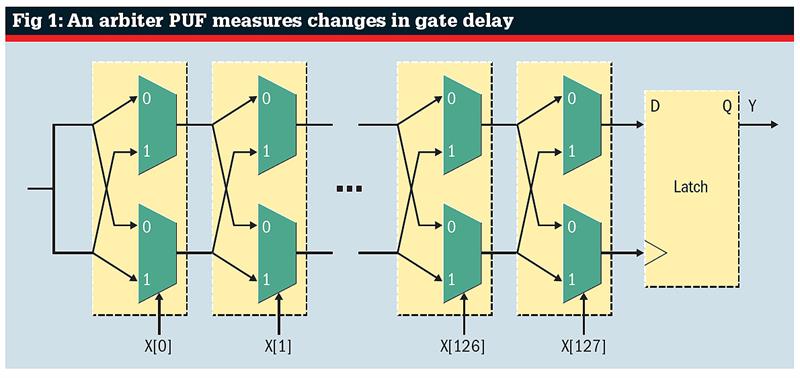

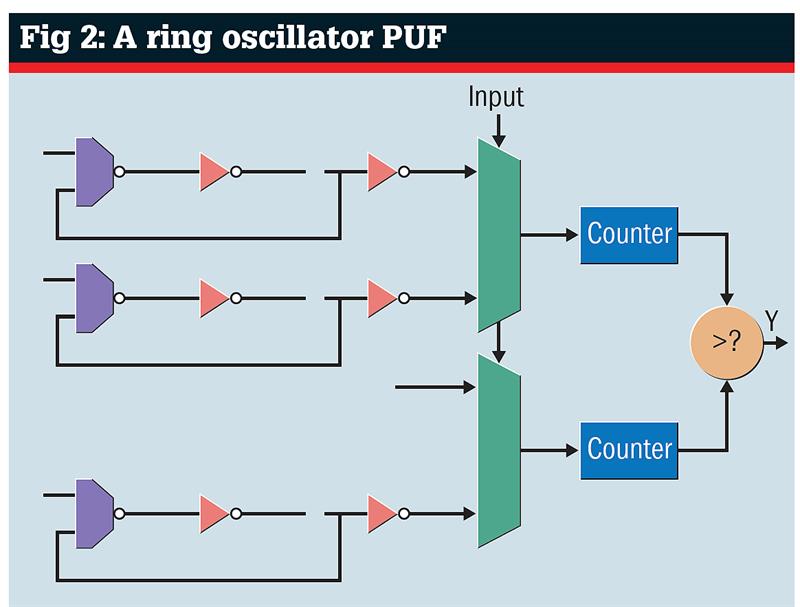

Researchers at the Technical University of Darmstadt found that arbiter-based PUFs – which analyse the delay through a long logic tree – have less entropy and so are more predictable than those based on ring oscillators or SRAM properties. The ring oscillator and SRAM also showed much greater variation across chips, so the result from analysing one PUF implementation could not be used on all the parts that employ it. As a result, attacks on arbiter PUFs have tended to be more successful.

Experience with side-channel analysis has shown that dedicated attackers can extract keys from supposedly secure devices. However, many of these attacks rely on the ability to collect large amounts of data that can support statistical analysis. Chips that monitor how they are being used and 'expire' if too many attempts are made to access a particular circuit can be highly effective against these attacks. Even these countermeasures can be vulnerable, though: a common attack is to use lasers or clock glitches to force the counters to reset, or simply to stop them ticking over every time a challenge is processed.
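The 'expiry' countermeasure amounts to a usage counter wrapped around the response function. The following sketch is a software analogy only: the `GuardedDevice` class and its query limit are hypothetical, and a real chip would implement the counter in tamper-resistant hardware.

```python
class GuardedDevice:
    """Wraps a response function and refuses to answer after too many queries."""

    def __init__(self, respond, max_queries: int):
        self._respond = respond
        self._max_queries = max_queries
        self._used = 0

    def query(self, challenge):
        if self._used >= self._max_queries:
            raise PermissionError("device expired: query limit reached")
        # Count the attempt before doing the work, so an interrupted
        # operation still costs the caller one query.
        self._used += 1
        return self._respond(challenge)


device = GuardedDevice(respond=lambda c: c[::-1], max_queries=3)
answers = [device.query(b"abc") for _ in range(3)]

try:
    device.query(b"abc")
    expired = False
except PermissionError:
    expired = True

print("answers before expiry:", len(answers))
print("fourth query refused:", expired)
```

The glitch attacks described above target exactly the increment step: if the counter can be prevented from advancing, the limit never triggers.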

As IP is encapsulated more by software and reprogrammable hardware, manufacturers will seek to find ways to protect their assets. Although not foolproof, unique IDs provide a useful weapon and further research into their security should make attacks more difficult and less lucrative to carry out.

In an arbiter PUF, subtle differences in gate delay cause the outputs of two parallel logic paths to differ in timing. The challenge code switches the muxes at each stage so an attacker cannot read the PUF state directly.

A ring oscillator PUF uses differences in a count to establish the output state of a comparator. The bits from a challenge code activate different oscillators feeding into each mux.