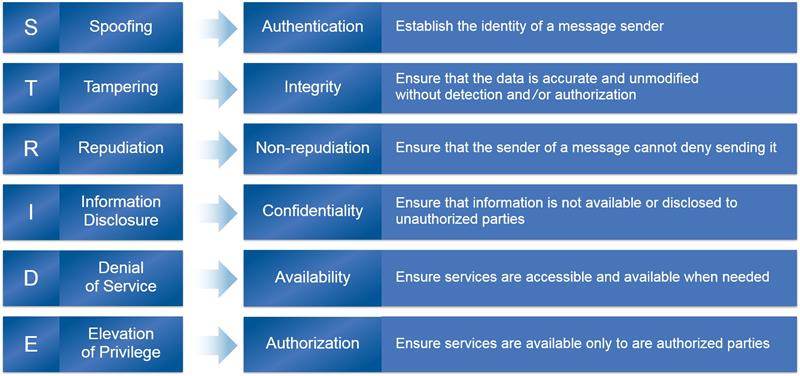

The STRIDE threat classification model, originally developed by Microsoft, groups the security threats that an IoT device, or the user of that device, may face into six categories: Spoofing, Tampering, Repudiation, Information disclosure, Denial of service and Elevation of privilege.

The security functions and resources required to protect an IoT device against these security threats are available in specialised discrete ICs such as:

- a secure element – an SoC combining an MCU with on-board cryptographic capabilities, secure memory and interfaces

- secure non-volatile memory ICs, which typically feature a cryptographic engine for pairing the memory securely to authorised devices

However, the use of such discrete ICs in IoT devices increases their component count, complexity and bill-of-materials cost compared to designs that use the integrated security capabilities of the host MCU (or in some cases an applications processor). The crucial question for IoT device designers, then, is whether the capabilities of the host MCU are sufficient to counter the threats described in the STRIDE model.

Layers of protection

IoT devices are vulnerable by virtue of their networked operation. A connected wristband monitoring a patient’s heartbeat and blood oxygen levels, for instance, might continually send sensitive private data over a wireless link to a medical application hosted by a cloud service provider.

It is useful to think of the vulnerability in this type of device, and therefore the protection it requires, in terms of layers. The first layer, for example, is the personal area network connection: typically a Bluetooth Low Energy radio link to the smartphone or tablet with which the wristband is paired.

An extension of this layer might be the Wi-Fi link provided by the smartphone or tablet to a home router or gateway. The second layer might be the cloud platform, such as Microsoft’s Azure or Amazon’s AWS; and the third is the application itself running in the cloud. Further layers can be defined, depending on the architecture.

The wristband’s user naturally expects complete peace of mind about security. Threats from the STRIDE model to consider here include:

- Spoofing: another device pretends to be the user’s wristband, and thereby gains access to its application data in the cloud

- Tampering: an attacker falsifies the heart-rate or blood-oxygen readings provided by the wristband to the cloud-based application

- Information disclosure: an attacker snoops on the patient’s private medical data

- Denial of service: for example, a temporary inability to use the cloud service. If the patient relies on the cloud-based application to alert them when care or medication is urgently required, denial of service could have serious health consequences.

The wristband might be vulnerable to one or more of these threats at each of the layers described above. Nor is it sufficient to rely on a single security mechanism to cover the entire connected system. For instance, as recently reported, a single vulnerability in Yahoo!’s security allowed intruders to use forged cookies to impersonate valid users (a spoofing attack) and then access those users’ accounts without a password.

Such a spoofing attack on a cloud service provider might expose the wristband’s channel to the cloud. It is therefore essential that the mechanisms protecting the other layers (the application hosted in the cloud, and the Wi-Fi and Bluetooth Low Energy links that connect the wristband to the internet) are implemented independently. For instance, the key used to encrypt the heart-rate data sent to the cloud-based application should be different from the key the wristband uses to authenticate the cloud-based service.
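One way to apply this key-separation principle, sketched here as an assumption rather than as the author's design, is to derive an independent key for each purpose from a single device secret using HKDF (RFC 5869). The purpose labels and the helper `derive_purpose_keys` are hypothetical; only the HKDF extract/expand construction follows the RFC. Because each key is bound to a different `info` label, learning one derived key does not reveal the others (although a leak of the device secret itself would expose all of them).

```python
import hashlib
import hmac


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869): condense input keying material into a pseudorandom key."""
    if not salt:
        salt = bytes(hashlib.sha256().digest_size)  # RFC default: HashLen zero bytes
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF-Expand (RFC 5869): stretch the PRK into `length` bytes bound to `info`."""
    hash_len = hashlib.sha256().digest_size
    if length > 255 * hash_len:
        raise ValueError("requested output too long for HKDF-SHA256")
    okm = b""
    block = b""  # T(0) is the empty string
    counter = 1
    while len(okm) < length:
        # T(n) = HMAC(PRK, T(n-1) | info | n)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_purpose_keys(device_secret: bytes, salt: bytes) -> dict:
    """Derive one independent 256-bit key per purpose (labels are hypothetical)."""
    prk = hkdf_extract(salt, device_secret)
    purposes = {
        "data_encryption": b"wristband/cloud-app/data-encryption",
        "service_auth": b"wristband/cloud-service/authentication",
        "ble_link": b"wristband/ble-link",
    }
    return {name: hkdf_expand(prk, label) for name, label in purposes.items()}
```

With this scheme, `derive_purpose_keys(secret, salt)["data_encryption"]` would key the encryption of heart-rate data, while the separate `"service_auth"` key would be used only to authenticate the cloud service.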

Requirement for core security capabilities

For each of the six STRIDE categories of threats, there are corresponding types of security requirements (see Figure 1). Furthermore, an IoT device’s security relies on three elements:

- Security policies. These define which security requirements are used to provide system, network and/or data access.

- Cryptography. Mathematical algorithms used to implement different types of security requirements.

- Tamper resistance. The ability of a system to perform cryptography and enforce its policies without leaking sensitive information or being altered.

Figure 1: Each STRIDE threat requires a protecting counter-measure
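Figure 1 itself is not reproduced here. As a sketch, the widely published STRIDE mapping of each threat to the security property that counters it can be written as a lookup table; the property names may be worded differently from those in the figure, and `requirements_for` is a hypothetical helper.

```python
from enum import Enum


class Threat(Enum):
    SPOOFING = "Spoofing"
    TAMPERING = "Tampering"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information disclosure"
    DENIAL_OF_SERVICE = "Denial of service"
    ELEVATION_OF_PRIVILEGE = "Elevation of privilege"


# Security property that counters each threat, per the commonly cited STRIDE mapping.
COUNTERMEASURE = {
    Threat.SPOOFING: "Authentication",
    Threat.TAMPERING: "Integrity",
    Threat.REPUDIATION: "Non-repudiation",
    Threat.INFORMATION_DISCLOSURE: "Confidentiality",
    Threat.DENIAL_OF_SERVICE: "Availability",
    Threat.ELEVATION_OF_PRIVILEGE: "Authorisation",
}


def requirements_for(threats):
    """Return the requirements needed to counter the given threats, in STRIDE order."""
    wanted = set(threats)
    return [COUNTERMEASURE[t] for t in Threat if t in wanted]
```

Applied to the four wristband threats listed earlier (spoofing, tampering, information disclosure and denial of service), the table yields authentication, integrity, confidentiality and availability as the requirements to meet.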

Typically, the security policies for an IoT device and its users are decided first. Next, the system designer must decide whether the security requirements that rely on cryptography and tamper resistance can be implemented adequately in the device’s MCU. This raises the following questions:

- can cryptographic keys be stored securely?

- are hardware acceleration blocks for standard cryptographic algorithms such as RSA, ECC, AES and SHA available?

- is there a true random number generator? Is it certified?

- is it possible to detect physical tampering with the IoT device?
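On the question of certification: a TRNG validated against NIST SP 800-90B is required to run continuous health tests on its noise source, and the Repetition Count Test is one of the approved tests that standard describes. The sketch below implements that test in software purely for illustration. The per-sample min-entropy estimate `H` and the false-alarm rate `alpha` = 2^-20 are assumptions; in practice the test runs inside the TRNG hardware or its driver, and passing it says nothing on its own about certification.

```python
import math


def rct_cutoff(min_entropy_per_sample: float, alpha: float = 2 ** -20) -> int:
    """Cutoff C = 1 + ceil(-log2(alpha) / H) for the Repetition Count Test."""
    return 1 + math.ceil(-math.log2(alpha) / min_entropy_per_sample)


def repetition_count_test(samples, min_entropy_per_sample: float) -> bool:
    """Return True (pass) unless some value repeats C or more times in a row."""
    cutoff = rct_cutoff(min_entropy_per_sample)
    iterator = iter(samples)
    try:
        last = next(iterator)
    except StopIteration:
        return True  # no samples, nothing to test
    run = 1
    for sample in iterator:
        if sample == last:
            run += 1
            if run >= cutoff:
                return False  # symptom of a stuck or failing noise source
        else:
            last, run = sample, 1
    return True
```

For a binary source assumed to deliver one bit of min-entropy per sample, the cutoff is 21, so 21 identical bits in a row would flag a failure.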

To date, these functions have been available mainly in discrete, specialised ICs. However, the explosion of new IoT devices is creating demand for MCUs that integrate these and other functions. Cypress is addressing this demand with its PSoC 6 MCU family.

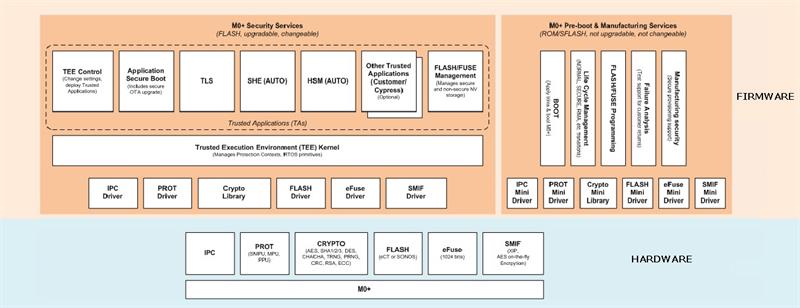

PSoC 6 MCUs have a dual-core architecture:

- a high-performance ARM Cortex-M4 core, which can complete computationally intensive tasks quickly and then drop into a low-power shutdown mode;

- an ARM Cortex-M0+ core, which can run simple always-on functions, such as operating as a sensor hub or managing a Bluetooth Low Energy connection, at ultra-low power levels.

The PSoC 6 MCU architecture uses its two cores to provide memory that is secured at the hardware level.

A single-core MCU operates within a single address space. Secure data, such as keys, is stored in this space alongside regular firmware, and a real-time supervisory function (typically part of an RTOS) manages access to the secure addresses during normal operation so that only authorised programs can reach them.

In PSoC 6, access control can be configured at an architectural level before the MCU enters normal operation. For example, a section of shared memory can be configured so that only the Cortex-M0+ core can access it. In this way, PSoC 6 MCUs offer hardware-based secure memory that adds a further layer of protection against unauthorised access (see Figure 2).
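The configure-then-lock idea can be modelled in a few lines of software. This is a hypothetical sketch, not the Cypress API: `ProtectionUnit`, `Region`, the bus-master labels `"CM0+"` and `"CM4"` and the addresses are all illustrative. Real hardware enforces such rules on every bus transaction rather than through a function call.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Region:
    name: str
    start: int
    size: int
    allowed_masters: frozenset  # bus masters (hypothetical labels) permitted access

    def contains(self, address: int) -> bool:
        return self.start <= address < self.start + self.size


@dataclass
class ProtectionUnit:
    """Hypothetical model of memory access control configured at boot, then locked."""
    regions: list = field(default_factory=list)
    locked: bool = False

    def add_region(self, region: Region) -> None:
        if self.locked:
            raise PermissionError("configuration is locked during normal operation")
        self.regions.append(region)

    def lock(self) -> None:
        """Called once boot-time configuration is complete."""
        self.locked = True

    def check(self, master: str, address: int) -> bool:
        """Deny access if the address lies in a region this master may not use."""
        for region in self.regions:
            if region.contains(address):
                return master in region.allowed_masters
        return True  # unprotected memory is shared by both cores
```

For example, after `pu.add_region(Region("key_store", 0x1000_0000, 0x1000, frozenset({"CM0+"})))` and `pu.lock()`, a read of the key store by `"CM0+"` is allowed, a read by `"CM4"` is refused, and any later attempt to add a region raises an error. The point of the design is that, unlike an RTOS supervisor, the rules cannot be changed by software once normal operation begins.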

Figure 2: The Cortex-M0+ core in the PSoC 6 dual-core architecture may be used to provide security services

PSoC 6 MCUs also include a dedicated hardware security block that accelerates standard cryptographic algorithms such as AES, RSA, ECC and SHA, and provides a true random number generator. As with on-chip memory, access to this block can be controlled at an architectural level.
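As an illustration of the kind of operation such a block speeds up, the sketch below counters the tampering threat described earlier by appending an HMAC-SHA256 tag to each wristband reading, computed here in software with Python's `hmac` module where an MCU would offload the SHA-256 to hardware. The message layout and the functions `seal_reading` and `open_reading` are hypothetical. The tag provides integrity only, not confidentiality; the sequence number is included so that a receiver could reject replayed readings, but that check is not shown.

```python
import hashlib
import hmac
import struct

PAYLOAD_FORMAT = ">IHB"  # sequence number, heart rate (bpm), SpO2 (%)
PAYLOAD_LEN = struct.calcsize(PAYLOAD_FORMAT)  # 7 bytes
TAG_LEN = hashlib.sha256().digest_size  # 32 bytes


def seal_reading(key: bytes, seq: int, heart_rate_bpm: int, spo2_pct: int) -> bytes:
    """Pack a reading and append an HMAC-SHA256 tag over it."""
    payload = struct.pack(PAYLOAD_FORMAT, seq, heart_rate_bpm, spo2_pct)
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return payload + tag


def open_reading(key: bytes, message: bytes):
    """Verify the tag; return (seq, heart_rate_bpm, spo2_pct), or None if altered."""
    if len(message) != PAYLOAD_LEN + TAG_LEN:
        return None
    payload, tag = message[:PAYLOAD_LEN], message[PAYLOAD_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        return None
    return struct.unpack(PAYLOAD_FORMAT, payload)
```

Flipping a single bit of a sealed reading in transit, for example in the heart-rate field, causes `open_reading` to return `None`.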

Finally, functions to detect tampering with a product’s enclosure are integrated into PSoC 6: programmable analogue blocks may be configured to perform voltage and current sensing and clock monitoring, while Cypress’ CapSense capacitive-sensing technology may be used to detect unauthorised attempts to open the enclosure.
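The decision logic that firmware might apply to these measurements can be sketched as a set of window checks. This is an illustrative assumption, not Cypress code: the monitored quantities, their limits, the capacitance threshold and the function `tamper_flags` are all hypothetical, and a real design would also filter noisy readings and define how the device responds to a flag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    """Acceptable range for one monitored quantity."""
    low: float
    high: float

    def ok(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical limits: supply voltage (V), supply current (mA), core clock (MHz).
LIMITS = {
    "vdd_v": Window(3.0, 3.6),
    "idd_ma": Window(0.0, 25.0),
    "clk_mhz": Window(47.5, 52.5),
}


def tamper_flags(readings: dict, lid_capacitance_delta: float,
                 lid_threshold: float = 0.2) -> list:
    """Return the names of the checks that indicate possible tampering."""
    flags = [name for name, window in LIMITS.items()
             if not window.ok(readings[name])]
    # Capacitive sensor along the enclosure seam: a large change suggests it was opened.
    if abs(lid_capacitance_delta) > lid_threshold:
        flags.append("enclosure_opened")
    return flags
```

A supply voltage pulled below its window while the enclosure's capacitance shifts sharply would, for instance, produce `["vdd_v", "enclosure_opened"]`.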

Security purpose-built for the IoT

This combination of integrated hardware-based secure storage, cryptographic acceleration and tamper detection has not previously been available in an MCU for mainstream IoT applications. The new demands of the IoT call for MCUs purpose-built for it, with the security features, low-power attributes and processing capabilities that IoT devices need.

Author profile:

Jack Ogawa is senior director of marketing with Cypress Semiconductor.