The benefit has been a dramatic improvement in the efficiency of electronics design. It is a shift that is now being replicated in software, with developers looking to make more use of reusable modules as opposed to relying primarily on the lines of code they write themselves.

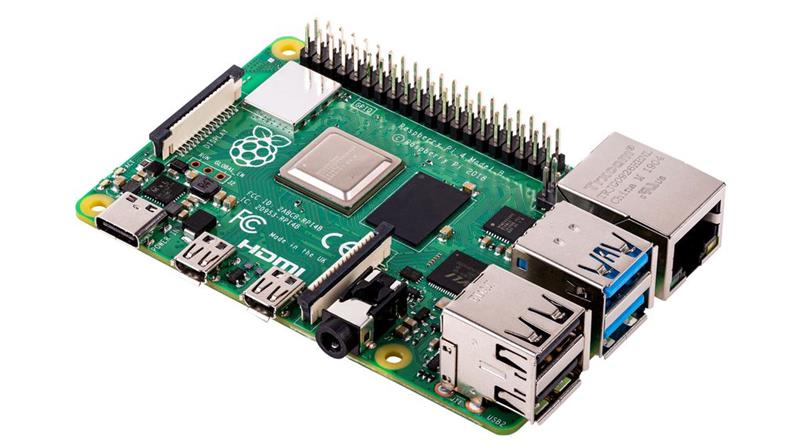

A shift to modular design brings many advantages. One is the ability to share in the economies of scale that come from platforms that attract many customers. Industrial users have long experience with modular hardware. The Versa Module Eurocard (VME) and CompactPCI standards gave integrators and original equipment manufacturers (OEMs) working in low-volume markets access to high-performance computing. They could customise a computer's capabilities extensively without having to invest time and effort in high-end printed circuit board (PCB) design. Since then, Moore's Law has delivered enormous gains in functionality while also reducing the cost of individual parts. The Raspberry Pi single-board computer is a prime example.

By leveraging the economies of scale that come with a smartphone System on a Chip (SoC) platform, the organisation behind Raspberry Pi was able to deliver a far more capable product than a design created from scratch for educational use would have allowed. The non-recurring engineering (NRE) costs incurred by the silicon provider were readily absorbed by the SoC's primary target market, delivering much greater value to Raspberry Pi's own target users. That cost advantage was passed on to the industrial sector as well. Integrators and OEMs have taken advantage of the modularity of the Raspberry Pi platform, using the HAT (Hardware Attached on Top) add-on board format to build their own custom interface modules.
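The economics can be made concrete with a simple amortisation formula: effective cost per unit = recurring unit cost + NRE / volume. The sketch below uses invented figures (a 20 million NRE bill and a recurring cost of 5 per chip, both hypothetical) purely to show how quickly the NRE share shrinks as volume grows, which is why a chip designed for a high-volume market can be so cheap for a low-volume user.

```python
def per_unit_cost(nre: float, unit_cost: float, volume: int) -> float:
    """Effective cost per unit when a one-off NRE bill is spread across `volume` units."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return unit_cost + nre / volume

# Hypothetical figures, chosen only for illustration.
NRE = 20_000_000.0   # one-off silicon design cost
UNIT = 5.0           # recurring manufacturing cost per chip

for volume in (10_000, 1_000_000, 100_000_000):
    print(f"{volume:>11,} units -> {per_unit_cost(NRE, UNIT, volume):,.2f} per chip")
```

At 10,000 units the NRE dominates; at smartphone-scale volumes it becomes a small fraction of the unit cost.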

Using Pi modules frees engineering teams from having to source comparable components and design them onto custom PCBs, which often require more time-consuming signal-integrity and functional checks than the front-end HAT modules do. Very often, those custom HAT modules can use relatively simple two- or four-layer PCBs.

Software modules

A similar trend to modularise software has emerged. Engineers can now focus purely on the elements of an application where they can add value. This trend is driven not just by economies of scale and the ability of some suppliers to amortise NRE effectively, but also by the broader move toward networked integration and service-driven business models. An embedded system is often not complete today unless it forms part of a larger system of systems, such as the Internet of Things (IoT). In this environment, a device may help deliver one or more services, many of which are likely to change during the lifetime of the hardware that supports them. The combination of the IoT and the cloud is yielding new business models that exploit these capabilities, such as software as a service (SaaS) and pay-per-use. Flexibility has become a key criterion in this commercial environment, and it pushes implementers toward more modular structures.
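One way to picture a device whose services change over its lifetime is a registry that maps service names to handlers, so a handler can be added or replaced without touching the code that calls it. The sketch below is a minimal illustration under that assumption; `ServiceRegistry` and the two temperature handlers are hypothetical names, not part of any particular IoT framework.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], dict]

class ServiceRegistry:
    """Minimal registry mapping service names to handlers that can be swapped at runtime."""

    def __init__(self) -> None:
        self._services: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        # Registering an existing name replaces the old handler, like a field update.
        self._services[name] = handler

    def unregister(self, name: str) -> None:
        self._services.pop(name, None)

    def call(self, name: str, request: dict) -> dict:
        handler = self._services.get(name)
        if handler is None:
            return {"error": f"service '{name}' not available"}
        return handler(request)

    def names(self) -> List[str]:
        return sorted(self._services)

# Hypothetical services for a connected sensor node.
def temperature_v1(req: dict) -> dict:
    return {"celsius": req["raw"] / 10}

def temperature_v2(req: dict) -> dict:
    # A later revision adds a calibration offset and a Fahrenheit field.
    c = req["raw"] / 10 - 0.5
    return {"celsius": c, "fahrenheit": c * 9 / 5 + 32}

registry = ServiceRegistry()
registry.register("temperature", temperature_v1)
print(registry.call("temperature", {"raw": 250}))
registry.register("temperature", temperature_v2)   # service updated in the field
print(registry.call("temperature", {"raw": 250}))
```

Callers only ever use the service name, so the implementation behind it can evolve as the business model around the device changes.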

Modularity begins with the operating system, which supports abstractions that are vital to building flexible, modular environments. Typically, an operating system provides a set of services that range from simple input/output to full networking stacks, all accessed through documented application-programming interfaces (APIs). As long as the services continue to support those APIs, the code that delivers them can change without affecting the applications that use them. This is as true for the simple real-time scheduler FreeRTOS, shipped with many microcontroller development tools, as it is for more complex commercial RTOS implementations such as Wind River's VxWorks, which is widely used in critical infrastructure and devices.
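The principle that an implementation can change while the API stays fixed can be sketched as follows. `MessageQueue` is a hypothetical interface loosely inspired by the send/receive queues that RTOSes typically offer; it is not the FreeRTOS or VxWorks API. The application function is written only against the interface and works unchanged with either backing implementation.

```python
from collections import deque
from typing import Deque, List, Optional, Protocol

class MessageQueue(Protocol):
    """The documented API the application relies on (hypothetical, for illustration)."""
    def send(self, item: int) -> bool: ...
    def receive(self) -> Optional[int]: ...

class DequeQueue:
    """Implementation A: unbounded queue backed by collections.deque."""
    def __init__(self) -> None:
        self._q: Deque[int] = deque()

    def send(self, item: int) -> bool:
        self._q.append(item)
        return True

    def receive(self) -> Optional[int]:
        return self._q.popleft() if self._q else None

class RingBufferQueue:
    """Implementation B: fixed-capacity ring buffer, closer to a statically allocated RTOS queue."""
    def __init__(self, capacity: int) -> None:
        self._buf = [0] * capacity
        self._head = 0    # index of the next item to read
        self._count = 0   # number of items currently stored

    def send(self, item: int) -> bool:
        if self._count == len(self._buf):
            return False  # full: the caller decides how to handle back-pressure
        self._buf[(self._head + self._count) % len(self._buf)] = item
        self._count += 1
        return True

    def receive(self) -> Optional[int]:
        if self._count == 0:
            return None
        item = self._buf[self._head]
        self._head = (self._head + 1) % len(self._buf)
        self._count -= 1
        return item

def application(q: MessageQueue) -> List[int]:
    """Application code that depends only on the API, not on either implementation."""
    for reading in (10, 20, 30):
        q.send(reading)
    out = []
    while (item := q.receive()) is not None:
        out.append(item)
    return out

print(application(DequeQueue()))
print(application(RingBufferQueue(capacity=8)))
```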

Linux and other operating systems go further, using memory management to isolate tasks from each other. One weakness of simple RTOS structures is that all tasks run in a single, unpartitioned memory space. A bug or malicious behaviour in one task can overwrite data or code belonging to another, leading to a system crash or other undesired outcomes. Linux uses virtual addressing, mediated by a hardware memory-management unit (MMU), to prevent processes from accessing each other's memory. They can interact only through operating-system APIs or inter-process protocols built on top of those APIs.
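The isolation described above can be observed directly on Linux or another POSIX system. In this sketch, which assumes a POSIX host because it relies on `os.fork`, a child process changes its copy of a variable, but the parent's copy is untouched; the only way the value reaches the parent is through a kernel-mediated pipe.

```python
import os
from typing import Tuple

def demonstrate_isolation() -> Tuple[int, str]:
    """Show that a child process cannot overwrite the parent's memory; data crosses only via an OS pipe."""
    counter = [0]                    # lives in this process's address space
    read_fd, write_fd = os.pipe()    # kernel-mediated channel between the two processes
    pid = os.fork()                  # POSIX only: the child gets its own (copy-on-write) address space
    if pid == 0:                     # child process
        os.close(read_fd)
        counter[0] = 999             # modifies the child's private copy only
        os.write(write_fd, str(counter[0]).encode())
        os.close(write_fd)
        os._exit(0)                  # exit immediately without running parent-side cleanup
    os.close(write_fd)               # parent process
    message = os.read(read_fd, 64).decode()
    os.close(read_fd)
    os.waitpid(pid, 0)
    return counter[0], message

parent_value, from_child = demonstrate_isolation()
print(f"parent still sees {parent_value}; child reported {from_child} over the pipe")
```

Here a Python process stands in for a task; the MMU-backed separation of address spaces is what keeps the child's write from reaching the parent.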

Virtual memory addressing is not an absolute requirement for task isolation. Some microcontroller architectures, including several members of the Arm Cortex-M and Cortex-R families, can enforce memory protection in a flat memory space using a memory protection unit (MPU). Arm also provides TrustZone security extensions in a number of its processors, which make it possible to isolate sensitive software from user-level tasks. With this protection, it becomes easier to combine custom code with the growing range of off-the-shelf software modules that have been developed to handle common tasks.
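The idea of protection in a flat address space can be modelled as a table of regions, each with an address range and permissions, checked on every access. The sketch below is a deliberately simplified model: the region layout, the names and the non-overlapping rule are assumptions for illustration, and it does not reproduce the register interface or priority rules of any real Arm MPU.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Region:
    """One protection region in a flat address space (simplified model, not a real register layout)."""
    name: str
    base: int
    size: int
    writable: bool
    privileged_only: bool

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size

class MemoryFault(Exception):
    """Raised where real hardware would signal a memory-management fault."""

def check_access(regions: List[Region], addr: int, write: bool, privileged: bool) -> str:
    """Return the name of the region that permits the access, or raise MemoryFault."""
    for region in regions:  # regions are assumed not to overlap in this model
        if region.contains(addr):
            if region.privileged_only and not privileged:
                raise MemoryFault(f"unprivileged access to {region.name} at {addr:#010x}")
            if write and not region.writable:
                raise MemoryFault(f"write to read-only {region.name} at {addr:#010x}")
            return region.name
    raise MemoryFault(f"no region covers {addr:#010x}")

# Hypothetical memory map for a small microcontroller.
REGIONS = [
    Region("flash_code", 0x0800_0000, 0x0010_0000, writable=False, privileged_only=False),
    Region("kernel_ram", 0x2000_0000, 0x0000_2000, writable=True, privileged_only=True),
    Region("task_ram",   0x2000_2000, 0x0000_2000, writable=True, privileged_only=False),
]

print(check_access(REGIONS, 0x2000_2010, write=True, privileged=False))
try:
    check_access(REGIONS, 0x2000_0100, write=True, privileged=False)
except MemoryFault as fault:
    print("fault:", fault)
```

A user-level task can write its own RAM but faults when it strays into kernel memory or tries to overwrite code, which is the protection that makes mixing third-party modules with custom code safer.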

Integration of functions

Today, engineers have access to a range of free, open source software modules and protocol stacks available through GitHub, SourceForge and other services. Commercial stacks that offer greater support, additional functionality or certification for safety-critical applications are also on offer. The reference designs put together by silicon manufacturers often combine a range of open source and proprietary functions to help customers move from prototypes through to full product implementations. In some cases, the reference design implements a complete application that the end user can tune to their own needs.

Some tool vendors are taking advantage of the increasing modularity of software to build development environments that tune parameters and generate code automatically. These tools often use block-based representations of software that the developer assembles in a graphical user interface. One example is Microchip's MPLAB Code Configurator for its 8-, 16- and 32-bit PIC microcontroller families.
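To illustrate what "tune parameters and generate code" can mean, the sketch below takes a small parameter block for a UART, chooses a baud-rate divisor, checks the resulting error and emits C initialisation code. It assumes the common 16x-oversampling relation baud = f_clk / (16 × (n + 1)); the register name `UART_BRG` and the config keys are hypothetical, and this is not how MPLAB Code Configurator is implemented.

```python
from typing import Tuple

def uart_divisor(f_clk_hz: int, baud: int) -> Tuple[int, float, float]:
    """Choose divisor n for baud = f_clk / (16 * (n + 1)); return n, achieved baud and error in percent."""
    n = round(f_clk_hz / (16 * baud)) - 1
    if n < 0:
        raise ValueError("baud rate too high for this clock")
    actual = f_clk_hz / (16 * (n + 1))
    error_pct = (actual - baud) / baud * 100
    return n, actual, error_pct

def generate_uart_init(config: dict) -> str:
    """Emit C initialisation code from a parameter block, as a configurator back end might."""
    n, actual, err = uart_divisor(config["f_clk_hz"], config["baud"])
    if abs(err) > config.get("max_error_pct", 2.0):
        raise ValueError(f"baud error {err:.2f}% exceeds limit")
    return (
        f"/* Generated: {config['baud']} baud requested, {actual:.1f} achieved ({err:+.2f}%) */\n"
        f"void {config['name']}_init(void) {{\n"
        f"    UART_BRG = {n}u;   /* hypothetical register name */\n"
        f"}}\n"
    )

print(generate_uart_init({"name": "uart1", "f_clk_hz": 16_000_000, "baud": 9600}))
```

The value of such tools lies in this kind of routine arithmetic and validation being done consistently, leaving the developer to work at the level of parameters rather than register bits.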

Advanced applications, such as machine learning and image processing, are areas where users can benefit from the high NRE investment of specialists and avoid the years of development such software would require if they had to build it from scratch. Caffe, PyTorch and Google's TensorFlow make it possible to build, train and tune complex artificial intelligence (AI) models that can be integrated into embedded processing pipelines. For image processing, OpenCV is a widely used library that can be integrated into real-time applications. With the rise of machine learning, an increasingly common usage model is for OpenCV to pre-process image data before it is passed to an AI model built using Caffe or TensorFlow, with custom code used primarily to provide the real-time response to events the model detects.
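The division of labour described above (library pre-processing, a pre-built model, and a thin layer of custom event-handling code) can be sketched as a three-stage pipeline. In a real system the first stage would call OpenCV functions such as `cv2.resize` or `cv2.cvtColor` and the second would run a trained network; here both are hypothetical pure-Python stubs so that the structure, not the maths, is the focus.

```python
from typing import Callable, List, Sequence

Frame = List[List[int]]  # greyscale image as rows of 0-255 pixel values

def preprocess(frame: Frame) -> List[float]:
    """Stand-in for an OpenCV step: flatten the image and normalise pixels to 0.0-1.0."""
    return [px / 255.0 for row in frame for px in row]

def model(features: Sequence[float]) -> float:
    """Stand-in for a trained network: returns a 'detection score' (here just mean brightness)."""
    return sum(features) / len(features)

def run_pipeline(frame: Frame, threshold: float, on_event: Callable[[float], None]) -> bool:
    """Custom application code: pre-process, infer, then react if the score crosses the threshold."""
    score = model(preprocess(frame))
    if score >= threshold:
        on_event(score)
        return True
    return False

events: List[float] = []
dark = [[10, 20], [30, 40]]
bright = [[250, 240], [230, 255]]
run_pipeline(dark, 0.5, events.append)
run_pipeline(bright, 0.5, events.append)
print(f"{len(events)} event(s) raised")
```

Swapping either stub for a real library call leaves `run_pipeline`, the part the developer actually writes, unchanged.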

Bringing it all together

Developers now have access to cloud-oriented software modules and tools that integrate readily with common network stacks and RTOS implementations. This allows embedded systems of varying complexity to be integrated into the IoT. Avnet's IoT Connect Platform, for example, provides cloud-based processing for complex tasks such as AI. Because such systems are defined by software services running both in the cloud and on embedded devices, cloud providers such as Amazon Web Services and Microsoft Azure now offer a range of services that bring the two together, all leveraging the modularity of the software components they employ.
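Whichever cloud platform is chosen, the device side usually reduces to packaging readings into a structured message and handing it to the network stack or cloud SDK. The sketch below shows only that packaging step; the field names (`deviceId`, `ts`, `data`) form a hypothetical schema and are not taken from Avnet's platform, AWS or Azure.

```python
import json
import time
from typing import Optional

def build_telemetry(device_id: str, readings: dict, timestamp: Optional[float] = None) -> str:
    """Serialise sensor readings into a compact JSON message (hypothetical schema)."""
    message = {
        "deviceId": device_id,
        "ts": int(timestamp if timestamp is not None else time.time()),
        "data": readings,
    }
    # separators=(",", ":") drops whitespace to keep the payload small on constrained links;
    # sort_keys=True gives a stable field order, which simplifies testing and logging.
    return json.dumps(message, separators=(",", ":"), sort_keys=True)

payload = build_telemetry("sensor-42", {"temp_c": 24.5, "vibration_rms": 0.12}, timestamp=1_700_000_000)
print(payload)
```

Keeping message construction in its own small module means the transport, and even the cloud provider, can be changed without touching the code that gathers the readings.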

Modularisation is changing the skillset required of embedded software engineers. The balance of responsibilities is shifting from writing code to constructing flexible architectures from pre-existing modules, architectures that allow easy custom coding and runtime configuration as new services are deployed. By harnessing this modularity, OEMs and systems integrators can keep pace with customer demands that would be impossible to meet by traditional means.

Author details: Cliff Ortmeyer is Global Head of Technical Marketing, Farnell