The goal of such systems, as defined by Shapiro and Stockman in Computer Vision, is to 'make useful decisions about real physical objects and scenes based on sensed images'.

There are many opportunities to create value through the implementation of computer vision. Developers of security systems can now implement cameras which can identify vehicles by reading their number plate; vehicle manufacturers are designing systems which monitor how long the driver's eyes are closed for and sound an alert if they detect the driver might be asleep.

An intelligent camera must be capable of recognising shapes, lines, edges and colours, and of synthesising the information into a data set which enables it to decide 'is this a face or not?'

This seemingly complex process can be accomplished using a small number of standard functions. These functions operate as cascaded digital filters: the output of one filter is fed into the input of the next. The type, order and parameters of these filters define what the algorithm does. Filters are also used in audio processing, but video algorithms operate on 2D data rather than the 1D data found in audio signals, so their complexity is markedly greater.
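The cascade idea can be sketched in a few lines of plain Python. This is an illustration only, not code from OpenCV or any vendor library: the helper names `to_greyscale`, `threshold` and `cascade` are hypothetical, and the greyscale weights are the common ITU-R BT.601 luma weights, chosen here as an assumption.

```python
from functools import reduce

def to_greyscale(rgb_image):
    """Convert rows of (r, g, b) tuples into rows of 0-255 luma values.
    Uses integer BT.601 weights (an assumption of this sketch)."""
    return [[(299 * r + 587 * g + 114 * b) // 1000 for (r, g, b) in row]
            for row in rgb_image]

def threshold(level):
    """Build a filter that maps each pixel to 1 (>= level) or 0."""
    def apply(grey_image):
        return [[1 if p >= level else 0 for p in row] for row in grey_image]
    return apply

def cascade(*filters):
    """Chain filters so the output of each one feeds the input of the next."""
    def run(image):
        return reduce(lambda img, f: f(img), filters, image)
    return run

# The type, order and parameters of the filters define the algorithm.
pipeline = cascade(to_greyscale, threshold(128))

rgb = [[(255, 255, 255), (0, 0, 0)],
       [(200, 30, 30), (250, 250, 0)]]
binary = pipeline(rgb)
```

Swapping the order of the filters, or changing the threshold level, yields a different algorithm without touching the individual filters.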

There are broadly five categories of image processing functions:

- Colour space converters take an image and convert it into a more usable form, for instance converting an image into greyscale.

- Binary morphology comprises five sub-functions, which dilate, erode, open and close the buffer, and implement a threshold.

- Image transform filters include convolution (a way to implement high and low pass filters) and Hough transforms, which are used to detect simple shapes such as straight lines and circles.

- Feature detection includes functions such as edge, corner and blob detection. There are several algorithms associated with edge detection including the Sobel filter and the Prewitt filter.

- Face detection.
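Two of these categories, binary morphology and edge detection, can be illustrated with a minimal pure Python sketch. It assumes a 3x3 square neighbourhood, treats pixels outside the image as 0, and leaves the image border at 0 for the Sobel step; all function names are hypothetical and are not taken from OpenCV or the CogniVue library.

```python
def _morph(img, reducer):
    """Apply reducer (min or max) over each pixel's 3x3 neighbourhood.
    Pixels outside the image count as 0 (an assumption of this sketch)."""
    h, w = len(img), len(img[0])
    def at(y, x):
        return img[y][x] if 0 <= y < h and 0 <= x < w else 0
    return [[reducer(at(y + dy, x + dx)
                     for dy in (-1, 0, 1) for dx in (-1, 0, 1))
             for x in range(w)]
            for y in range(h)]

def erode(img):
    return _morph(img, min)      # pixel survives only if all neighbours are set

def dilate(img):
    return _morph(img, max)      # pixel is set if any neighbour is set

def open_(img):
    return dilate(erode(img))    # removes small specks

def close_(img):
    return erode(dilate(img))    # fills small holes

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def correlate3x3(img, kernel):
    """Slide a 3x3 kernel over the interior of img (no kernel flip)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(kernel[j][i] * img[y + j - 1][x + i - 1]
                            for j in range(3) for i in range(3))
    return out

def sobel_magnitude(img):
    """Approximate gradient magnitude as |gx| + |gy|."""
    gx = correlate3x3(img, SOBEL_X)
    gy = correlate3x3(img, SOBEL_Y)
    return [[abs(a) + abs(b) for a, b in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]

# A 3x3 block with a one-pixel speck in the corner, and a block with a hole.
speck = [[1, 0, 0, 0, 0],
         [0, 1, 1, 1, 0],
         [0, 1, 1, 1, 0],
         [0, 1, 1, 1, 0],
         [0, 0, 0, 0, 0]]
ring = [[0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 0, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0]]
opened = open_(speck)
closed = close_(ring)

# A vertical step edge in a small greyscale image.
step = [[0, 0, 10, 10] for _ in range(3)]
edges = sobel_magnitude(step)
```

In this sketch, opening removes the isolated speck while keeping the 3x3 block, closing fills the one-pixel hole, and the Sobel response peaks along the step between the dark and bright columns.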

In the past, electronics OEMs would have had to develop proprietary software to implement these functions. But this field has been boosted by the emergence of OpenCV, an open source library of computer vision software which can be cross compiled on to a variety of processor platforms. Compiled code can also run on popular operating systems, providing APIs for image capture, image manipulation and various filtering algorithms.

OpenCV provides more than 2500 computer vision functions, organised into a number of categories. It is platform neutral and aims to support PC based applications, as well as embedded systems. As a result, the typical requirements of embedded systems (code density and a minimised memory footprint) are not necessarily catered for. In addition, the way data is formatted in OpenCV has not been designed for writing and reading by a DMA engine, which could be a problem in some embedded systems.

Proprietary alternatives include CogniVue, which provides a library of functions for its CV220x devices. These are offered as image manipulation primitives accessible through an API in C. The library has been designed to match a port of OpenCV as closely as possible, but it provides only a subset of the OpenCV functions, has a different API and is optimised for specific CogniVue devices.

The CogniVue library's functions include:

- Basic image memory and manipulation

- Colour space conversion

- Statistical functions

- Image filtering

- Image feature detection

- Feature and object tracking

New hardware choices

Running software such as OpenCV is a highly compute intensive task. While well within the capability of a PC, it presents a challenge to the embedded developer, who must operate within tight size, power and cost constraints.

The choice of processor is therefore crucial and there are considerable advantages to choosing media optimised ARM based processors. The alternative is to use a general purpose DSP. Because such devices lack on board image sensing interfaces, they will result in a less integrated design, with a higher component count and a larger footprint.

By contrast, Freescale's i.MX53 processor – with an ARM Cortex-A8 core – is optimised for multimedia applications. Board support packages running on a low cost evaluation board enable the use of operating systems including Linux and Windows CE.

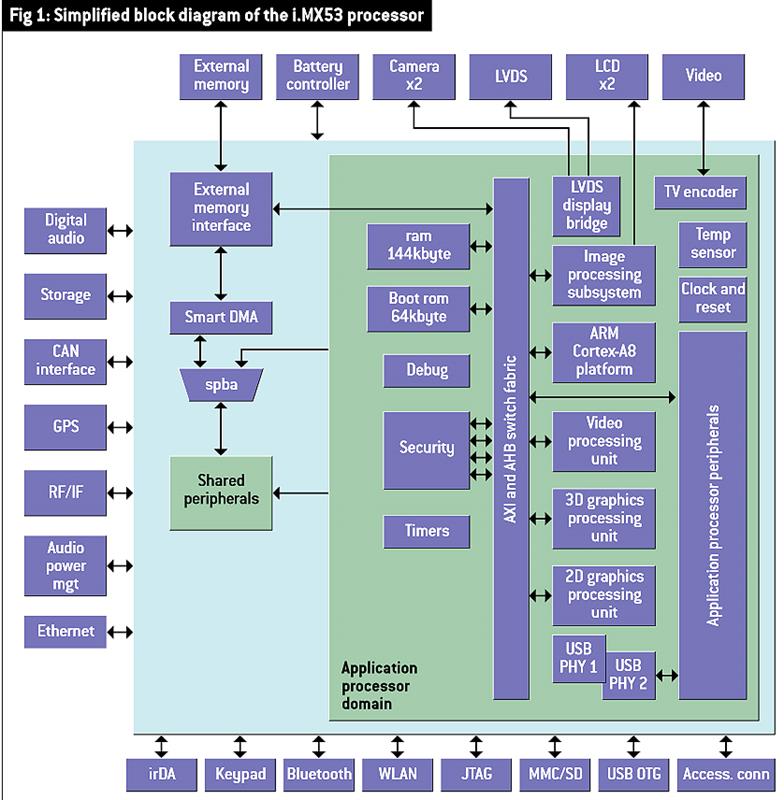

In the i.MX53, the Cortex-A8 core runs at 1GHz and DDR2/3 memory is supported at 400MHz. The device has two camera interfaces, a 20bit parallel interface and a bandwidth of 3Mpixel at 15frame/s, giving a total throughput of 45Mpixel/s (see fig 1).
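The throughput figure follows directly from frame size multiplied by frame rate, and the same arithmetic gives a rough ceiling on frame rate at other resolutions. The sketch below is a back of the envelope calculation only: the function names are hypothetical, and it assumes pixel throughput is the sole constraint, ignoring sensor timing, interface overheads and memory bandwidth.

```python
def pixel_throughput(pixels_per_frame, frames_per_second):
    """Pixels per second needed to sustain a given frame size and rate."""
    return pixels_per_frame * frames_per_second

def max_frame_rate(pixel_budget_per_second, width, height):
    """Upper bound on frame rate for a given resolution and pixel budget."""
    return pixel_budget_per_second / (width * height)

# Figures quoted in the text: 3Mpixel frames at 15frame/s.
budget = pixel_throughput(3_000_000, 15)

# Hypothetical example: the same budget applied to 640 x 480 frames.
vga_fps = max_frame_rate(budget, 640, 480)
```

The 640 x 480 result is a theoretical ceiling under the stated assumption, not a measured or specified figure for the device.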

The i.MX53 offers strong multimedia and graphics capabilities. It includes optimised blocks for the OpenGL ES 2.0 graphics library and OpenVG 1.1. Design support for computer vision system developers is available through www.imxcommunity.org, Freescale's i.MX forum site, where detailed instructions can be found on creating a cross compiled version of OpenCV for the i.MX53 Quick Start Board.

Freescale has also licensed CogniVue's Image Cognition Processing IP for use in automotive applications. This means devices such as the i.MX53 will be able to run CogniVue software for advanced driver assistance systems.

The i.MX53's display driver enables images to be displayed on a local LCD or output to an external display via LVDS or VGA. Data can also be sent via Ethernet to a networked computer. This would be suitable for a distributed system of multiple cameras in which image processing is performed locally, but where a central controller makes decisions based on multiple inputs.
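One way to picture such a distributed arrangement is for each camera node to send a compact report of its local processing result, leaving the central controller to combine them. The sketch below is purely illustrative: the `CameraReport` fields, the JSON encoding and the voting rule are all assumptions, and the network transport itself is omitted.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class CameraReport:
    """Hypothetical result of local image processing on one camera node."""
    camera_id: str
    object_detected: bool
    confidence: float

def encode_report(report):
    """Serialise a report to bytes, ready to send over the network."""
    return json.dumps(asdict(report)).encode("utf-8")

def decode_report(payload):
    """Rebuild a report from the bytes received by the controller."""
    return CameraReport(**json.loads(payload.decode("utf-8")))

def central_decision(reports, min_cameras=2, min_confidence=0.6):
    """Raise an event only if enough cameras agree with enough confidence."""
    votes = [r for r in reports
             if r.object_detected and r.confidence >= min_confidence]
    return len(votes) >= min_cameras

# Each node encodes its own result; the controller decodes and combines them.
payloads = [
    encode_report(CameraReport("cam-1", True, 0.9)),
    encode_report(CameraReport("cam-2", True, 0.7)),
    encode_report(CameraReport("cam-3", False, 0.2)),
]
received = [decode_report(p) for p in payloads]
alarm = central_decision(received)
```

Because only small reports cross the network rather than raw video, the bandwidth needed between the nodes and the controller stays low in this kind of design.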

Equally, the device can operate as an integrated image processor and controller, making decisions based on the image processing outputs and controlling equipment via its CAN port.

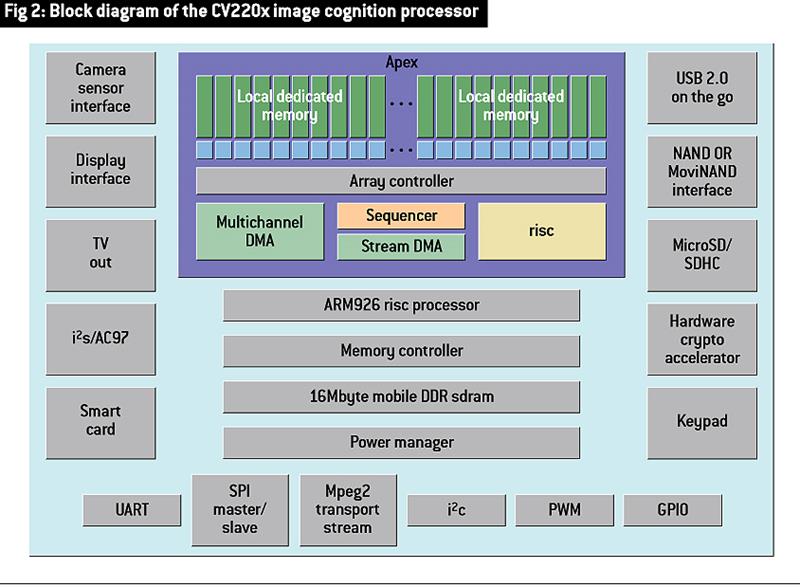

More closely tied to computer vision applications, devices in the CogniVue CV220x family feature an ARM9 processor running at 350MHz for housekeeping and allocation functions, along with an APEX core for image processing operations, which is the key to the part's high performance. The APEX block is an array of 96 processing elements running in parallel, each supported by 3kbyte of local memory and running at up to 180MHz. This parallel architecture provides for very low power operation (see fig 2).

The CV2201 also has 16Mbyte of stacked DRAM. This makes for a simple hardware implementation, offering low power consumption and a small footprint. The part contains a video D/A converter which can drive NTSC and PAL TV signals directly. Current parts offer USB and low speed serial ports, supporting operation primarily as a local device, but Ethernet enabled devices are on the road map.

CogniVue provides two evaluation kits. The reference development kit is a comprehensive development system offering access to all interfaces, while the CV2201 Prototype Design Kit (PDK) is a lower cost alternative. This camera module can be programmed via the USB port using preoptimised or customer developed libraries from the CogniVue software developer kit.

By combining an optimised processor with off the shelf image processing software, computer vision can be implemented quickly within tight power, cost and size constraints.

Richard Parker is a field application engineer for high end products with Future Electronics UK and Ireland.