The rules that govern the road were developed for human drivers, but with the rise of automation, the way in which services and people move around will begin to change. As such, road legislation will need to be reviewed and adapted to accommodate the new driver: the machine.

Human error is said to be the cause of the majority of road accidents. According to the Department for Transport (DfT), there were 1,770 road deaths in the UK in the year ending June 2018 alone. Insurer AXA has said that human error is responsible for 90% of traffic accidents, adding that it believes removing the driver from the driving seat is how road fatalities can be dramatically reduced. “Technology doesn’t have the limitations or lapses in concentration which humans do,” it noted.

It is, in fact, widely held that around 1.3 million road deaths a year worldwide could be prevented with the introduction of autonomous vehicles, and the UK Government adds that reducing such accidents could also contribute £2 billion of savings to the UK economy by 2030. Yet, in a survey of 1,000 British drivers conducted by IAM RoadSmart, more than a third of motorists said they believe the technology is a bad idea, with 45% saying they are “unsure”, while almost two-thirds believe a human should always be in control of a vehicle.

The reason behind this uncertainty is mostly attributed to a fear of relinquishing full control to the autonomous vehicle, a fear heightened by media attention to the few self-driving vehicle accidents that have occurred.

“People are killed on the roads every day by accidents that probably could have been eliminated by some type of assisted safety system, but those incidents aren’t advertised,” said Lance Williams, VP, automotive strategy, ON Semiconductor. “An accident in a self-driving car, however, is all over the news.”

But handing over the power to the machine raises difficult questions, such as machine morality – what does the car do in certain unavoidable situations which could result in injury or loss of life – and liability – who is responsible and accountable?

At this year’s electronica, New Electronics approached a number of industry experts to hear their point of view, but found that most were reluctant to comment on the record due to the question’s philosophical and personal nature. While the majority wished to make their statements anonymously, one individual admitted that it was a topic that “needed to be talked about”.

Also among the comments made was: “I don’t fully trust machines to make decisions based on their own experiences; I would like human intelligence built into the algorithms. But for autonomy to succeed, people will have to start trusting machines.” Trust is an area many single out as a problem: without it, none of the anticipated benefits, such as improved road safety and traffic conditions, can be realised. Consequently, a number of institutes and investing companies, as well as the Government, have been actively discussing and looking to develop new standards that address such concerns.

Because the topic is so complex, consultations have been open to the public for some time now. Among them is MIT’s Massive Online Experiment (MOE), known as the “Moral Machine”, which was launched to collate human responses to certain scenarios. The idea behind this, and many similar projects, is that the general consensus can be integrated into machine learning to create a more ethical, fairer and safer system.

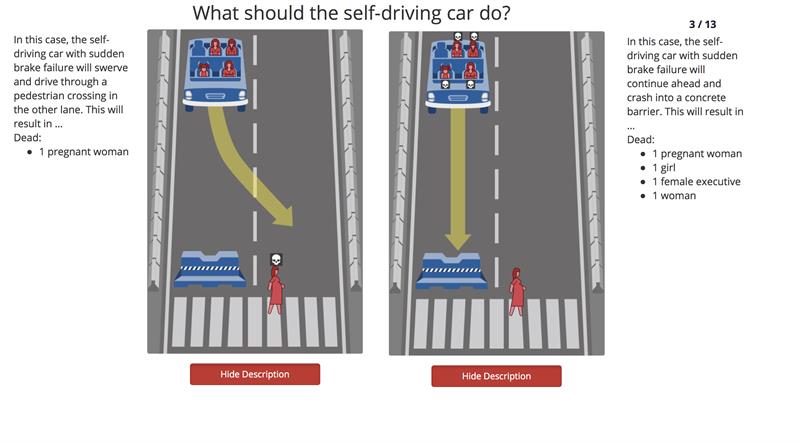

MIT’s test presents various situations based on the Trolley Problem, a well-known ethics quandary, introducing elements such as gender, age, law and status to determine whether certain factors affect a user’s decision.

For example, a fully autonomous vehicle carrying a passenger is travelling down a road when a group of pedestrians suddenly appears in its path. Should the car hit the group, or swerve and put the passenger or a pedestrian in danger?

MIT found that people generally take a utilitarian approach, in that they prefer autonomous vehicles to minimise casualties. In other words, they would favour a car carrying one driver swerving off the road to avoid ten people. However, respondents also revealed that they would be much less likely to use a vehicle programmed that way. “Most people want to live in a world where cars will minimise casualties,” explained Associate Professor Iyad Rahwan of MIT. “But everybody wants their own car to protect them at all costs.”

Earlier this year, NXP Automotive communications manager Monica Davis wrote about the consequences of such attitudes, commenting that they create a “social dilemma, as self-interest risks overpowering moral values”, along with a technical dilemma for the industry: “how does industry design algorithms that reconcile moral values and personal interest?”

The IEEE AI Ethics Initiative is presently looking into these matters and, as a result, has developed Ethically Aligned Design (EAD), which aims to set the global standards for ethics in autonomous and intelligent systems (A/IS). But given the complexity of the topic, it is probably fair to predict that the booklet, currently on version 2, will see further revisions.


Williams points to infrastructure as an area that needs more focus, explaining that, at present, the cars are well ahead of it. The Government Actuary’s Department (GAD) has also identified this as one of the most important areas in need of consideration. “At present, the road network is designed to accommodate the existing ‘driven’ transport. The combined effects of road traffic accidents, weather-related damage and ever increasing traffic volumes continue to make both repairs and design/expansion of the existing infrastructure a significant challenge for both Government and users,” GAD stated.

“Connected autonomous vehicles (CAVs) would require markings, signals and signs to be maintained to a higher standard than at present to make sure the instructions can be followed.” GAD also highlighted a greater need to maintain road surfaces, as CAVs may be less able to adapt to hazards such as potholes.

GAD believes that once proper infrastructure is established, traffic flow will benefit greatly, enabling vehicles to move more efficiently. It also envisages further benefits: self-driving vehicles could drop passengers off and park themselves, which could, in theory, make parking fines non-existent. However, it states that for this to be possible, cities and towns will have to be redesigned, with plentiful parking to avoid the risk of CAVs clogging the roads.

But Williams says this vision is “far off”, pointing to the current poor condition of the roads as something that will slow redesign. He also notes that construction work will cause further problems, as site barriers could be misplaced and cause errors in vehicle alignment.

A fundamental change in insurance policy will also be needed, GAD stated. With human drivers no longer at the wheel, responsibility may shift from the ‘named driver’ to the manufacturer. However, GAD pointed out that many manufacturers are so confident in their technology that they will readily accept liability.

Most recently, the Law Commission has launched an open consultation into “new rules for the UK’s self-driving future”. The consultation raises further questions for anyone willing to contribute, such as ‘should a new Government agency monitor and investigate accidents involving automated vehicles?’; whether ‘criminal and civil liability laws need to be modified’; and ‘should a car mount a pavement to let an emergency vehicle through like a human driver would?’

There are clearly many unanswered questions, with more appearing each year, and despite experts’ claims about the impressive technology available, self-driving cars will not be possible until debates such as these are settled. Further to this, GAD has pointed out that a mixture of self-driving and driven vehicles will actually make matters worse, with delays increasing. In other words, if we are ever to truly have autonomy, we need global acceptance, and that is going to take a long time.

MIT's Moral Machine lists a series of potential scenarios, posing different outcomes that the user must choose from.
